The third invited talk in our colloquium series in 2018 was given by Martin Garriga, at that time finishing his time as post-doctoral fellow at Politecnico di Milano’s Deep SE group, and now continuing as lecturer at the Informatics Faculty at National University of Comahue (UNComa) in Patagonia, Argentina. Martin, like several people at the Service Prototyping Lab, has been interested for quite some time in serverless computing, as evidenced by his ESOCC 2017 article on empowering low-latency applications with OpenWhisk and related tools (see details). In his colloquium talk, entitled «Towards the Serverless Continuum», he reflected on this work and proposed a wider view on a spectrum from mobile applications over edge nodes to, eventually, powerful cloud platforms.

The talk started off with compelling visuals from the Patagonia region, adding to the already strong reasons to have a visit in the other direction in the future. Moving on to the technical presentation, reasons were presented for the systematic introduction of a serverless continuum. Applications, including mobile apps, become more and more compute-intensive and single devices are unable to accommodate the needs especially when also considering energy efficiency. Offloading to such a continuum is needed but software developers struggle to identify the right target. Should they move logic and/or data to the edge, to the cloud, or to still unclear fog architectures? Furthermore, such offloading increasingly blurs the clearness of the execution model for mobile devices and apps, rendering existing education in this domain partly worthless. Indeed, in many institutions, including at Zurich University of Applied Sciences, no continuum-aware integrated module is offered on the teaching side. Trade-offs between the consumption of constrained resources, high processing latency and low battery power as well as monetary and strategic cost of edge and cloud services need to be found. The requirements for offloading are thus: dynamic, transparent, and QoS-aware.

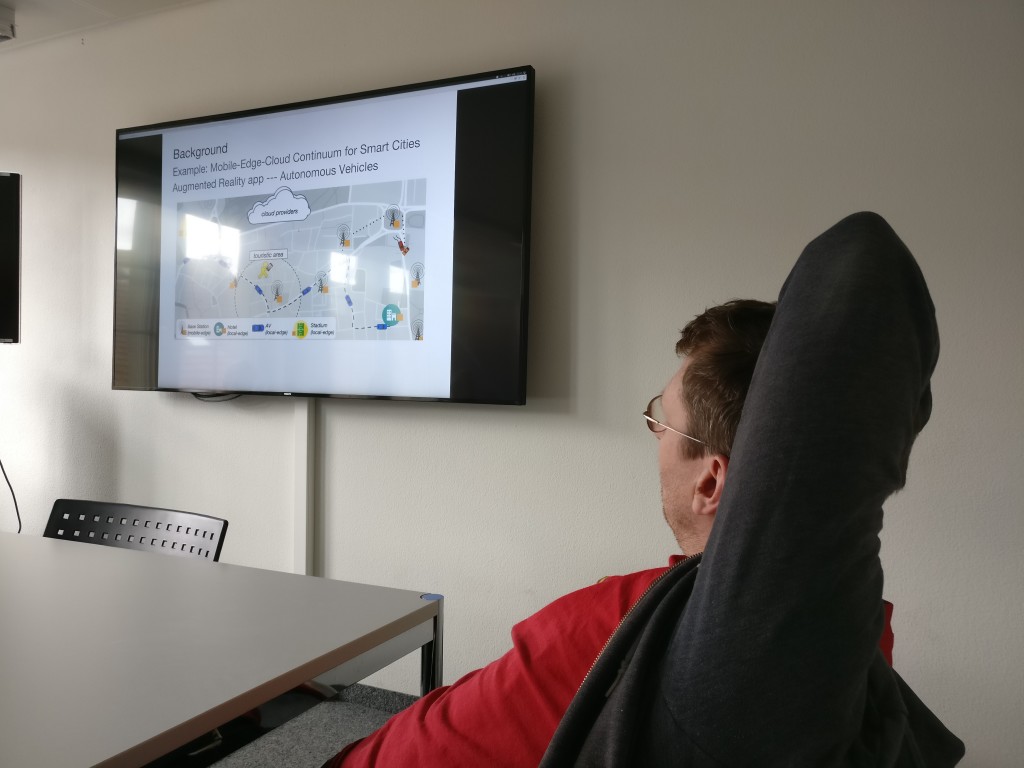

A use case in smart cities was presented by the speaker to confirm the advantages of a systematic offloading approach despite the limitations due to the still immature technology and unclear definitions around serverless computing. However, the consistent use of functions everywhere along the continuum brings several advantages, including opening the door for a generic code caching concept, the ability to migrate back and forth over smaller or larger distances, and better vertical scaling. Conceptually, pull-based policies will be largely complemented or even replaced by push-based FaaS platforms.

As usual, the highly interesting talk sparked an inspirational debate with lots of technical questions. For instance, how lambdas could run on the client in the presence of CPU architecture differences and the fact that code is sometimes not directly executable. Furthermore, it is not clear whether the client or the continuum domain would decide which function to run where. A protocol would need to consider negotiation with criticality and resource account. Latency sources need to be distinguished. In experiments, the CPU was more of a limiting factor than the network. Some of the relative advantages of functions over Docker containers can be attributed to (non-optimal) ENORM containers, considering that functions also often run in containerised isolation in production. Still, producing and deploying containers on demand without developer interference is beneficial not just for performance reasons, but also for higher security as evidenced by the wide-spread presence of vulnerabilities in developer-provided Docker containers. Furthermore, edge systems need to be better described. For instance, they should show a better (lower) response time but in the experiments, due to overloading and wait queues, actually showed higher times.

We would like to thank Martin for his visit and the insight into his findings. His slides are (unfortunately downscaled due to blog quotas) available for download.