Maciej Malawski from AGH University of Science and Technology in Kraków, Poland, visited us today to give a colloquium talk titled «Can we use Serverless Architectures and Highly-elastic Cloud Infrastructures for Scientific Computing?» and to discuss research around the wider topics of workflows and cloud function compositions. This post summarises the talk and the subsequent discussion mixed with further general reflections on the state of serverless applications.

The speaker is affiliated with the Distributed Computing Environments (DICE) team in the academic computing centre CYFRONET which among other tasks maintains the TOP500 machine Prometheus, the most powerful supercomputer in Poland at this time. Furthermore, he is affiliated with the Department of Computer Science which hosts around 800 students. His research evolves mostly around scientific workflows for scientists which carry out a mix of small and large tasks in diverse structures, mostly represented as Direct Acyclic Graphs (DAGs). Among the scenarios for such workflows are earthquake predictions (applied in a Californian use case) and flood protection calculations. Research around scientific workflows is coping with the transition from traditional High-Performance Computing (HPC) clusters to Infrastructure-as-a-Service (IaaS) environments in private and public cloud installations. Beyond early cloud-based workflow execution systems such as various adaptations of Condor and the popular Pegasus system, AGH has contributed HyperFlow which features a light-weight programming model involving JSON descriptors and Node.js function wrappers. HyperFlow is already integrated with multi-cloud adapters such as the ones produced in the EU-funded PaaSage project. Thus, it is destined as suitable testbed for coupling workflows to stateless and transient cloud functions.

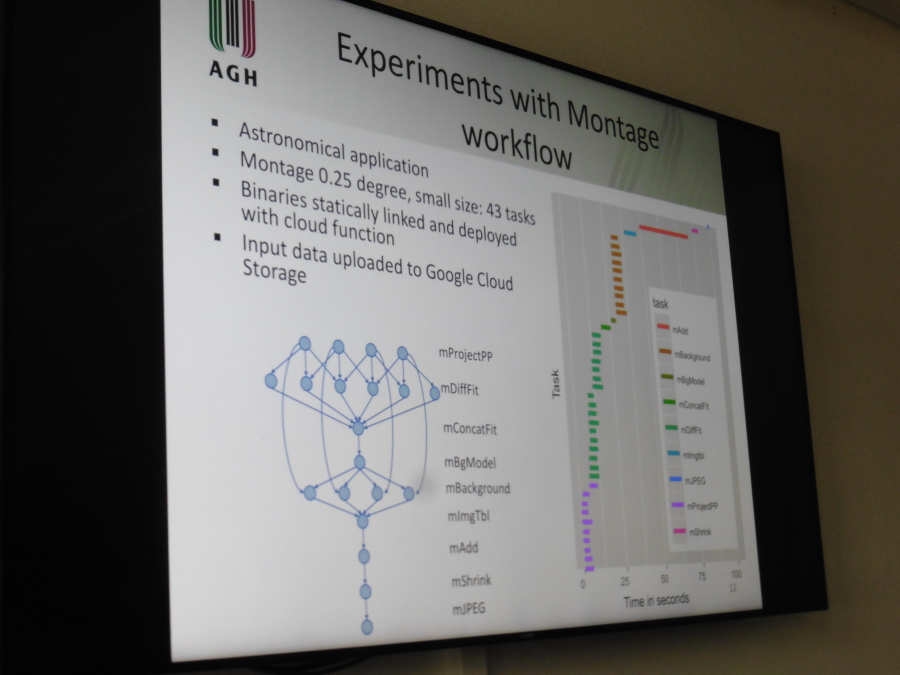

In Function-as-a-Service (FaaS) environments, the infrastructure or platform is supposed to do everything: startup, parallel execution, load balancing, autoscaling and further service management tasks. This leads to multiple architecture options for integrating workflows into cloud functions, from light-weight replacement of the typically used Virtual Machines (VMs) with functions to the more sophisticated substitution of the workflow description itself with a cloud function composition language such as AWS Step Functions, IBM Composer or Fission Workflows. In total, four architectural options have been presented and compared by Maciej. Based on this comparison, a proof-of-concept cloud function workflow was deployed into the Google Cloud and tested extensively.

Interestingly, in our own publication «FaaSter, Better, Cheaper: The Prospect of Serverless Scientific Computing and HPC» presented at CARLA 2017 many of the observations, although independently carried out, match the ones presented by Maciej, although he and his co-authors went much deeper into the detailed multi-month analysis of the runtime behaviour including instance re-use and the influence of the memory allocation on performance. For instance, their findings include that although parallelism was observed, it was sometimes limited, and furthermore performance was reduced significantly due to up to 90% of the execution time being spent on data storage tasks. Variability and variances of execution times (sometimes including network traffic, sometimes not) were generally high. The differences between the public FaaS providers are remarkable. To mention one metric, AWS and IBM Cloud generally yielded stable response times, whereas Google Cloud and Microsoft Azure did less so. Moreover, Google unexpectedly boosted performance in about 5% of all cases which is an undeterministic but welcome behaviour. The distributions of execution overheads and region influences also contribute to the overall complex performance charts. The AGH team spent considerable effort on checking for instance re-use and the influence of cold-start effects. Possible techniques include MAC address recording, global variables in Node.js, and leftover files in the temporary file system. This experiment alone could be refactored and turned into a standalone check to produce probablistically exploitable machine-readable FaaS offering descriptions.

The insightful talk provoked several questions concerning the actual usefulness of cloud functions for both performance-intensive and I/O-intensive applications. The colloquium participants also discussed further optimisation strategies, some of obviously only academic value, while some others linked to potential cost savings.

We would like to thank Maciej for his talk and presence and hope to see him back in Zurich in December 2018 for the European Symposium on Serverless Computing and Applications!