We received our RPLIDAR this morning and, just like kids on Christmas Day, we were very eager to play with it right away.

But I’ll hold my horses, since I can hear you asking: “And what exactly is an RPLIDAR?”

An RPLIDAR is a low-cost LIDAR sensor (i.e., a light-based radar, or “laser scanner”) from RoboPeak, suitable for indoor robotic applications. It is basically a cheaper version of that weird rotating thing you see on top of Google’s self-driving cars. You can use it for collision avoidance and to let the robot quickly figure out what’s around it.

We bought some sensors for our upcoming robotic fleet that will take over the world (the true aim of our Cloud Robotics initiative), and this is the first arrival.

I didn’t have ROS installed on my system and I really wanted to get going, so while TMB proceeded with the HW unboxing and configuration, I got started with the SW on my laptop.

Here’s the RPLIDAR in all its might:

Mind you, I run Ubuntu 14.04, so if your OS is different, the process might need some adjustment.

The whole thing is quite straightforward. The RPLIDAR starts spinning as soon as you plug it into your laptop’s USB port. The software part involves installing ROS, giving it some basic configuration, creating a workspace, downloading the ROS node for the RPLIDAR, and building it with catkin. Although it looks like a lot of commands, it takes very little time.

Anyhow, here’s the quick and dirty list of commands I entered to get it working:

```bash
### Install ros (Jade) according to this guide: http://wiki.ros.org/jade/Installation/Ubuntu
sudo sh -c 'echo "deb http://packages.ros.org/ros/ubuntu $(lsb_release -sc) main" > /etc/apt/sources.list.d/ros-latest.list'
sudo apt-key adv --keyserver hkp://pool.sks-keyservers.net:80 --recv-key 0xB01FA116
sudo apt-get update
sudo apt-get install ros-jade-desktop-full

### init ros
sudo rosdep init
rosdep update
echo "source /opt/ros/jade/setup.bash" >> ~/.bashrc
source ~/.bashrc
sudo apt-get install python-rosinstall

### Create a ROS Workspace
mkdir -p ~/catkin_ws/src
cd ~/catkin_ws/src
catkin_init_workspace

### Clone the ROS node for the Lidar in the catkin workspace src dir
git clone https://github.com/robopeak/rplidar_ros.git

### Build with catkin
cd ~/catkin_ws/
catkin_make

### Set environment when build is complete
source devel/setup.bash

### Launch demo with rviz
roslaunch rplidar_ros view_rplidar.launch
```

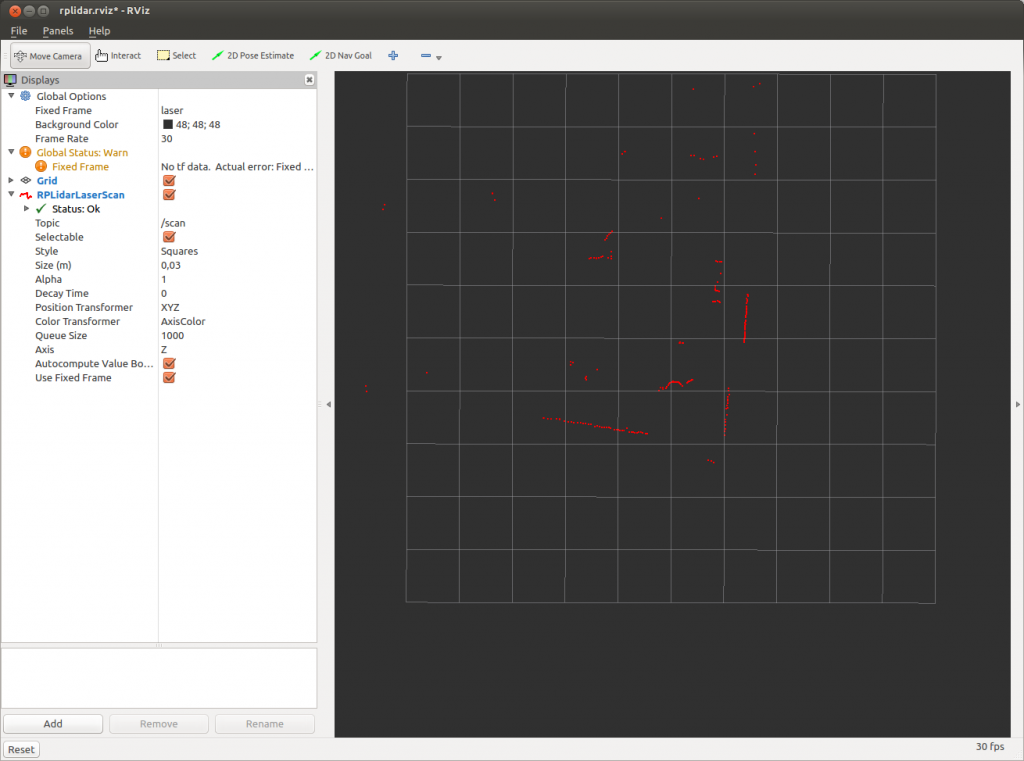

Result:

Rviz will pop up and show a background grid. The “view” from the laser scanner is drawn in red. The laser scanner sits at the center of the grid. Its range is roughly 15 cm to 6 meters, so you’ll see everything around it on its scanning plane within that range.

Troubleshooting

If you get permission errors when ROS accesses the USB device, take a look here:

http://question2722.rssing.com/browser.php?indx=42655234&last=1&item=4

TL;DR – run this:

```bash
sudo gpasswd --add ${USER} dialout
su ${USER}
```

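Then cd to the catkin workspace directory and rerun the last two commands of the setup list (source devel/setup.bash and roslaunch rplidar_ros view_rplidar.launch).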
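A small helper can confirm that the group change has taken effect. It is a minimal sketch. `check_serial_access` and `in_dialout` are hypothetical names. /dev/ttyUSB0 is only the usual default device, not necessarily the one your RPLIDAR gets.

```bash
#!/usr/bin/env bash
# Hypothetical helper: check whether the current user can read and write
# the serial device the RPLIDAR shows up as (default: /dev/ttyUSB0).
check_serial_access() {
  local dev="${1:-/dev/ttyUSB0}"
  if [ ! -e "$dev" ]; then
    echo "missing: $dev"
    return 1
  fi
  if [ -r "$dev" ] && [ -w "$dev" ]; then
    echo "ok: $dev"
    return 0
  fi
  echo "no access: $dev"
  return 2
}

# Hypothetical helper: is the current session's user in the dialout group?
# Group changes only apply to new login sessions, hence the su above.
in_dialout() {
  id -nG | tr ' ' '\n' | grep -qx dialout
}
```

A `missing` result usually means the lidar isn't plugged in or has a different device name. A `no access` result suggests the group change hasn't reached your current session yet.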

I followed the same steps to get scan data from an RPLIDAR A1, but I get an error after running “roslaunch rplidar_ros view_rplidar.launch”. I also ran the following lines before starting the rplidar node:

```bash
ls -l /dev | grep ttyUSB
sudo chmod 666 /dev/ttyUSB0
```

But it didn’t help. It gives the error:

[rplidarNode-2] process has died [pid 3116, exit code 255,]

Can you please help me?

Hi Memona,

It’s kind of hard to tell what the error in your configuration could be, but it looks like the launch file can’t find the correct USB device or is missing a symlink to it. Please check whether you have installed the udev rules that create a symlink to the device. A script to create those rules can be found here: https://github.com/robopeak/rplidar_ros/blob/master/scripts/create_udev_rules.sh

For more help, I would need a bit more information about the error or your setup.

I hope this hint helps you get it running.
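For reference, here is a minimal sketch of such a rule, written out by a small shell function. It assumes the RPLIDAR's USB adapter is a Silicon Labs CP210x (vendor 10c4, product ea60). To our knowledge, that is what the linked rplidar_ros script targets, but you can check yours with `lsusb`. The function name `write_rplidar_rule` and the `0666` mode are our own choices.

```bash
#!/usr/bin/env bash
# Hypothetical helper: write a udev rule that gives the RPLIDAR a stable
# symlink (/dev/<name>) and read/write permissions for all users.
# Assumes a CP210x USB-serial adapter (idVendor 10c4, idProduct ea60).
write_rplidar_rule() {
  local dir="${1:-/etc/udev/rules.d}" name="${2:-rplidar}"
  printf 'KERNEL=="ttyUSB*", ATTRS{idVendor}=="10c4", ATTRS{idProduct}=="ea60", MODE:="0666", SYMLINK+="%s"\n' \
    "$name" > "$dir/$name.rules"
}
```

Writing to /etc/udev/rules.d needs root. You can first point the function at a scratch directory to inspect the output. After installing the rule, reload with `sudo udevadm control --reload-rules && sudo udevadm trigger` and replug the lidar. /dev/rplidar should then appear.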

Thanks, loeh. There was a problem with my lidar; perhaps it was burned out. I tried the same procedure with another lidar, and now it is working and SLAM produces a map.

Hi,

I am working on my senior design project: a drone with six motors that can fly without a remote controller, using an RPLIDAR and a flight control board. I am using a Raspberry Pi running Ubuntu, and I am wondering which ROS release I should install, and how I can modify the drone to fly at a certain altitude.

Thanks,

Hi Mohammed,

we are by no means hardware experts. However, if you are on Ubuntu 16.04, the “default” ROS release is Kinetic: http://wiki.ros.org/kinetic
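As a rough guide, each ROS 1 release is built for a specific Ubuntu release. The sketch below maps an Ubuntu codename, as printed by `lsb_release -sc`, to a matching ROS 1 release. `ros_distro_for` is a hypothetical helper, and the table only lists the pairings we are sure of.

```bash
#!/usr/bin/env bash
# Hypothetical helper: map an Ubuntu codename (lsb_release -sc) to the
# ROS 1 release built for it. Fails for codenames not in the table.
ros_distro_for() {
  case "$1" in
    trusty) echo jade ;;     # 14.04, as used in this post (Indigo is the LTS option)
    xenial) echo kinetic ;;  # 16.04
    bionic) echo melodic ;;  # 18.04
    focal)  echo noetic ;;   # 20.04
    *) echo "no known ROS 1 release for '$1'" >&2; return 1 ;;
  esac
}
```

The following command installs the matching desktop-full package and does nothing if the codename is unknown: `distro="$(ros_distro_for "$(lsb_release -sc)")" && sudo apt-get install "ros-${distro}-desktop-full"`.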

If you are using two units, how can you tell them apart in the code? There doesn’t seem to be a device ID in the data. Any ideas…

There is no device ID in the data, but you’ll typically start two different ROS nodes reading from two different devices (e.g., /dev/ttyUSB0 and /dev/ttyUSB1). Each node then publishes on its own ROS topic (e.g., /front_scan and /rear_scan).

What we typically do is use a udev rule that adds a symlink with a logical name for each of our RPLIDARs. See for example: https://github.com/icclab/icclab_turtlebot/blob/turtlebot2/root_files/etc/udev/rules.d/77-rplidar.rules
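Putting the two ideas together gives the following sketch, which starts one rplidar_ros node per device. It makes some assumptions:
- udev symlinks such as /dev/rplidar_front and /dev/rplidar_rear already exist (hypothetical names).
- front_laser and rear_laser are placeholder frame ids.
- `start_lidar` is a hypothetical helper.

`serial_port` and `frame_id` are rplidar_ros node parameters. The node publishes on `scan`, which is remapped per instance.

```bash
#!/usr/bin/env bash
# Hypothetical helper: start one rplidar_ros node for one device.
# Sets the node name, the serial_port and frame_id parameters, and remaps
# "scan" so each unit publishes on its own topic.
# With DRY_RUN=1 the command is printed instead of executed.
start_lidar() {
  local name="$1" port="$2" topic="$3" frame="$4"
  set -- rosrun rplidar_ros rplidarNode "__name:=$name" \
    "_serial_port:=$port" "_frame_id:=$frame" "scan:=$topic"
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "$*"
  else
    "$@" &   # run in the background so both nodes can start
  fi
}
```

With roscore running, the following starts both units:
- `start_lidar front_lidar /dev/rplidar_front /front_scan front_laser`
- `start_lidar rear_lidar /dev/rplidar_rear /rear_scan rear_laser`
- `wait`

Give each unit its own frame_id so their transforms don't collide. In practice, two `<node>` entries in a launch file are the tidier way to do the same thing.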

kazi ataul goni (asked Jan 14 ’19):

In an industrial setting, a robot will pick up apples and sort them. The robot moves fast, so if any human comes near it, it should slow down. For that purpose, I want to use an RPLIDAR A2 mounted in a fixed position to detect whether any human or other obstacle is approaching the danger zone. So far, using the Rplidar Python package, I have been able to extract data from it. As I am totally new to this, I do not know how to achieve the rest.

I was thinking I could map the environment beforehand using Hector SLAM, which I have seen here, so that the robot knows its environment. Later, when the environment changes, it could decide whether a human or obstacle is near the robot. Once I have the map of the environment, what would be the next step?

I would be very glad if you could give me an idea of how to achieve this.

Hi Kazi,

what you want to do is pretty standard and is implemented in the move_base ROS package for any (supported) robot.

Basically, you navigate a map you have built with SLAM (e.g., gmapping or Google Cartographer) and use the lidar for obstacle avoidance.

I suggest you have a look at the turtlebot tutorials to see how it’s done.

This question has nothing to do with programming: does anyone know the screw size for the standoffs on the RPLIDAR?

I am getting the output as described, but how do I use it for collision avoidance? I just want my robot to stop if it encounters an obstacle; the robot uses RTK GPS for automatic navigation.

Hi Siddhart,

in ROS you should use a package like move_base (http://wiki.ros.org/move_base) to build cost maps of the obstacles from the lidar and navigate around them.

In ROS 2 there’s a newer, more modular design: https://navigation.ros.org/getting_started/index.html
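For a bare "stop if something is close" rule, the core decision is just a threshold on the ranges in one scan. The sketch below shows that logic only and makes several assumptions:
- `obstacle_check` is a hypothetical helper.
- The 0.5 m stop distance is an arbitrary example.
- The 0.15 m minimum mirrors the roughly 15 cm minimum range mentioned in the post.

Something like `rostopic echo -n 1 /scan/ranges | obstacle_check 0.5` should feed one scan into it. We have not double-checked the exact list formatting `rostopic echo` uses for this field, so adjust the parsing if needed.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read one scan's ranges (numbers separated by commas,
# spaces, brackets or parentheses) on stdin and print STOP if any valid
# reading is closer than the stop distance, otherwise GO.
# Readings below range_min, and non-numeric values like inf/nan, are ignored.
obstacle_check() {
  local stop_dist="${1:-0.5}" range_min="${2:-0.15}"
  awk -v stop="$stop_dist" -v rmin="$range_min" '
    BEGIN { hit = 0 }
    {
      # Turn list punctuation into spaces so each reading becomes a field.
      gsub(/[(),]/, " "); gsub(/\[/, " "); gsub(/]/, " ")
      for (i = 1; i <= NF; i++) {
        # Skip anything that is not a plain number (inf, nan, "---", ...).
        if ($i !~ /^[0-9]*\.?[0-9]+([eE][-+]?[0-9]+)?$/) continue
        r = $i + 0
        if (r >= rmin && r < stop) hit = 1
      }
    }
    END { print (hit ? "STOP" : "GO") }'
}
```

On a real robot you would run the same check inside a node subscribed to the scan topic, or let move_base's costmaps handle it as suggested above. A shell pipe is only handy for trying out thresholds.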

Hi, how do I make sure the actual position of the RPLIDAR on my base matches the one in the URDF file? I am not sure about the dimensions and such.

Unless you have a fixed (i.e., known) mounting point, I guess your best option is to measure and calibrate with what you see. Even with some small error, mapping and AMCL will still work.
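Once you have measured the mount, the numbers go into a fixed URDF joint between the robot base and the lidar frame. The sketch below turns measurements in centimetres (plus an optional yaw in degrees) into such a joint. `urdf_laser_joint` is a hypothetical helper. The joint name `laser_joint` and the link names `base_link`/`laser` are also assumptions; `laser` is, to our knowledge, the rplidar_ros default frame_id. Match them to your own URDF.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print a fixed URDF joint for the lidar from measured
# offsets in centimetres (x forward, y left, z up, from base_link to the
# scan centre) and a yaw in degrees. URDF expects metres and radians.
urdf_laser_joint() {
  awk -v x="$1" -v y="$2" -v z="$3" -v yaw="${4:-0}" 'BEGIN {
    pi = atan2(0, -1)
    printf "<joint name=\"laser_joint\" type=\"fixed\">\n"
    printf "  <parent link=\"base_link\"/>\n"
    printf "  <child link=\"laser\"/>\n"
    printf "  <origin xyz=\"%g %g %g\" rpy=\"0 0 %g\"/>\n", x / 100, y / 100, z / 100, yaw * pi / 180
    printf "</joint>\n"
  }'
}
```

For a lidar measured 10 cm ahead of and 20 cm above the base origin, `urdf_laser_joint 10 0 20` prints an origin of `xyz="0.1 0 0.2"`. With the robot's URDF loaded and robot_state_publisher running, `rosrun tf tf_echo base_link laser` shows the transform actually in use. You can then nudge the values until the scan lines up with walls in rviz.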