Two of the most influential robotics events of 2016, ROSCon and IROS, were conveniently co-located in South Korea during the second week of October.

We had previously attended ROSCon 2015 in Hamburg, but this was our first time at the International Conference on Intelligent Robots and Systems (IROS), held this year in Daejeon.

The two events are very different in nature: ROSCon is a narrowly focused developer conference for Robot Operating System (ROS) enthusiasts and practitioners, while IROS is a large academic conference covering most of the (very wide) area of robotics research.

As a result, a visitor comes away from each of them with quite different impressions.

ROSCon is single-track, very focused, and energetic (450 attendees). It deals with near-production-ready systems, implementations, the general state of ROS(2) development, and development based on ROS.

IROS, by contrast, is extremely large, with several parallel tracks, and feels somewhat scattered because of the sheer number of talks and papers being presented.

I imagine this works well for a robotics researcher, who will probably be able to carve out a schedule fitting their specific interests, but it was a bit too crowded for yours truly, who was trying to get a general understanding of the state of the field and the role ROS is playing in it.

Hence it’s no surprise that I enjoyed ROSCon as a whole way more, thanks to its single-track format, good-quality talks, and my having the background to follow all the technical details. IROS, on the other hand, gave me the impression of having extremely high-quality talks side by side with less compelling work.

It would take too long to go through the entire program of the two events (by the way, videos of the ROSCon talks will be online soon), so I will report here only the highlights of the most interesting things we saw.

- Gazebo 7 is out and there’s a big focus on cloud-based simulation and Web-based clients for visualization

- William Woodall and Deanna Hood gave an update on the state of the ROS 2 implementation and its design, which leverages a common core for the different supported programming languages (e.g., C, Python)

- TurtleBot3 has been unveiled and will ship next year. It’s smaller, cheaper, and more modular (3D printer friendly!)

- Victor and the great guys @ Erle Robotics have come up with a smart way to build modular robots out of easily composable hardware. Their approach is called H-ROS, and it’s an elegant way of splitting hardware components along the lines of ROS 2 nodes, providing power and connections through simple Ethernet cables. They even got DARPA funding for it. Congrats!

- Our friends from Rapyuta Robotics chaired a very interesting and crowded “birds of a feather” session on interconnected robots and the main issues in deploying them in practice

- Interesting work on continuous integration (CI) for ROS builds was presented by OSRF’s Dirk Thomas and by the people from Fetch, who are doing “physical CI”: including the physical robots in the testing loop, with all the issues this causes

IROS required its own mobile application to navigate the plethora of talks and events (e.g., robotic competitions!). I only managed to see a limited subset of the talks, but one of the most interesting things I found was the special forum on deep learning / AI, in particular Sergey Levine’s talk about his work on robots autonomously learning manipulation.

A very grounded outlook on autonomous driving was given by the CEO of the Toyota Research Institute, Gill Pratt. His point is that, statistically speaking, humans are very good drivers, with roughly one death per 1 billion kilometers driven worldwide. Hence, if autonomous driving is to replace human drivers, it has to do better than that, which means focusing on the hard situations rather than the simple ones (e.g., driving on a highway).

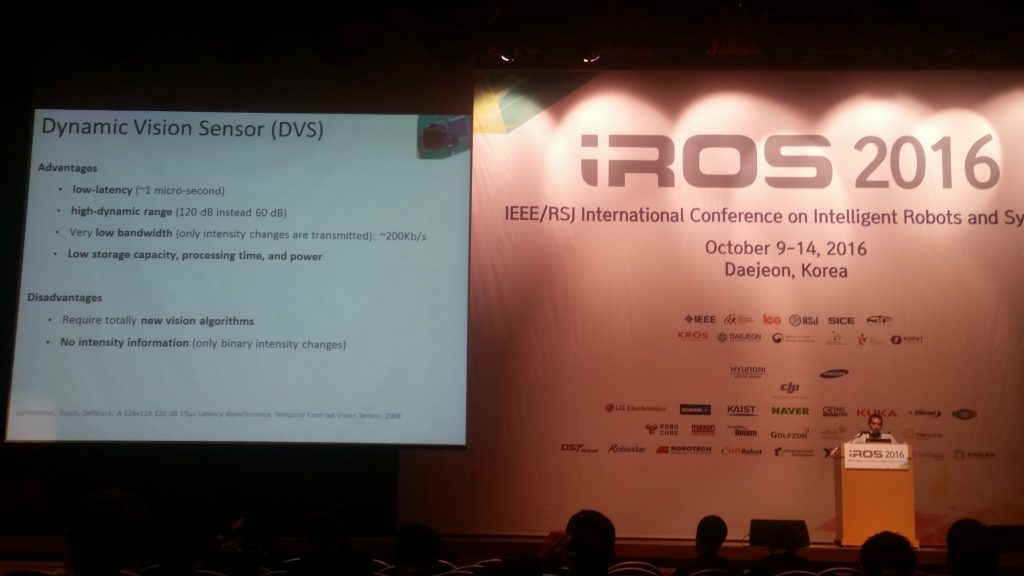

Finally, my favorite IROS talk was given by UZH’s Davide Scaramuzza in the context of the RSJ Tutorial. The title was “Visual Odometry and SLAM: present, future, and the robust-perception age”, and he really did manage to put together a comprehensive overview of the field, from its early days (40 years ago) all the way to the latest cutting-edge algorithms that allow a drone to perform visual odometry (localization through a camera stream) in a few milliseconds. Apart from the depth and clarity of the exposition, what impressed me was the breadth of the research he and his team are conducting, spanning all the way from software down to new event-based cameras (a.k.a. dynamic vision sensors, DVS) and related algorithms.

All in all, it was a very formative week in which we came to appreciate the different stages of maturity of different robotic tasks: some ready for production, some still cutting-edge research. Food for thought: a lot for our Cloud Robotics initiative to digest, but a great context in which to direct our work in this new and exciting area.
