Have you created popular, frequently invoked JavaScript (Node.js) functions for execution in Google Cloud Functions? If so, the economics of FaaS may turn against you due to the per-invocation pricing. You might want more options, both for testing the same functions locally and for deploying them into an environment with fixed monthly pricing. This blog post explains step by step how to migrate functions from a FaaS environment into a container environment with fixed monthly pricing. The running example uses Node.js functions running in Google Cloud Functions, although the procedure applies similarly to other combinations.

The prerequisite for this HOWTO is a Google Cloud account with at least one deployed function. Furthermore, this tutorial describes the migration path into a Docker container running at APPUiO, the Swiss Container Platform, using an OpenShift-managed combination of Snafu and a persistent volume container to safeguard your functions. An account on an OpenShift instance is therefore also necessary. The APPUiO public cloud runs the latest version, 3.4, which improves the user experience over previous versions and is therefore used in the tutorial. The HOWTO shows the process on both the GUI path and the command-line path of OpenShift, followed by the command-line operations of Snafu.

The starting point in Google Cloud is a set of deployed functions. The list of functions can be retrieved with `gcloud beta functions list` or via the web interface, which is shown in the following screenshot.

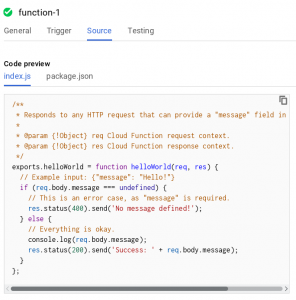

The function referenced above is the unmodified hello world function offered by the provider. For a better understanding of its invocation later on, its implementation is shown below.

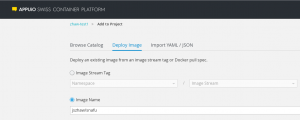
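For readers who cannot make out the screenshot, here is a minimal sketch of what an HTTP-triggered hello world function of this kind typically looks like, together with a tiny stand-in response object for calling it locally. The function name `helloWorld`, the `message` field and the helper `createMockResponse` are assumptions chosen for illustration and may differ from the code shown above.

```javascript
// Assumed shape of an HTTP-triggered function: it receives Express-style
// request and response objects. Names are illustrative, not from the screenshot.
function helloWorld(req, res) {
  const message = req.body && req.body.message;
  if (message === undefined) {
    res.status(400).send('No message defined!');
  } else {
    res.status(200).send('Success: ' + message);
  }
}

// The handler is made available to the platform through the module's exports.
exports.helloWorld = helloWorld;

// Hypothetical helper: a minimal stand-in for the response object that
// records the status code and body so the function can be called locally.
function createMockResponse() {
  const res = { statusCode: null, body: null };
  res.status = (code) => { res.statusCode = code; return res; };
  res.send = (body) => { res.body = body; return res; };
  return res;
}

// Local invocation with a fake request body.
const res = createMockResponse();
helloWorld({ body: { message: 'hello' } }, res);
console.log(res.statusCode, res.body); // prints: 200 Success: hello
```

Calling the function this way needs neither Google Cloud nor a container, which makes it a convenient sanity check before and after the migration.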

In order to run this function in a container through Snafu, the open source Swiss Army Knife of Serverless Computing, first prepare your container execution environment. The following screenshot shows how a dockerised version of Snafu can be imported in the OpenShift web console.

(The recommended way is to build the image locally from the Dockerfile by navigating to Catalog -> Python 3.5, although this route has not been tested for this HOWTO.)

Subsequently, you will have to choose a name for the deployment. The running example simply uses `functions`, but any other name can be chosen as well. Besides the name, more deployment options can be configured, although the default values are suitable.

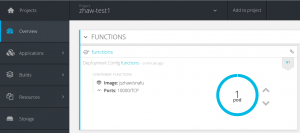

Once confirmed, you will be taken to the overview screen. The application is already running, but data would be lost during restarts. The next step is therefore to add a volume container which manages the files for a certain part of the instance filesystem.

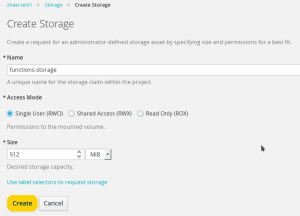

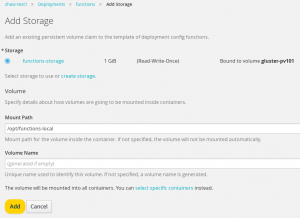

The storage configuration consists of two steps. First, a volume claim of a certain size needs to be configured (in this case 512 MiB, named `functions-storage`); second, it needs to be integrated into the container instances. Snafu reads user-supplied functions in single-tenant mode from `/opt/functions-local`, which is therefore the directory of choice for persisting functions across container scaling or restarts.

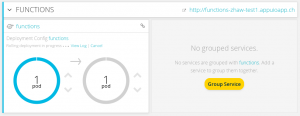

During the reconfiguration, the container is automatically restarted and includes the specified directory from the volume. The OpenShift overview page nicely displays an animated rolling update mechanism which is triggered by any such reconfiguration.

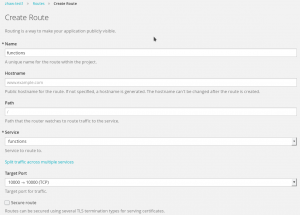

Note that compared to the previous overview screenshot, the application is now publicly accessible through an endpoint represented by the given URL. This endpoint terminates a route which was configured through OpenShift’s route configuration as shown below.

Alternatively, the entire application setup can be performed on the command line using OpenShift’s `oc` command-line tool. The following snippet shows both the commands entered and the expected answers.

    $ oc new-app --name functions jszhaw/snafu
    --> Found Docker image fee4903 (32 minutes old) from Docker Hub for "jszhaw/snafu"
        * An image stream will be created as "functions:latest" that will track this image
        * This image will be deployed in deployment config "functions"
        * Port 10000/tcp will be load balanced by service "functions"
          * Other containers can access this service through the hostname "functions"
        * WARNING: Image "jszhaw/snafu" runs as the 'root' user which may not be permitted by your cluster administrator
    --> Creating resources ...
        imagestream "functions" created
        deploymentconfig "functions" created
        service "functions" created
    --> Success
        Run 'oc status' to view your app.

    $ oc volume dc/functions --add --name=functions-storage --type pvc --claim-name=functions-pvc --claim-size=256Mi --overwrite --mount-path=/opt/functions-local
    warning: volume "functions-storage" did not previously exist and was not overriden. A new volume with this name has been created instead.
    persistentvolumeclaims/functions-pvc
    deploymentconfig "functions" updated

    $ oc expose service functions
    route "functions" exposed

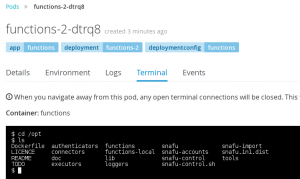

The last step, after either the GUI or the command-line setup, is to verify that Snafu runs correctly. This can be done by navigating to the pods overview, loading one of the pods associated with the application (before scaling there is just one), and using the pod’s terminal, which offers unprivileged shell access.

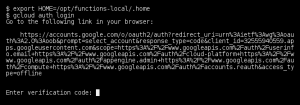

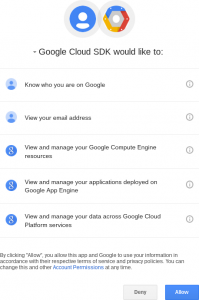

With this preparation, the function migration can begin. First, log into Google Cloud from the running environment. Notice how the enforced authentication uses OAuth, which works nicely in web browsers but is somewhat cumbersome in command-line environments. You can copy and paste the shown URL, but will have to type the generated Google token manually into the terminal. Notice further that there are no permissions specifically for Google Cloud Functions; instead, the local application needs to be granted access to a combination of other services hosted in Google Cloud. Finally, notice that the unprivileged user has neither a home directory nor any other user-related data, so a custom home directory needs to be set for the Google Cloud SDK to work.

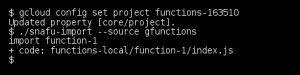

After the login, select the project which hosts your functions (via its ID, not the user-visible name) and then run the Snafu import tool, specifying Google Cloud as its source. The import works flawlessly and the JavaScript functions are placed into the right folder, which is backed by the volume container.

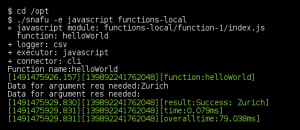
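If you would like to double-check the result of the import yourself, a small Node.js script can list which functions each imported file exports. The following is only a sketch: the helper `listExportedFunctions` is hypothetical and not part of Snafu, and it assumes that the imported files are CommonJS modules and that Node.js is available in the environment where you run it.

```javascript
const fs = require('fs');
const path = require('path');

// Hypothetical helper: maps every .js file in dir to the names of the
// functions it exports. Note that loading a file runs its top-level code.
function listExportedFunctions(dir) {
  const result = {};
  for (const entry of fs.readdirSync(dir)) {
    if (path.extname(entry) !== '.js') {
      continue; // skip anything that is not a JavaScript file
    }
    const loaded = require(path.resolve(dir, entry)) || {};
    result[entry] = Object.keys(loaded).filter(
      (name) => typeof loaded[name] === 'function'
    );
  }
  return result;
}
```

Calling `listExportedFunctions('/opt/functions-local')` inside the container should then return one entry per imported file, each listing the function names found in it.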

The running Snafu service contains an autodeployer which by now has already picked up the function. Still, the function can also be executed directly on the command line to verify that it works.

Overall, this process takes only a few minutes and leads to an environment which can be used to test or host the functions inside an already-paid-for container instance. As Snafu is a research prototype, anything beyond simple functions may not work, although the limitations will be gradually eliminated.
