Carlo Vallati was a visiting researcher during August and September 2015 (see here for a short note on his visit). In this post he outlines how cloud computing needs to evolve to meet future requirements.

Although cloud computing is increasingly used as an enabler for a wide range of applications, the next wave of technological evolution, namely the Internet of Things and robotics, will require the classical centralized cloud architecture to be extended into a more distributed one that includes computing and storage nodes installed close to users and physical systems. This shift is also driven by the need for greater flexibility: to cope with the huge increase in the number of devices (a distributed architecture scales better) and to address the privacy concerns that are growing among end users (edge computing limits the exposure of private data)[1].

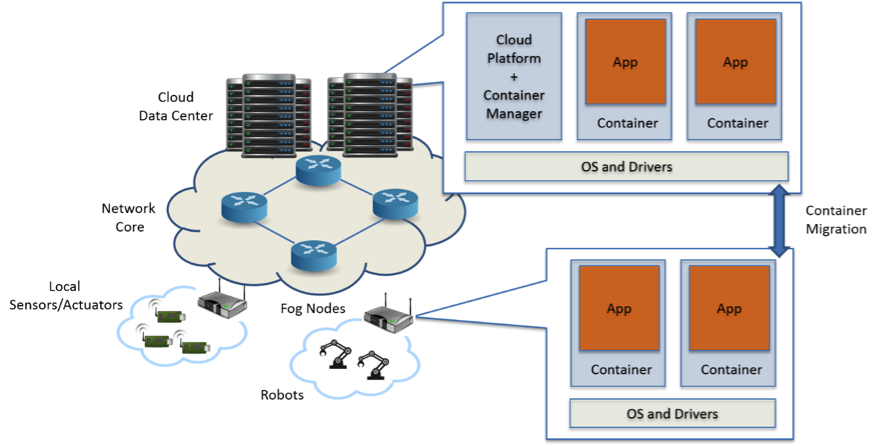

In this context, a Fog computing[2] layer, that is, an extension of the centralized cloud infrastructure installed close to physical systems or end users, is commonly recognized as the natural evolution of the cloud architecture for executing application logic at the edge[3]. This layer will be implemented through Fog nodes that provide modest computation and storage capabilities, supporting applications that require low-latency interactions with sensors, actuators, robots or end users.
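To make the latency argument concrete, here is a minimal sketch of a placement rule, assuming a hypothetical policy in which a component runs in the cloud whenever the cloud's round-trip time meets its latency budget, and on a Fog node only otherwise (and only if the node has enough free memory). The `Site` type, the `place` function and all RTT and memory figures are illustrative assumptions, not part of any existing Fog platform.

```python
from dataclasses import dataclass


@dataclass
class Site:
    """A hypothetical execution site (cloud data center or Fog node)."""
    name: str
    rtt_ms: float          # assumed round-trip time between the device and this site
    free_memory_mb: int    # memory currently available for new containers


def place(latency_budget_ms: float, memory_mb: int, fog: Site, cloud: Site) -> str:
    """Return the name of the site where a component should run.

    Hypothetical policy: prefer the cloud whenever it meets the latency budget,
    so that scarce Fog resources are reserved for latency-critical logic;
    otherwise fall back to the Fog node if it is fast enough and has room.
    """
    if cloud.rtt_ms <= latency_budget_ms:
        return cloud.name
    if fog.rtt_ms <= latency_budget_ms and fog.free_memory_mb >= memory_mb:
        return fog.name
    raise RuntimeError("no site satisfies the latency budget and memory demand")


if __name__ == "__main__":
    fog = Site("fog-router", rtt_ms=5.0, free_memory_mb=256)
    cloud = Site("cloud", rtt_ms=80.0, free_memory_mb=1_000_000)
    print(place(20.0, 128, fog, cloud))   # tight control loop -> fog-router
    print(place(500.0, 128, fog, cloud))  # batch analytics -> cloud
```

Preferring the cloud when it is fast enough is one possible design choice; it reflects the idea that Fog nodes are a scarce resource best kept for logic that genuinely needs low latency.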

Fog nodes will typically be embedded systems, often hardware that already exists in the field, such as industrial control boards or home routers. The limited memory, storage and computing power of these devices will be the main challenge in integrating them into the existing cloud architecture for executing application logic, because current cloud platforms are designed to run on powerful, centralized nodes and cannot support embedded systems.

Their integration will leverage containers as the virtualization technology for delivering and executing applications. Containers offer many advantages[4]: a lightweight, resource-friendly structure that suits the constrained nature of Fog nodes; process and resource isolation; and rapid, easy deployment. Ease of deployment in particular has driven the spread of containers in today's cloud platforms, especially PaaS platforms, making containerization the best strategy for large-scale distribution and efficient migration of applications[5]. Their lightweight structure makes containers the ideal virtualization technique for constrained devices deployed outside large data centers, for the following reasons:

- Their reduced memory and computing footprint (containers do not require a hypervisor but rely on functionality offered natively by the operating system) enables their adoption in devices with limited memory and computational capabilities;

- Their small size (containers are considerably smaller than virtual machines) ensures short transport and deployment times, enabling installation and migration of applications outside the high-speed core network of data centers.
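The transport-time argument comes down to simple arithmetic: transfer time is image size divided by link bandwidth. The sketch below uses purely illustrative sizes (a 50 MB container image versus a 2 GB virtual machine image) and an assumed 10 Mbit/s link to a device outside the data center; real image sizes and link speeds vary widely.

```python
def transfer_seconds(size_bytes: float, bandwidth_bps: float) -> float:
    """Time to move an image of `size_bytes` over a link of `bandwidth_bps` bits/s.

    Ignores protocol overhead, latency and compression (simplifying assumption).
    """
    return size_bytes * 8 / bandwidth_bps


MB = 10**6              # decimal megabyte
GB = 10**9              # decimal gigabyte
LINK_BPS = 10 * 10**6   # assumed 10 Mbit/s uplink to an edge device

container_s = transfer_seconds(50 * MB, LINK_BPS)  # illustrative container image
vm_s = transfer_seconds(2 * GB, LINK_BPS)          # illustrative VM image
print(f"container: {container_s:.0f} s, VM: {vm_s:.0f} s ({vm_s / 60:.1f} min)")
# container: 40 s, VM: 1600 s (26.7 min)
```

Under these assumptions the ratio of transfer times equals the ratio of image sizes, which is why image size matters so much once applications are moved over slower links outside the data center.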

Although container implementations such as Linux Containers, Solaris Zones and FreeBSD Jails can run natively on embedded systems, integrating them efficiently into existing cloud platforms requires additional features for delivering, managing and executing application logic that the cloud pushes dynamically whenever the application requires it. In this context, the nature of embedded systems and their deployment outside the core network of data centers will present specific challenges:

- Package portability across embedded computing architectures: embedded systems span a wide variety of hardware architectures. Because containers do not abstract system details from developers (a container shares the host kernel, so its binaries must be built for the host CPU architecture), solutions that strike the right balance between system abstraction and ease of portability will be required, e.g. tools that automatically build containers for different architectures.

- Efficient container management in constrained environments: embedded devices are characterized by limited memory (usually hundreds of MB of RAM), storage (usually a few GB) and computation (one or two cores). This scarcity calls for efficient resource management so that more than one container can run at a time, e.g. scheduling algorithms that give priority to containers running applications with real-time requirements.

- Support for frequent migration to and from the cloud: because of their scarce resources, embedded nodes will be able to run only a few containers concurrently. Containers will therefore have to migrate frequently between the cloud and embedded systems, so that each one runs at the edge only while its application logic requires low-latency interactions. Efficient support for frequent migration, e.g. through incremental transfer or reduced state information, will be crucial to let many different containers share the limited resources of each node.
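The scheduling and migration challenges above can be illustrated together with a minimal sketch, assuming a hypothetical Fog node that tracks only a memory budget and an integer priority per container (higher means more latency-critical). When a latency-critical container arrives and memory is short, the node evicts lower-priority containers, lowest priority first, and hands them back to the cloud; if evicting is not enough, the newcomer stays in the cloud and nothing is evicted. `FogNode`, `Container` and `admit` are illustrative names, not the API of any container runtime, and the sketch ignores CPU, storage and the migration transfer itself.

```python
from dataclasses import dataclass


@dataclass(eq=False)  # identity equality: two containers with equal fields stay distinct
class Container:
    """Hypothetical container descriptor."""
    name: str
    memory_mb: int
    priority: int  # assumed convention: higher value = more latency-critical


class FogNode:
    """Hypothetical Fog node that only accounts for a fixed memory budget."""

    def __init__(self, capacity_mb: int) -> None:
        self.capacity_mb = capacity_mb
        self.running: list[Container] = []

    def free_mb(self) -> int:
        return self.capacity_mb - sum(c.memory_mb for c in self.running)

    def admit(self, new: Container) -> tuple[bool, list[Container]]:
        """Try to run `new` locally.

        Returns (admitted, migrated_to_cloud). Lower-priority containers are
        evicted, lowest priority first, only if that frees enough memory;
        otherwise `new` stays in the cloud and nothing is evicted.
        """
        candidates = sorted(
            (c for c in self.running if c.priority < new.priority),
            key=lambda c: c.priority,
        )
        free = self.free_mb()
        to_evict: list[Container] = []
        for c in candidates:
            if free >= new.memory_mb:
                break
            to_evict.append(c)
            free += c.memory_mb
        if free < new.memory_mb:
            return False, []  # cannot fit even after evictions: keep `new` in the cloud
        for c in to_evict:
            self.running.remove(c)  # these would be migrated back to the cloud
        self.running.append(new)
        return True, to_evict


if __name__ == "__main__":
    node = FogNode(capacity_mb=512)
    node.admit(Container("analytics", 300, priority=1))
    node.admit(Container("logger", 150, priority=0))
    ok, moved = node.admit(Container("robot-control", 200, priority=5))
    print(ok, [c.name for c in moved])     # True ['logger']
    ok, moved = node.admit(Container("video", 400, priority=2))
    print(ok, [c.name for c in moved])     # False []
    print([c.name for c in node.running])  # ['analytics', 'robot-control']
```

Eviction here is all-or-nothing, so a failed admission never disturbs containers that are already running; a real scheduler would presumably also weigh CPU shares, migration cost and how long each container is expected to need the edge.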

Interest in Fog computing as a technology for the evolution of computing is growing rapidly, as demonstrated by the draft H2020 ICT Work Programme for 2016-17, which lists the integration of Fog computing with the cloud as a work item under the Cloud Computing topic (ICT-06-2016). Making Fog computing a reality is an exciting research challenge that can benefit application scenarios ranging from the future IoT to robotics. We look forward to tackling some of these challenges, exploiting a compelling virtualization technology such as containers!

[1] Luis M. Vaquero and Luis Rodero-Merino. 2014. Finding your Way in the Fog: Towards a Comprehensive Definition of Fog Computing. SIGCOMM Comput. Commun. Rev. 44, 5 (October 2014), 27-32.

[2] Flavio Bonomi, Rodolfo Milito, Jiang Zhu, and Sateesh Addepalli. 2012. Fog computing and its role in the internet of things. In Proceedings of the first edition of the MCC workshop on Mobile cloud computing (MCC ’12). ACM, New York, NY, USA, 13-16.

[3] S. Abdelwahab, B. Hamdaoui, M. Guizani, and A. Rayes. 2014. Enabling Smart Cloud Services Through Remote Sensing: An Internet of Everything Enabler. IEEE Internet of Things Journal 1, 3 (June 2014), 276-288.

[4] http://www.oracle.com/us/technologies/linux/lxc-features-1405324.pdf

[5] http://www.cisco.com/c/dam/en/us/solutions/collateral/data-center-virtualization/openstack-at-cisco/linux-containers-white-paper-cisco-red-hat.pdf