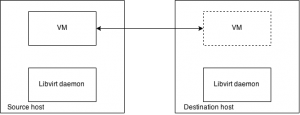

In our previous blog posts we mostly focused on virtual machine live migration performance, comparing pre-copy, post-copy and hybrid approaches in an Openstack context, rather than exploring other live migration features. Libvirt together with the Qemu hypervisor provides many migration configuration options, one of which is tunneled live migration. Recently we found that libvirt's current tunneling implementation does not support post-copy migration. Consequently, in order to make the post-copy patch more production ready, we decided to contribute to the community by adding support for tunneled post-copy live migration to libvirt ourselves. This blog post tells the whole story of immersing ourselves in the open source community and hacking on an established open source project, since we believe this experience can be generalized.

Since live migration is essentially the transfer of a virtual machine's complete RAM, the data stream can contain sensitive and confidential user information; it is therefore necessary to separate this traffic from other guest network traffic. In many Openstack environments, physical network separation or VLAN tagging is used to isolate different traffic types. Where traffic separation is not possible, libvirt's tunneling feature can be used instead. However, since tunneling adds extra libvirtd-to-Qemu and Qemu-to-libvirtd data handling on both the source and the destination, it may perform worse than native live migration. On the other hand, the libvirt RPC protocol used for tunneled live migration provides, among other things, data encryption. Tunneling also removes the need for any hypervisor-specific network and firewall configuration, since only one port per host is used no matter how many migrations are running in parallel. In contrast, with native, direct Qemu-to-Qemu migration, Qemu opens a new connection on a new port for each migration. So there are some good reasons to prefer the tunneled approach over the native one.

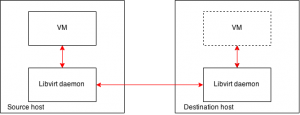

To enable tunneled live migration in an Openstack context, just add the VIR_MIGRATE_TUNNELLED flag to the live_migration_flags in your nova.conf file and restart the nova-compute service; in most cases you are then all set. Unfortunately, this does not work if you want to use tunneling for post-copy live migration. The reason is simple: the post-copy algorithm by its very nature requires bi-directional communication between the source and the destination, while libvirt currently supports only unidirectional tunnels (which are sufficient for pre-copy live migration). The channel from the source to the destination is used to transfer the VM's memory pages. The opposite direction, from the destination to the source, is used by the post-copy algorithm to request particular missing memory pages: in post-copy the VM is already running on the destination before all of its memory has been copied, so whenever the guest touches a page that has not arrived yet, the destination has to ask the source for it.
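To make the need for a return path concrete, here is a minimal C sketch under a toy model of guest memory, not Qemu's actual post-copy protocol. The destination keeps a per-page "present" map; when the guest touches a page that has not arrived yet, it sends the page number back to the source, which answers with the page contents. All names (`dest_access_page`, `source_serve_request`, the 16-byte `PAGE_SIZE`) are hypothetical. A single `socketpair()` stands in for the migration connection, and the "remote" source is driven inline so the example runs in one process.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>
#include <sys/socket.h>
#include <unistd.h>

/* Toy sizes chosen only for illustration. */
#define PAGE_SIZE 16
#define NUM_PAGES 4

static char src_mem[NUM_PAGES][PAGE_SIZE]; /* guest RAM still held by the source */
static char dst_mem[NUM_PAGES][PAGE_SIZE]; /* guest RAM on the destination       */
static int  dst_present[NUM_PAGES];        /* 1 once a page has arrived          */

/* Transfer exactly n bytes; stream sockets may split or merge writes. */
static int read_full(int fd, void *buf, size_t n)
{
    char *p = buf;
    while (n > 0) {
        ssize_t r = read(fd, p, n);
        if (r <= 0)
            return -1;
        p += r;
        n -= (size_t)r;
    }
    return 0;
}

static int write_full(int fd, const void *buf, size_t n)
{
    const char *p = buf;
    while (n > 0) {
        ssize_t w = write(fd, p, n);
        if (w <= 0)
            return -1;
        p += w;
        n -= (size_t)w;
    }
    return 0;
}

/* Source: read one page request from the return path (dst -> src) and
 * answer it on the forward path (src -> dst) with page number + contents. */
static int source_serve_request(int src_fd)
{
    uint32_t page;
    if (read_full(src_fd, &page, sizeof page) < 0 || page >= NUM_PAGES)
        return -1;
    if (write_full(src_fd, &page, sizeof page) < 0)
        return -1;
    return write_full(src_fd, src_mem[page], PAGE_SIZE);
}

/* Destination: the running guest touches `page`. If it has not arrived yet,
 * request it from the source and wait for the reply. To keep the sketch in a
 * single process, the "remote" source is driven inline. */
static const char *dest_access_page(int dst_fd, int src_fd, uint32_t page)
{
    uint32_t got;
    if (page >= NUM_PAGES)
        return NULL;
    if (dst_present[page])
        return dst_mem[page];                        /* already local: no traffic */
    if (write_full(dst_fd, &page, sizeof page) < 0)  /* request: dst -> src */
        return NULL;
    if (source_serve_request(src_fd) < 0)            /* source handles it   */
        return NULL;
    if (read_full(dst_fd, &got, sizeof got) < 0 || got != page)
        return NULL;
    if (read_full(dst_fd, dst_mem[page], PAGE_SIZE) < 0) /* page: src -> dst */
        return NULL;
    dst_present[page] = 1;
    return dst_mem[page];
}
```

In real post-copy the source also keeps pushing the remaining pages in the background; the sketch shows only the on-demand request path, which is exactly the traffic a one-way tunnel cannot carry.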

Unfortunately, libvirt’s post-copy patch currently does not include code for bi-directional tunneling, which leaves two options: a) don’t use tunneled post-copy live migration, or b) dive into libvirt’s code and implement the feature yourself.

Because we really like working with post-copy live migration and would love to see it finally land upstream, we chose option b): implement bi-directional tunneling in libvirt. Sounds simple, right? Not quite!

The libvirt migration tunnel (the connection between the source and destination libvirt daemons) is represented by the ‘virStream’ object from the libvirt-stream module. It provides functionality to handle standard stream events such as “stream readable” and “stream writable” on both sides of the tunnel. These streams support general-purpose data transfers (not only in the live migration context) and consequently have a number of possible configurations. To support bi-directional communication, we had to modify the migration-related streams to operate in non-blocking mode using the ‘VIR_STREAM_NONBLOCK’ flag.
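Why non-blocking mode matters: with traffic flowing in both directions, a single event loop must never park in a read or write for one direction while the other direction has work waiting. The idea can be sketched on a plain POSIX file descriptor (we do not model `virStream` itself here): once `O_NONBLOCK` is set, a read with no pending data fails immediately with `EAGAIN` instead of blocking. The helper names are hypothetical, and the `-2` return value merely mirrors the convention that a non-blocking `virStreamRecv()` uses when no data is pending.

```c
#include <errno.h>
#include <fcntl.h>
#include <sys/socket.h>
#include <sys/types.h>
#include <unistd.h>

/* Put an fd into non-blocking mode, keeping its other status flags. */
static int set_nonblocking(int fd)
{
    int flags = fcntl(fd, F_GETFL, 0);
    if (flags < 0)
        return -1;
    return fcntl(fd, F_SETFL, flags | O_NONBLOCK);
}

/* Read without ever blocking.
 * Returns the number of bytes read, 0 on EOF, -1 on a real error, and -2 when
 * no data is pending yet, meaning "try again on the next readable event".
 * The -2 value only mirrors non-blocking virStreamRecv(); here it is our own
 * convention. */
static ssize_t try_read(int fd, void *buf, size_t len)
{
    ssize_t n = read(fd, buf, len);
    if (n < 0 && (errno == EAGAIN || errno == EWOULDBLOCK))
        return -2;
    return n;
}
```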

Once the stream is able to both send and receive data, the corresponding event handlers that move data between the libvirt daemon and Qemu need to be modified as well. The unidirectional implementation uses Unix pipes to transfer migration traffic between Qemu and the libvirt daemon, since they are a simple and reliable tool for interprocess communication. To support bi-directional communication, we replaced the unidirectional pipes with bi-directional socketpairs. The libvirt-event module makes it possible to handle events occurring on these FDs in a similar fashion to the stream events described above.
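The difference between the two IPC primitives fits in a few lines of C. This is an illustrative sketch, not libvirt's code: `make_migration_channel` and `roundtrip` are hypothetical helpers. On Linux, `pipe()` yields a read-only and a write-only descriptor, whereas `socketpair()` yields two connected endpoints that can each send and receive.

```c
#include <string.h>
#include <sys/socket.h>
#include <sys/types.h>
#include <unistd.h>

/* Create the channel between libvirtd and Qemu.
 * On Linux, pipe(fds) gives a read-only fds[0] and a write-only fds[1]: enough
 * for pre-copy (Qemu -> libvirtd) but not for the post-copy return path.
 * socketpair() returns two connected endpoints that can each read and write. */
static int make_migration_channel(int fds[2])
{
    return socketpair(AF_UNIX, SOCK_STREAM, 0, fds);
}

/* Send a short message from one endpoint and read it at the other.
 * Returns 1 if it arrived intact. A single read() suffices for short messages
 * on a local socket in this demo; production code would loop. */
static int roundtrip(int from, int to, const char *msg)
{
    char buf[64];
    size_t len = strlen(msg);
    if (len >= sizeof buf || write(from, msg, len) != (ssize_t)len)
        return 0;
    ssize_t n = read(to, buf, sizeof buf - 1);
    if (n != (ssize_t)len)
        return 0;
    buf[n] = '\0';
    return strcmp(buf, msg) == 0;
}
```

Calling `roundtrip(fds[0], fds[1], ...)` and then `roundtrip(fds[1], fds[0], ...)` on the same socketpair succeeds in both directions, which is exactly what the post-copy page requests need.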

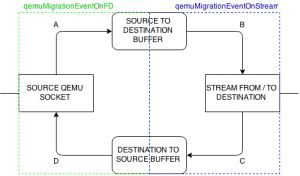

Although the basic building blocks for bi-directional communication are now in place, the logic on top of them still has to be added. The pre-copy implementation in the ‘qemuMigrationIOFunc’ function waits for data from the source Qemu and repeatedly passes it to the migration stream until the migration process has completely finished. Post-copy additionally needs to read data from the migration stream and send it back to the source Qemu. To do so, we replaced the original function with two callback functions (‘qemuMigrationEventOnFD’ and ‘qemuMigrationEventOnStream’) that handle the standard data events in both directions. To avoid blocking, we also added two data buffers, shown in Fig. 3. The implemented bi-directional communication mechanism can be summed up as follows:

- ‘qemuMigrationEventOnFD’ handles events on the Qemu FD (socket):
    - A) If the FD is readable, read the data and write it to the “Source To Destination” buffer.
    - D) If the FD is writable and the “Destination To Source” buffer contains data, write that data to the FD.
- ‘qemuMigrationEventOnStream’ handles events on the stream:
    - B) If the stream is writable and the “Source To Destination” buffer contains data, write that data to the stream.
    - C) If the stream is readable, read the data and write it to the “Destination To Source” buffer.
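The four steps A to D can be sketched as a small relay loop in C. This is an illustration under simplifying assumptions, not the libvirt patch itself: a second `socketpair()` stands in for the `virStream`, `poll()` replaces libvirt's event loop, and `struct relay`, `buf_fill`, `buf_drain`, `on_fd`, `on_stream` and `relay_step` are hypothetical names (the two handlers are merely modeled on ‘qemuMigrationEventOnFD’ and ‘qemuMigrationEventOnStream’). EOF and error handling are omitted for brevity.

```c
#include <fcntl.h>
#include <poll.h>
#include <string.h>
#include <sys/socket.h>
#include <sys/types.h>
#include <unistd.h>

#define BUF_CAP 4096

/* FIFO byte buffer sitting between two endpoints. */
struct relay_buf {
    char data[BUF_CAP];
    size_t len;
};

struct relay {
    int qemu_fd;                 /* our end of the socketpair to Qemu    */
    int stream_fd;               /* stand-in for the migration virStream */
    struct relay_buf src_to_dst; /* "Source To Destination" buffer       */
    struct relay_buf dst_to_src; /* "Destination To Source" buffer       */
};

/* Read whatever is available from fd into the buffer's free space. */
static void buf_fill(struct relay_buf *b, int fd)
{
    if (b->len == BUF_CAP)
        return;                                  /* full: wait for a drain */
    ssize_t n = read(fd, b->data + b->len, BUF_CAP - b->len);
    if (n > 0)
        b->len += (size_t)n;                     /* EAGAIN/EOF/errors ignored */
}

/* Write as much buffered data as fd accepts and drop what was sent. */
static void buf_drain(struct relay_buf *b, int fd)
{
    if (b->len == 0)
        return;
    ssize_t n = write(fd, b->data, b->len);
    if (n > 0) {
        memmove(b->data, b->data + n, b->len - (size_t)n);
        b->len -= (size_t)n;
    }
}

/* Modeled on qemuMigrationEventOnFD: steps A and D. */
static void on_fd(struct relay *r, short revents)
{
    if (revents & POLLIN)
        buf_fill(&r->src_to_dst, r->qemu_fd);    /* A */
    if (revents & POLLOUT)
        buf_drain(&r->dst_to_src, r->qemu_fd);   /* D */
}

/* Modeled on qemuMigrationEventOnStream: steps B and C. */
static void on_stream(struct relay *r, short revents)
{
    if (revents & POLLOUT)
        buf_drain(&r->src_to_dst, r->stream_fd); /* B */
    if (revents & POLLIN)
        buf_fill(&r->dst_to_src, r->stream_fd);  /* C */
}

static int set_nonblock(int fd)
{
    int fl = fcntl(fd, F_GETFL, 0);
    return fl < 0 ? -1 : fcntl(fd, F_SETFL, fl | O_NONBLOCK);
}

static int relay_init(struct relay *r, int qemu_fd, int stream_fd)
{
    memset(r, 0, sizeof *r);
    r->qemu_fd = qemu_fd;
    r->stream_fd = stream_fd;
    return (set_nonblock(qemu_fd) < 0 || set_nonblock(stream_fd) < 0) ? -1 : 0;
}

/* One event-loop iteration: watch for readability only while a buffer has
 * room, and for writability only while it holds data. */
static int relay_step(struct relay *r, int timeout_ms)
{
    struct pollfd p[2] = { { .fd = r->qemu_fd }, { .fd = r->stream_fd } };
    if (r->src_to_dst.len < BUF_CAP) p[0].events |= POLLIN;
    if (r->dst_to_src.len > 0)       p[0].events |= POLLOUT;
    if (r->dst_to_src.len < BUF_CAP) p[1].events |= POLLIN;
    if (r->src_to_dst.len > 0)       p[1].events |= POLLOUT;
    int n = poll(p, 2, timeout_ms);
    if (n > 0) {
        on_fd(r, p[0].revents);
        on_stream(r, p[1].revents);
    }
    return n;
}
```

Registering interest in writability only while a buffer holds data keeps an always-writable socket from waking the loop continuously, and skipping reads into a full buffer gives natural back-pressure until the other side drains it.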

That is essentially all that had to change in libvirt to enable tunneled post-copy live migration. In retrospect, the modifications did not require much coding effort; the biggest challenge was understanding and modifying part of a very complex and relatively large project. Also, due to the nature of the problem, it was difficult to debug and diagnose issues. There is still a long way to go before this modification can become part of libvirt itself, as it only makes sense in the context of post-copy live migration. However, we believe it can help push post-copy live migration closer to production, which would ultimately make live migration much more robust.

Note that this modification is very much based on the post-copy live migration work of Cristian Klein; we are currently looking at how the code can be made publicly available. I would also like to thank everyone who helped develop this modification, particularly the guys at Umea University, as well as everyone whose valuable comments helped push this work forward.

**UPDATE 7.5.2015**

The source code is now available as a fork of libvirt’s wp3-postcopy branch in our GitHub repository. Any comments are welcome!