What better way to kick-start the 2018 blog post series than with SDN topics? 🙂 To keep up the tradition of regular blog posts dedicated to the SDN workshop, we have prepared a thorough summary of the talks and demonstrations featured at the 9th workshop, held on the 4th of December 2017 on the premises of IBM Research, Zurich, and organized by the ICCLab and SWITCH.

We continued hosting talks on 5G and NFV technology, which have been among the hot telco topics of the past two years. Eryk Schiller from the University of Bern spoke about “NFV/SDN-based application management for MEC in 5G Systems”. This work was initiated in the FLEX project they are involved in, and its main objective was to create CDS-MEC for traffic management and control at the IP/MAC level. A demonstration showed packet redirection from the MEC via several VNFs. This is achieved with a custom modification at the OVS level that adds support for GTP header decapsulation. The OVS GTP matcher intercepts the traffic before the packets are sent to the application. Traffic management can happen at different levels depending on the use case, and when OpenStack is used, the OpenStack OVS is managed internally. The controller they use is an OVS-based controller using OpenFlow, while OpenAirInterface (OAI) is used for the Evolved Packet Core (EPC). The MEC cloud implementation resides in the EPC in order to segregate different IP traffic flows, since this cannot be managed at the eNodeB level. They support a disaster recovery use case by initiating micro-core VNFs. This builds on the ICN and DTN paradigms to improve operation in disaster situations through better matching of the traffic. Within 10 minutes, a fully operational micro core is established. The data is then exchanged with the DTN application to help send and receive traffic between the users, achieving a maximum throughput of 15 Mbps.
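To make the GTP decapsulation step more concrete, here is a minimal Python sketch of what a GTP-U matcher has to do before the inner IP packet can be matched and redirected. It is not the OVS modification from the talk; it only follows the standard GTP-U header layout (flags, message type, length, TEID, plus four optional bytes when any of the E/S/PN flags is set). The function names are our own, and chains of extension headers are deliberately not handled.

```python
import socket
import struct

GTPU_GPDU = 0xFF  # GTP-U message type carrying an encapsulated user packet


def gtpu_decapsulate(payload):
    """Return (teid, inner_packet) for a GTP-U G-PDU, or None otherwise.

    Simplification (assumption of this sketch): packets that carry
    extension headers are rejected instead of being walked.
    """
    if len(payload) < 8:
        return None
    flags, msg_type, length, teid = struct.unpack("!BBHI", payload[:8])
    # Version must be 1, protocol type must be GTP, message must be a G-PDU.
    if flags >> 5 != 1 or not flags & 0x10 or msg_type != GTPU_GPDU:
        return None
    end = 8 + length  # the length field counts everything after the first 8 bytes
    if len(payload) < end:
        return None
    start = 8
    if flags & 0x07:  # E, S or PN flag set: 4 optional bytes follow the TEID
        start += 4
        if start > end:
            return None
        if flags & 0x04 and payload[11] != 0:
            return None  # extension header chain present, not handled here
    return teid, payload[start:end]


def inner_ipv4_dst(inner_packet):
    """Destination address of the decapsulated IPv4 packet as a string."""
    return socket.inet_ntoa(inner_packet[16:20])
```

Once the inner destination address is available, a redirection rule can decide whether the packet should go to a local MEC application or continue towards the core.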

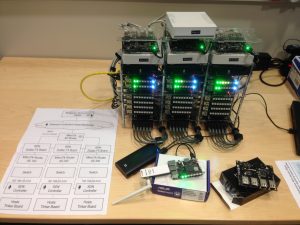

Eryk, together with Bruno Rodrigues from the University of Zurich, brought the first hardware-based demonstrations to our workshop. During the breaks we could see traffic redirection in the local “cloudified” LTE core with a MEC platform (MEC Cloud & ENB). Bruno showed their Blockchain Signaling System (BloSS) for DDoS defense. The motivation for this implementation is the increasing number of simple but massive DDoS attacks, for example those caused by a large number of machines sending traffic (e.g. the Dyn DNS attack). One mechanism to fight these attacks is described in the IETF working draft on DDoS Open Threat Signaling (DOTS), which is currently in the process of standardization. The idea in DOTS is for companies to cooperate by sharing traffic knowledge in order to detect and prevent malicious traffic. Incentives, trust, regulatory laws, etc. are some of the challenges of this approach that motivated the blockchain system implementation. The blockchain is based on trusted collaborative domains and uses SDN to detect black-holing, and to combine the system with other known DDoS defense systems. Detection is based on thresholds, such as the minimum number of addresses required to accept or request blocking. IPFS is used for grouping the data in blocks and for data exchange. In the demo that Bruno showed, they generated a traffic overload, and it took up to 12 seconds to detect the malicious source. In this process, a hash is generated that points to the IPFS list of addresses to be blocked, and this information is then submitted to the blockchain. They opted for a consortium-based blockchain instead of a public blockchain like the one Bitcoin uses because of the faster reaction time (Bitcoin takes 10-15 min to mine a block and is thus unsuitable for this use case).
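The signaling flow described above (collect suspicious addresses, check a threshold, publish a hash that points to the list) can be sketched in a few lines of Python. This is only an illustration and not the BloSS code: the per-source packet threshold is our own assumption for the detection step, and a plain SHA-256 digest stands in for the IPFS content identifier that would be written to the blockchain.

```python
import hashlib
import json
from collections import Counter


def find_suspects(packet_sources, per_source_threshold):
    """Addresses seen at least `per_source_threshold` times (assumed heuristic)."""
    counts = Counter(packet_sources)
    return sorted(src for src, seen in counts.items() if seen >= per_source_threshold)


def should_request_blocking(suspects, min_addresses):
    """Only signal when the list reaches the minimum number of addresses."""
    return len(suspects) >= min_addresses


def blocklist_digest(addresses):
    """Order-independent digest of the list; a stand-in for the IPFS hash."""
    blob = json.dumps(sorted(addresses)).encode("utf-8")
    return hashlib.sha256(blob).hexdigest()


# Hypothetical traffic sample: two sources clearly dominate.
sources = ["10.0.0.1"] * 5 + ["10.0.0.2"] * 2 + ["10.0.0.3"] * 6
suspects = find_suspects(sources, per_source_threshold=5)
if should_request_blocking(suspects, min_addresses=2):
    record = {"blocklist_hash": blocklist_digest(suspects)}  # what would go on-chain
```

Only the short digest has to be stored on the chain, while the potentially long address list lives in the off-chain store.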

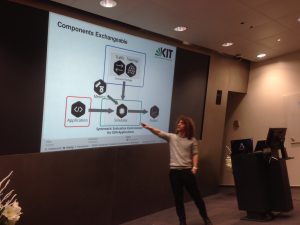

Michael Koenig from Karlsruhe Institute of Technology presented the SEED environment for evaluating SDN applications. Their main focus was to understand how current SDN applications are evaluated and to find a way to optimize this process. The main motivation was the lack of comparability among the increasing number of SDN implementations. To this end, they analyzed 60 research papers that use the Stanford campus network, but they were unable to compare or reproduce the different studies. Comparing the applications is nontrivial because they use different simulators, topologies, metrics, SDN controllers, etc. The SEED method decouples the components and lets them communicate through standard interfaces or applications. The application is connected to their simulator via the OpenFlow protocol. The metrics can be selected from the simulator, while the traffic, the topology and the selection of the output logs are currently work in progress. This approach decouples the application, the evaluation and the scenario, and uses a single configuration file that contains the description of the evaluation setup. They provide a frontend as a unified starting point that preprocesses the configs and initializes the experiment. Bundles are scenarios pre-composed of topology and traffic that combine the decoupled components for a more accurate and faster setup. As a next step, it will be possible to build a collection of reusable bundles, for example an IXP scenario, a DC scenario and small prototypical apps. This will provide a uniform evaluation ground and give a better idea of the requirements. Overall, this is a great approach to optimizing the common evaluation steps using pre-cooked scenario bundles with built-in topology and traffic simulators. SEED promises an open source, systematic SDN evaluation tool with a unified GUI and CLI, which would be a very useful contribution to the research community.

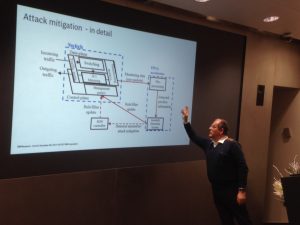

Rolf Clauberg from IBM Research GmbH focused his talk on real-time automatic DDoS mitigation for SDN-based data centers. This work comes from the Horizon 2020 project for 100 Gbps IXPs. According to Akamai’s report, 99.9% of the attacks are infrastructure layer attacks. They address this problem by using C-GEP, an FPGA-based accelerator for 100 Gbps traffic, and Intel Red Rock Canyon switches for 1:1 or N:1 port mirroring, truncating the packets so that only the header is analyzed. Multiple algorithms run in parallel to analyze the data and detect intrusions. Specifically, 16 algorithms contribute to a weighted probability that confirms an attack, which is followed by filtering rules for black-holing and local redirect. In the experiments, the detection and mitigation rules are sent back to the switch. In the real implementation, an SDN controller is used to distribute the rules, which also includes monitoring as well as real-time, high-speed data collection on the data plane. The tests were run on real-life data from the Hungarian network of scientific institutes, with results showing a reaction time of 0.4-2 ms and no impact on the production traffic. The decision is based on the utilization of the mirror port (using N:1 mirroring), while the mixing of traffic with and without an attack is done in the switch. It was shown that 9 of the main DDoS attacks detected by Akamai can be mitigated with their solution. In their follow-up project, they rely on network anomaly detection instead of fixed detection rules in order to make the detection automatic. This is done for the infrastructure, but not yet for the applications.
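The idea of combining several detectors into one weighted probability can be illustrated with a short Python sketch. The talk did not go into the actual algorithms or weights, so the scores, weights and decision threshold below are purely hypothetical; the sketch only shows the combination step, a weighted average compared against a threshold.

```python
def weighted_attack_probability(scores, weights):
    """Weighted average of per-detector scores, each expected in [0, 1]."""
    if len(scores) != len(weights) or not scores:
        raise ValueError("need one weight per detector score")
    total_weight = sum(weights)
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive value")
    return sum(s * w for s, w in zip(scores, weights)) / total_weight


def confirm_attack(scores, weights, threshold):
    """Confirm an attack once the combined probability reaches the threshold."""
    return weighted_attack_probability(scores, weights) >= threshold


# Hypothetical example with two detectors: the second one is trusted three
# times as much as the first and is certain that it sees an attack.
combined = weighted_attack_probability([0.0, 1.0], [1.0, 3.0])
```

A confirmed attack would then trigger the filtering rules (black-holing or local redirect) mentioned above.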

Rüdiger Birkner presented joint work between ETH Zurich and Princeton University in the SDX domain. This solution combines SDN with inter-domain traffic routing at IXPs in order to achieve more efficient traffic delivery. The idea is inspired by the limitations of BGP as the sole protocol for managing an IXP and by the advantages that SDN brings as a complement. The question that arises is how to deploy SDN in a network with thousands of subnets. Since this is infeasible, the way to go is to deploy SDN only in critical locations. In the IXP domain, BGP routes are exchanged between the route server and the participants (no need for a full mesh). Their solution replaces the traditional L2 fabric and uses an SDN controller as the route server in order to augment the data plane with SDN capabilities. This introduces so-called SDX policies, which consist of a pattern match and an action. Each participant can inject policies into the switch, which are retrieved by the SDN controller to create the forwarding rules. Before installing a policy, the controller augments it with local BGP information to preserve correctness and prevent black-holing. Such an approach, however, faces scalability issues because the number of augmented policies grows very large. To address this, they introduced the idea of setting tags that indicate the next hops for the BGP edge router. Bit-wise matching is later applied to parts of the tag once it reaches the SDX fabric. The SDX policy is augmented to also include the tag in the match field, which successfully reduces the number of policies from 68M to 65K. When multiple SDXs around the world are taken into consideration, forwarding loops can occur due to the concatenation of multiple policies, and an SDX state exchange is necessary to avoid them. But here we run into the problem that SDXs compete with each other and are therefore reluctant to exchange policies.
Safe Inter-domain Deflection based Routing (SIDR) addresses this by finding a tradeoff between the amount of shared information and the number of false positives in order to achieve global correctness. Information is exchanged with the directly connected SDXs, and a path is safe when there is no SDX-crossing. It has been measured that SIDR can activate 91% of the safe policies and that it takes on the order of milliseconds to compute the new rules. The states are synced in the same way as in BGP, that is, they propagate over time. In terms of external cooperation, this work attracted the interest of 2 ISPs that offered hardware capabilities that are unsupported by their current implementation. They don’t focus on assisted communication between SDXs, in order to avoid the problem of cooperation. In the future, they are considering using P4 for data-plane measurements.
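To illustrate why tags help, here is a small Python sketch of the bit-wise matching idea. The tag layout is our own assumption for illustration (one bit per participant that advertises a route for the destination), and the participant names are hypothetical. The point is that a single policy rule with a one-bit check replaces one augmented rule per reachable prefix.

```python
# Hypothetical IXP participants; each one gets its own bit position in the tag.
PARTICIPANTS = ["A", "B", "C", "D"]
BIT = {name: 1 << index for index, name in enumerate(PARTICIPANTS)}


def make_tag(advertisers):
    """Encode the participants that advertise a route for a prefix as a bitmask."""
    tag = 0
    for name in advertisers:
        tag |= BIT[name]
    return tag


def next_hop(tag, policy_next_hop, bgp_best):
    """Apply the SDX policy only if its next hop can actually reach the prefix.

    Falling back to the BGP best path keeps forwarding correct and avoids
    black-holing traffic at a participant without a route.
    """
    if tag & BIT[policy_next_hop]:
        return policy_next_hop
    return bgp_best


# A prefix advertised by A and C; the BGP best path goes via A.
tag = make_tag(["A", "C"])
```

With this tag, a policy that prefers C is applied, while a policy that prefers B falls back to the BGP best path via A.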

Patrick Mosimann from Cisco gave a talk on Software Defined Access (SDA). This approach is based on the concept of an intent-based network infrastructure built into Cisco’s Digital Network Architecture (DNA). The orchestrator in the DNA Center pushes identity-based policies into the network, related to, for instance, Quality of Service (QoS). If the application requires more bandwidth, the policy is updated, and afterwards the context information from the network is fed back to the application. This approach uses VxLAN for overlay network isolation and tag-based group information. As a result, customers don’t need to change policies, because the policies are not bound to IP addresses. There is also the option of stretched subnets with IP ranges offered for certain customer needs. The L3 underlay avoids spanning tree, and equal-cost multipath provides redundancy. LISP is used as the control-plane mechanism: it acts like a DNS for routing and makes it possible to register each device in a database and look it up. Besides the segmentation and access control, which allow for automated end-to-end segregation of user, device and application traffic, SDA also provides a monitoring capability. The information from the network and the telemetry streaming is based on NETCONF, and machine learning is used for de-duplication and crowdsourcing. Based on the feedback, customers can share specific problems of their network in a feedback loop to help other customers. Patrick gave a short demo involving Lufthansa and Swiss airport management. First he pushed info from the Lufthansa virtual network to the intent-based network, and after that the DNA (a large collector of information) pushed the info in the form of a policy to the rest of the devices. Then he showed a policy that denies an IP camera a connection to the ventilation system.
The policy is defined with a name and the source and destination addresses of the camera and of the ventilation system, all of which represent the group information, and then the policy is pushed into the network. The last step focused on a user who is connected to the network and the tools they use to get the health score of the network. It showed the issue inputs from three different companies and the actions suggested via crowdsourcing. Northbound APIs are offered by the DNA Center, and the network components also offer APIs individually via NETCONF/YANG. In answer to some of the questions: the policy is edited in the DNA Center as a CLI-readable configuration, the intermediate routers are vendor-independent, and privacy concerns are addressed in the Active Directory.
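The benefit of binding policies to groups instead of IP addresses can be shown with a small Python sketch. It is not Cisco’s data model; the class, group names, addresses and the default action are all hypothetical and only mirror the camera and ventilation example from the demo.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GroupPolicy:
    name: str
    src_group: str
    dst_group: str
    action: str  # "permit" or "deny"


def decide(policies, endpoint_groups, src_ip, dst_ip, default="permit"):
    """Look up the groups of both endpoints and return the matching action.

    The default action for unmatched traffic is an assumption of this sketch.
    """
    src_group = endpoint_groups.get(src_ip)
    dst_group = endpoint_groups.get(dst_ip)
    for policy in policies:
        if policy.src_group == src_group and policy.dst_group == dst_group:
            return policy.action
    return default


policies = [GroupPolicy("camera-to-hvac", "ip-cameras", "ventilation", "deny")]
endpoint_groups = {"10.1.0.5": "ip-cameras", "10.2.0.9": "ventilation"}

before_move = decide(policies, endpoint_groups, "10.1.0.5", "10.2.0.9")

# The camera moves and gets a new address: only the group mapping changes,
# the policy itself stays untouched.
del endpoint_groups["10.1.0.5"]
endpoint_groups["10.7.3.20"] = "ip-cameras"
after_move = decide(policies, endpoint_groups, "10.7.3.20", "10.2.0.9")
```

Both lookups give the same result for the camera, which is what makes the policy independent of IP addressing.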

The last two presenters came from the GÉANT project to present the main concept and the advances made over the project duration, as well as the various tools offered to researchers and network administrators. Susanne Naegele-Jackson presented the new GTS service from GÉANT that allows automatic provisioning of infrastructures. GTS is a tool that provides environments for carrying out network experiments with great flexibility, and it is used for rapid prototyping and innovation. It is based on the principles of the ETSI-NFV community, but does not fully conform to them, as it does not implement the defined interfaces. GTS operates via agencies that are in charge of providing a specific infrastructure setup upon request. The topology requirements are defined in a description (DSL) document specifying the required setup and network resources (virtual machines, virtual links, Virtual Switch Instances and Bare Metal Servers). Persistent storage can be provisioned as well. To make the hardware available and virtualized, the agencies talk to infrastructure agents to arrange the provisioning of the resources. Currently GTS offers 57 servers, 10 Gbps links, OpenFlow switches, bare-metal servers and default VMs. The OpenFlow hardware allocates OVS instances to each user, and OpenStack is used as the virtualization manager. Their future work will include providing automation, high-performance storage and resilience.

Finally, Jovana Vuleta from the University of Belgrade followed up with a demonstration of the SDN pilots in GÉANT: SDX (Software Defined internet eXchange point) and SDN-BoD (Bandwidth on Demand). Jovana first introduced some general figures on the GÉANT project. For instance, it currently connects 41 research and education networks, interconnected through 26 Points of Presence and 2 Open eXchange Points extended worldwide. Some of the services include connectivity of R&D in an isolated high-speed network, cloud, testbed (GTS), security, network management and monitoring, etc. In particular, the projects related to SDN include GÉANT SDX (Software-Defined Internet Exchange), SDN BoD (an SDN-based Bandwidth on Demand service with advanced path computation capabilities) and Transport SDN (Optical), which brings OpenFlow to the optical layer. The first one is based on L2/L3 services and uses ONOS as the SDN controller with a direct upstream contribution (the DynPaC application). The pilot infrastructure involved an overlay network built with CORSA DP2100 switches, deployed at 5 locations using the Virtual Switch Context (VSC) methodology. The VSCs are OpenFlow-enabled, and a dedicated L3VPN is used for connectivity with the controller. Some of the future steps are to analyze and address the SDX-L2 limitations and the poor SDX-L3 scalability. The SDN-BoD pilot is to be released in the near future.

Once again, we are happy that you joined us or presented, and we hope to see you soon at the 10th anniversary edition of our workshop, together with our Swiss SDN community.