In our previous blog post we explained how networking works in Rancher in a Cattle environment. There we also mentioned that we have been working on enabling Rancher to operate on heterogeneous compute infrastructures, for example a mixed environment composed of ARM-based edge devices connected to VMs running in the cloud. In this blog post we go into more detail on how we built the rancher-agent service for aarch64 ARM-based devices.

Before we begin, here is the hardware and software we used during this work:

- Raspberry Pi 3 Model B

- openSUSE Leap 42.2 aarch64

- Docker v1.10.3

Rancher Labs had already done some work on supporting multi-arch hosts, most of it on enabling rancher-agent to work on ARM-based devices, but as the Rancher platform evolved this was deprioritized. Back then, most of the rancher-agent scheduling and networking services running on the host were consolidated into a single container (agent-instance), and this was ported to ARM-based devices as described in this blog post. From rancher-agent version v1.1.0, these microservices were split into separate containers, giving the user the option to select which scheduling or networking solution to use. Once this (significant) change to rancher-agent was made, Rancher Labs stopped progressing support for ARM devices.

This was the starting point for our work: to get something up and running as quickly as possible, we started working with the older version of rancher-agent. We followed the steps in the blog post mentioned above to get it up and running on the Raspberry Pi. Because we were running an aarch64 OS, we had to build the images from scratch, and a few modifications to the source code were required.

After building those images and tagging them accordingly, we were able to start the rancher-agent container on our Raspberry Pi such that it was recognized in the proper environment within rancher-server.

Note that Rancher is still evolving rapidly and the development team has chosen to evolve rancher-server and rancher-agent together. The upshot of this is that a given version of rancher-agent will only work with a specific version of rancher-server. rancher-agent v0.8.3 (which we were working with) only works with rancher-server v1.1.0.

After successfully building and running rancher-agent v0.8.3 on the Raspberry Pi, we decided to investigate whether a newer version of rancher-agent, v1.2.1, could be ported to ARM devices, since the decoupled microservice design of the newer rancher-agent has clear advantages. We started from the very beginning, building images from the rancher/rancher repository (agent-base and agent), then the containers which are started after rancher-instance is launched: scheduler, healthcheck, net, dns and plugin-manager (network manager). Some of these repositories have dependencies on other binaries (e.g. docker, strongswan, rancher-cni and more), but luckily almost all of these dependencies are Go binaries which, we found, can be easily ported to aarch64.

To get the system up and running, it was necessary to pull all these containers to the Raspberry Pi. It was also necessary to trick rancher-agent into using the aarch64 containers by giving them the same names (locally) as the x86_64 containers have on Docker Hub. In this way, rancher-agent started the aarch64 containers which were already on the device rather than pulling down the x86_64 containers.
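The renaming trick described above amounts to running `docker tag` once per image. The following is a minimal sketch of that step, not the exact procedure we used: every image name and tag in `RETAG_MAP` is a placeholder (an assumption for illustration) and must be replaced with the names of the images you actually built and the names your rancher-server version expects.

```python
# Sketch: generate the `docker tag` commands that give locally available
# aarch64 images the names rancher-agent would otherwise pull for x86_64.
# All image names and tags below are hypothetical placeholders.
RETAG_MAP = {
    "example/aarch64-scheduler:TAG": "rancher/scheduler:TAG",
    "example/aarch64-healthcheck:TAG": "rancher/healthcheck:TAG",
    "example/aarch64-net:TAG": "rancher/net:TAG",
    "example/aarch64-dns:TAG": "rancher/dns:TAG",
}


def docker_tag_commands(retag_map):
    """Return one `docker tag SOURCE TARGET` argument list per mapping entry."""
    return [
        ["docker", "tag", source, target]
        for source, target in sorted(retag_map.items())
    ]


if __name__ == "__main__":
    # Print the commands instead of executing them, so they can be reviewed
    # before being run on the device.
    for argv in docker_tag_commands(RETAG_MAP):
        print(" ".join(argv))
```

Printing the commands rather than running them keeps the sketch safe to try on any machine; on the Raspberry Pi the printed lines could be pasted into a shell once the placeholders are filled in.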

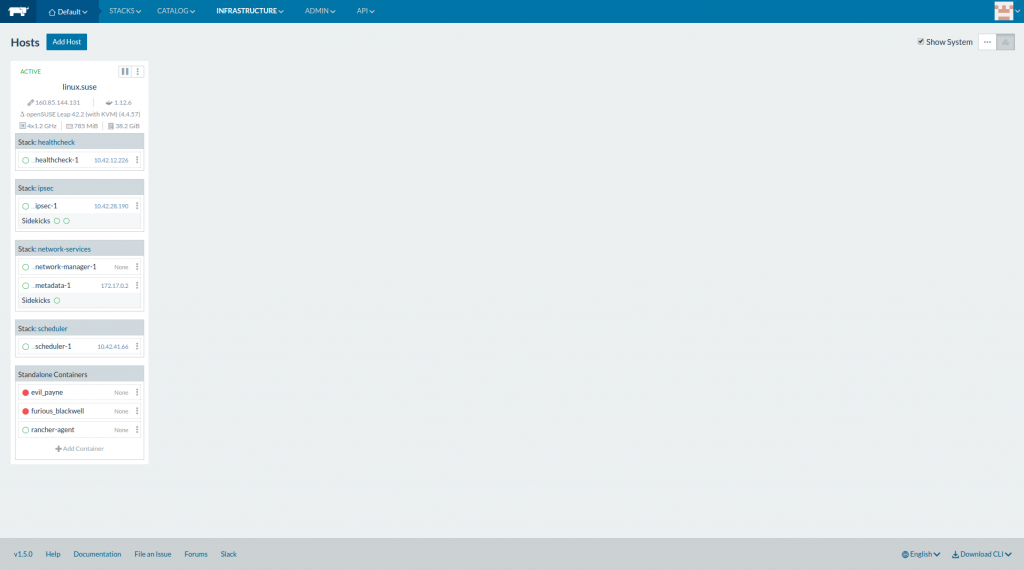

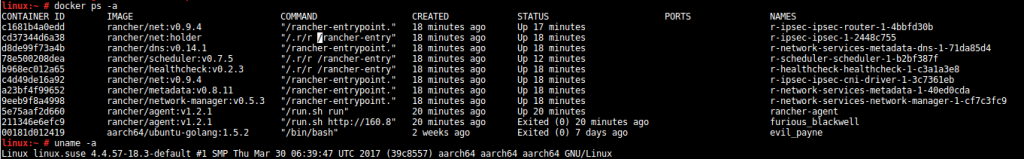

Containers running on the Raspberry Pi: Rancher view and view from inside the Raspberry Pi

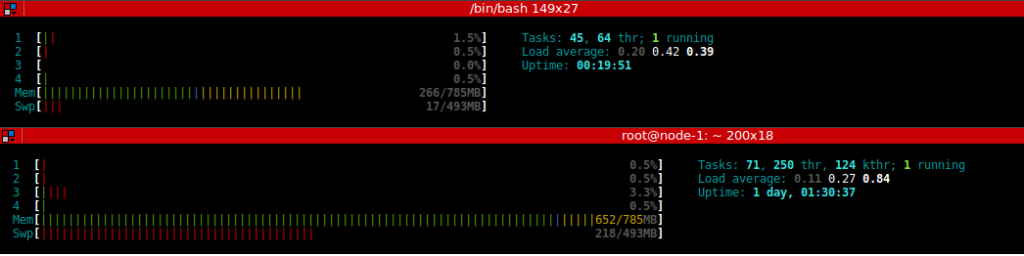

It is worth noting that the infrastructure containers created by rancher-agent increase significantly the memory consumption in the Raspberry Pi which depending in the pi model used may cause performance issues.

Memory consumption on Raspberry Pi

You can find all images we built for rancher-agent in our Docker Hub account and instructions to set it up here. Note that this is experimental work and there are known issues related to networking between containers. More specifically, the scheduler and healthcheck containers can fail to bring up the network and enter an endless loop of container restarts and recreation. The only workaround we have found so far is to clean up the host completely and start over, but we are still investigating a cleaner way to solve this.

Managing application deployment over heterogeneous computing resources with Rancher

Rancher allows easy deployment and management of applications over a set of hosts, including in a multi-arch environment. Support for this comes from an additional scheduling rule that directs each piece of the application to the appropriate host. Adding labels to hosts (e.g. type=rpi) allows us to specify such a scheduling rule in Cattle. The rule can be added to any docker-compose file as a label on the service, which Cattle then processes to map the service to the right host. See the example below:

```yaml
wordpress:
  image: aarch64/wordpress
  links:
    - mariadb:mysql
  labels:
    io.rancher.scheduler.affinity:host_label: type=rpi
  ports:
    - 8080:80
```
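To make the effect of the host label rule more concrete, here is a simplified model of the matching it implies. This is an illustrative sketch only, not Cattle's scheduler: the host names, the `hosts` dictionary and both helper functions are hypothetical, and the only element taken from the example above is the `io.rancher.scheduler.affinity:host_label` key with its `key=value` format.

```python
# Simplified model of host-label affinity: a service may only be placed on
# hosts whose labels contain every key=value pair the service asks for.
# This is NOT Cattle's implementation; hosts and helpers are hypothetical.
AFFINITY_KEY = "io.rancher.scheduler.affinity:host_label"


def parse_affinity(value):
    """Turn 'k1=v1,k2=v2' into {'k1': 'v1', 'k2': 'v2'}; skip malformed items."""
    required = {}
    for item in value.split(","):
        key, sep, val = item.partition("=")
        if sep:
            required[key.strip()] = val.strip()
    return required


def eligible_hosts(service_labels, hosts):
    """Return the names of hosts that satisfy the service's affinity rule."""
    required = parse_affinity(service_labels.get(AFFINITY_KEY, ""))
    return [
        name
        for name, labels in hosts.items()
        if all(labels.get(key) == val for key, val in required.items())
    ]


if __name__ == "__main__":
    hosts = {
        "pi-1": {"type": "rpi"},       # hypothetical Raspberry Pi host
        "cloud-vm-1": {"type": "vm"},  # hypothetical x86_64 cloud VM
    }
    wordpress_labels = {AFFINITY_KEY: "type=rpi"}
    print(eligible_hosts(wordpress_labels, hosts))
```

In this model a service without the affinity label is eligible for every host, which is why an aarch64-only image needs the label in a mixed environment.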

Although this is a very useful mechanism when working with heterogeneous architectures, it clearly needs to evolve significantly to provide proper support in this context. For example, it is easy to envisage cases in which conditional deployment on nodes is a requirement, and this may have implications for the container that gets used: e.g. deploy the aarch64 container to the Raspberry Pi if there is sufficient memory, but deploy the x86_64 container to a compute node close to the edge if not.
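The kind of conditional rule we have in mind could look like the sketch below. It describes a capability that Rancher does not offer today: the function, the memory threshold, the `type=edge-x86` host label and the use of the plain `wordpress` image as the x86_64 variant are all assumptions made purely for illustration.

```python
# Sketch of a conditional placement rule: prefer the Raspberry Pi when it has
# enough free memory, otherwise fall back to an x86_64 node near the edge.
# Everything here is hypothetical and is not an existing Rancher feature.
def choose_deployment(free_memory_mb, required_mb):
    """Pick an image and host label based on the Pi's available memory."""
    if free_memory_mb >= required_mb:
        return {"image": "aarch64/wordpress", "host_label": "type=rpi"}
    # Assumed fallback: an x86_64 image and a label for nearby x86_64 nodes.
    return {"image": "wordpress", "host_label": "type=edge-x86"}


if __name__ == "__main__":
    # Hypothetical numbers: 300 MB free on the Pi, 256 MB needed by the service.
    print(choose_deployment(free_memory_mb=300, required_mb=256))
    # Hypothetical numbers: only 100 MB free, so the fallback is chosen.
    print(choose_deployment(free_memory_mb=100, required_mb=256))
```

A real implementation would also have to decide where the memory figure comes from and how often it is re-evaluated, which is part of what we mean by the mechanism needing to evolve.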

Final Thoughts

Generally, getting Rancher up and running on a Raspberry Pi was not a particularly painful process and did not require deep embedded systems knowledge or experience. Building the rancher-agent binaries and Docker images was also fairly simple and only needed a basic understanding of Golang to fetch all dependencies and build the binaries. Moreover, there has been a focus on bringing the container world to ARM-based devices (Hypriot and Resin.io are good examples), so we can envisage that this world of heterogeneous container-based systems connecting edge devices is not far away.