In our previous blog post we described our experience enabling floating IPs in an OpenStack Cells deployment using nova-network, which required modifying the Nova Python libraries. That solution was not robust enough, so we had a go at installing Neutron networking, even though there is very little documentation specifically addressing Neutron with Cells. Neutron's configuration turned out to support and integrate with the Cells architecture better than we expected: unlike with nova-network, operations such as floating IP association succeed without any modifications to the source code. Here, we present an overview of the Neutron networking architecture in Cells, as well as the main lessons we learnt from installing it in our (small) Cells deployment.

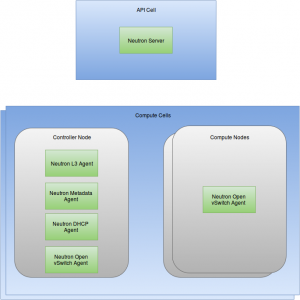

In a Cells deployment, each cell normally has its own message bus, and cells communicate with each other over these separate buses. Neutron, on the other hand, requires a single message bus shared by all entities under the control of one neutron-server, which here includes both the API Cell and the Compute Cells. We therefore created a new message bus dedicated to Neutron on the API Cell, and each Compute Cell connects to this bus. We then installed the neutron-server service on the API Cell and split the remaining Neutron services across the Controller and Compute nodes in the Compute Cells. Following Neutron's installation guide, we decided to run the standard Neutron networking services (DHCP agent, L3 agent, metadata agent and Open vSwitch agent) on the Controller node in each cell for performance reasons, while the Compute nodes run only the Open vSwitch agent. This configuration is illustrated in the figure above. Other configurations are certainly possible, and this one may not suit many situations, but it works well for what we are trying to do.
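To make the layout concrete, here is a small Python sketch that models the placement as plain data and checks the one property Neutron insists on: every Neutron service points at the same bus. The host names, cell names and RabbitMQ URL are hypothetical placeholders rather than values from our deployment, and the model deliberately ignores Nova's own per-cell buses.

```python
from dataclasses import dataclass

# Hypothetical URL of the dedicated Neutron message bus on the API Cell.
NEUTRON_BUS = "rabbit://neutron:NEUTRON_PASS@api-cell-mq:5672/"


@dataclass
class Node:
    name: str
    cell: str
    services: list[str]
    # Every Neutron service on this node talks to this bus.
    neutron_bus: str = NEUTRON_BUS


def build_deployment(compute_cells: list[str]) -> list[Node]:
    """Place neutron-server on the API Cell and the agents in each Compute Cell."""
    nodes = [Node("api-controller", "api", ["neutron-server"])]
    for cell in compute_cells:
        # Network agents are concentrated on the cell's Controller node.
        nodes.append(Node(f"{cell}-controller", cell, [
            "neutron-dhcp-agent",
            "neutron-l3-agent",
            "neutron-metadata-agent",
            "neutron-openvswitch-agent",
        ]))
        # Compute nodes only run the Open vSwitch agent.
        nodes.append(Node(f"{cell}-compute1", cell, ["neutron-openvswitch-agent"]))
    return nodes


def shares_one_bus(nodes: list[Node]) -> bool:
    """True if all Neutron services under one neutron-server share a single bus."""
    return len({node.neutron_bus for node in nodes}) == 1


if __name__ == "__main__":
    deployment = build_deployment(["cell1", "cell2"])
    for node in deployment:
        print(f"{node.cell:5} {node.name:16} {', '.join(node.services)}")
    print("single Neutron bus:", shares_one_bus(deployment))
```

Adding another Compute Cell in this model only adds nodes; it never adds a second Neutron bus, which mirrors the constraint described above.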

The installation process was generally in line with the instructions in the installation guide; the only change required was to configure the message broker settings to use the dedicated Neutron message bus on the API Cell instead of the Nova cells message bus in each Compute Cell.
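A minimal sketch of that single change, using Python's standard `configparser` to set the broker in a `neutron.conf` file. It assumes the oslo.messaging `transport_url` option in `[DEFAULT]` (some releases configure RabbitMQ through separate `rabbit_host`-style options instead), and the host name and credentials are hypothetical. Note that `configparser` drops comments when it rewrites a file, so this suits generating fresh config better than editing a hand-annotated one.

```python
import configparser
import io

# Hypothetical address of the dedicated Neutron RabbitMQ bus on the API Cell.
NEUTRON_BUS_URL = "rabbit://neutron:NEUTRON_PASS@api-cell-neutron-mq:5672/"


def point_at_neutron_bus(conf_text: str, bus_url: str = NEUTRON_BUS_URL) -> str:
    """Return neutron.conf text with the broker set to the shared Neutron bus.

    Assumes the oslo.messaging ``transport_url`` option in [DEFAULT].
    """
    parser = configparser.ConfigParser(interpolation=None)
    parser.optionxform = str  # keep option names exactly as written
    parser.read_string(conf_text)
    parser["DEFAULT"]["transport_url"] = bus_url
    out = io.StringIO()
    parser.write(out)
    return out.getvalue()


if __name__ == "__main__":
    print(point_at_neutron_bus("[DEFAULT]\ncore_plugin = ml2\n"))
```

The same URL would go into `neutron.conf` on the API Cell and on every Controller and Compute node in the Compute Cells, while Nova's own configuration in each cell keeps pointing at that cell's bus.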

As described in this blog post, Neutron port notification needs to be disabled in order to launch a VM successfully. Normally, Neutron notifies Nova when an instance is plugged into the network, but because the two services are running on different message buses, Nova never receives the notification and the launch therefore never succeeds. Adding the following parameters to nova.conf on the Compute nodes avoids this issue: Nova no longer treats a missing notification from Neutron as fatal, and stops waiting for it after 5 seconds.

```ini
vif_plugging_is_fatal = false
vif_plugging_timeout = 5
```
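The effect of these two options can be illustrated with a simplified model of the wait, written in Python with `threading.Event` standing in for the notification from Neutron. This is not Nova's implementation: the function and exception names are hypothetical, and only the two option names come from `nova.conf`.

```python
import threading


class VifPluggingTimeout(Exception):
    """Hypothetical error raised when the plug notification never arrives."""


def wait_for_vif_plugged(event: threading.Event, timeout: float, is_fatal: bool) -> bool:
    """Simplified model (not Nova's code) of vif_plugging_timeout / vif_plugging_is_fatal.

    Returns True if the notification arrived, False if we gave up waiting
    but carried on, and raises if a missed notification is treated as fatal.
    """
    if event.wait(timeout):
        return True
    if is_fatal:
        raise VifPluggingTimeout(f"no network-vif-plugged event after {timeout}s")
    return False


if __name__ == "__main__":
    # Neutron's notification never reaches Nova: the services sit on
    # different message buses, so nothing ever sets this event.
    never_notified = threading.Event()
    print(wait_for_vif_plugged(never_notified, timeout=0.1, is_fatal=False))  # False
    try:
        wait_for_vif_plugged(never_notified, timeout=0.1, is_fatal=True)
    except VifPluggingTimeout as exc:
        print("launch fails:", exc)
```

In this model, setting the fatal flag to false turns the missing notification into a bounded delay rather than a failure, which is the behaviour the two settings above are meant to achieve.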

Using this approach, we got Neutron networking running on our experimental Cells deployment. Neutron certainly plays nicer with the Cells architecture than nova-network does: networks and floating IPs are created and managed through the API, instead of having to be inserted manually into the database using nova-manage commands as in our previous setup. It is still not bullet-proof, though: we observed a race condition when launching a new VM. If an instance is not plugged into the network by the time vif_plugging_timeout expires, the VM launch fails (this is not critical, as launching it again usually succeeds).
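Since launching again usually works, a small retry wrapper is one way to paper over the race in scripts. In this Python sketch `launch` is a hypothetical callable that boots one VM and raises on failure; how it talks to OpenStack (CLI, SDK or otherwise) is deliberately left out.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


def retry(launch: Callable[[], T], attempts: int = 3, delay: float = 2.0) -> T:
    """Call ``launch`` until it succeeds, re-raising the last error if all attempts fail."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    last_error: Optional[Exception] = None
    for attempt in range(1, attempts + 1):
        try:
            return launch()
        except Exception as exc:  # e.g. a boot that lost the vif-plugging race
            last_error = exc
            if attempt < attempts:
                time.sleep(delay)  # pause briefly before the next attempt
    assert last_error is not None
    raise last_error


if __name__ == "__main__":
    calls = {"n": 0}

    def flaky_launch() -> str:
        # Hypothetical stand-in for a real boot: fails once, then succeeds.
        calls["n"] += 1
        if calls["n"] == 1:
            raise RuntimeError("VM went to ERROR state")
        return "ACTIVE"

    print(retry(flaky_launch, attempts=3, delay=0.0), "after", calls["n"], "attempts")
```

A real wrapper would probably also delete the instance left in ERROR state by a failed attempt before retrying.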

We are still investigating whether Nova Cells meets the requirements of our pilot deployment, but our experience so far looks promising.

More soon!
