As stated in a previous blog-post, at the beginning of this year we started migrating the open-source application Zurmo CRM into the cloud. In this first blog-post on our progress we describe our initial plan, show the basic components of a cloud-optimized application and discuss whether to stick with Zurmo’s monolithic architecture style or migrate it to a microservices architecture. We’ll also cover the first steps we took in migrating Zurmo to the cloud and how we plan to continue.

TL;DR

- We use the term cloud-optimized to describe an application which has the same characteristics as a cloud-native application but is the result of a migration process.

- The basic components of a cloud-optimized application are the application core, enabling systems, and monitoring and management systems, all of which have to be scalable and resilient.

- A monolithic architecture style is best suited for smaller applications (little functionality / low number of developers) or when starting to build a new application.

- A microservices architecture style is best suited for large applications (lots of functionality / high number of developers). Its benefits are less technical in nature; they lie more in how it helps to manage the development and deployment of applications.

- We decided to stick with the monolithic architecture. The first change we made to the application was to horizontally scale the web server with the use of a load balancer and make the application core stateless.

Cloud-Native vs. Cloud-Optimized

Before describing the migration process, let’s quickly discuss the terms cloud-native and cloud-optimized. In this blog-post we’ll describe the application that should result from the migration as cloud-optimized. The reason is that Zurmo, the application we’re migrating into the cloud, was not designed for the cloud and can therefore never really be called ‘cloud-native’. So we needed a term for an application which exhibits the same characteristics as a cloud-native application but was migrated to the cloud rather than built for it. We chose ‘cloud-optimized’ since in the end it is really all about how efficiently an application uses the resources and possibilities the cloud environment offers. The only difference between a cloud-native and a cloud-optimized application is therefore that the former was designed from the ground up for the cloud while the latter is the result of a migration process.

At the beginning of the migration process

When we started with the process of migrating Zurmo onto the cloud our initial plan was as follows (the first two points being done in parallel):

- Analyze Application (Architecture / Logical Structure)

- Generate Load-Tests for Application

- Test how much load application can handle

- Plan Migration

- Define new Architecture

- Define necessary changes to current application

- Migrate Application

The idea behind this plan is quite straightforward. First get a picture of how the application works and how much load it can handle, then think about ways to change or optimize the application for deployment in a cloud environment. Optimizing an application for the cloud essentially means changing it in such a way that it becomes scalable and resilient, as these are the basic characteristics every cloud-optimized application should possess. The scalability and resiliency of the application should furthermore be ensured in an automated fashion. This means that some monitoring and management systems need to be added in order to observe the health as well as the load of the application and react when certain events happen (e.g. when a machine or a component fails or when the response time of the application grows above a certain threshold).
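To make the "observe and react" idea a bit more concrete, here is a minimal Python sketch of the decision a management system might take based on what the monitoring system reports. Everything in it is an assumption made for illustration: the `Observation` fields, the `plan_actions` function, the 500 ms threshold and the minimum of two instances are hypothetical and are not part of Zurmo or of any specific monitoring tool.

```python
from dataclasses import dataclass


@dataclass
class Observation:
    """One snapshot reported by a (hypothetical) monitoring system."""
    healthy_instances: int
    failed_instances: int
    avg_response_time_ms: float


def plan_actions(obs, max_response_time_ms=500.0, min_instances=2):
    """Return a list of (action, count) tuples for the management system.

    The thresholds are illustrative defaults, not recommendations.
    """
    actions = []

    # Resilience: every failed instance gets replaced.
    if obs.failed_instances > 0:
        actions.append(("replace", obs.failed_instances))

    # Number of instances running once the replacements are up.
    expected = obs.healthy_instances + obs.failed_instances

    # Scalability: keep a minimum pool size, and add capacity
    # when the response time grows above the threshold.
    if expected < min_instances:
        actions.append(("scale_out", min_instances - expected))
    elif obs.avg_response_time_ms > max_response_time_ms:
        actions.append(("scale_out", 1))

    return actions
```

A real setup would run such a check periodically and would also need a rule for scaling back in once the load drops, which is left out here.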

Basic Components of a Cloud-Optimized Application

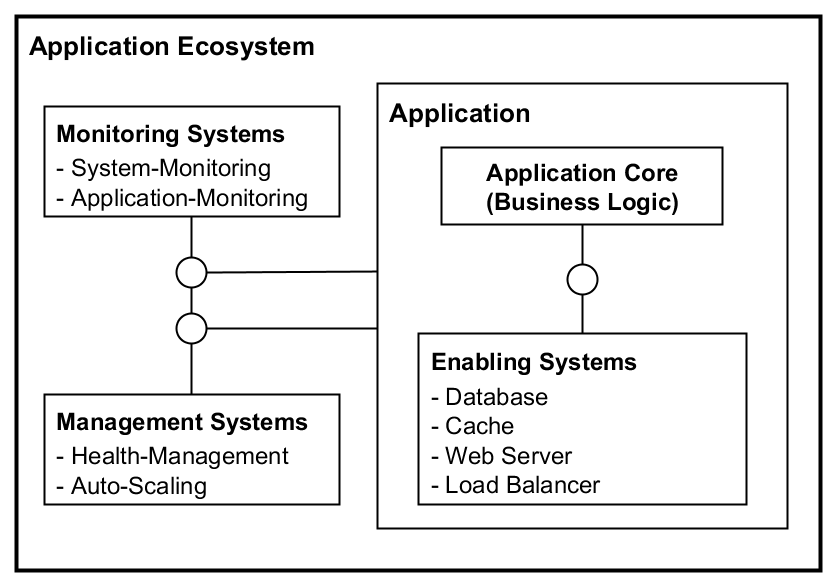

This leads us to a basic view of what a cloud-optimized application should look like and what its main components should be:

Instead of only speaking of an application we have to think of an application ecosystem which is composed of several different parts. At the core of this ecosystem lies the application core, the part that implements the business logic. It is the part which essentially defines the application: its raison d’être. In most cases this component cannot stand by itself and needs some enabling systems. Enabling systems are commonly used software components such as databases, caching systems, web servers or load balancers. So when we talk about the application we mean the application core as well as the enabling systems. As mentioned previously, we additionally need monitoring systems which check the health of the application as well as management systems which take care of scaling the various parts of the application and generally keep it functioning as expected.

This is just the most basic way to depict a cloud-optimized application. Depending on whether the application has a monolithic or a microservices architecture this picture could change quite a bit. But it suffices for our current needs of explaining what we want to achieve with our application. We’ll add more details and extend this architecture as the migration progresses.

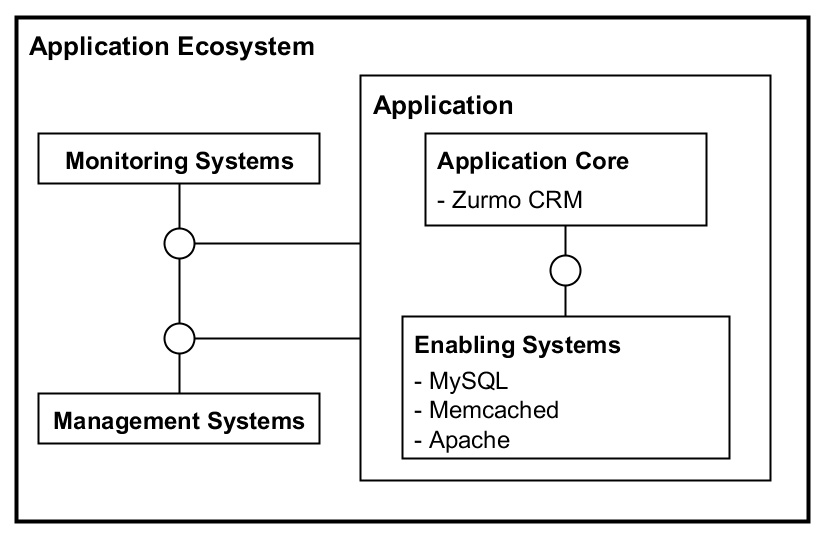

Let’s have a look at how the elements in Fig. 1 map to the basic setup of Zurmo:

At the moment Zurmo only consists of the application core (some PHP code), an Apache web server, a Memcached cache and a MySQL database. There are no monitoring or management systems, so this is something we’ll need to add to the application ecosystem at some later point.

Migration Path: Monolithic or Microservices Architecture

Let’s think about what our first steps should be in optimizing this application for the cloud. We know that a cloud-optimized application needs to be scalable and resilient. We furthermore know that Zurmo currently consists of an application core and some enabling systems, and that at some point we will need to add a monitoring as well as a management system. This leads to two conclusions:

- We need to make every part of the application scalable and resilient.

- We need to add monitoring and management systems to the application ecosystem.

What options do we have to achieve this? As we see it there are basically two ways:

- Leave the application as it is (Monolith) and make every part independently scalable and resilient.

- Split up the application core and build a set of microservices each of which will be scalable and resilient.

There is of course also the option of starting with the first and moving on to the second later.

The question we had to ask ourselves was whether to continue with Zurmo’s current monolithic architecture style or to change it to a microservices architecture style right away. What would be the pros and cons of each choice?

– Option 1: Keeping monolithic architecture

- + No or very little change to application core.

- + Keeps architecture complexity low.

- +/- Only one solution (programming language, database, caching) used for every aspect of the application-core. In our case PHP.

- – If the application becomes too big, coordinating the development process can become very complex and cumbersome. (More of a general point)

More generally: The main benefits are visible on a short-term basis. Better suited for smaller applications (little functionality / low number of developers) or when starting to build a new application.

– Option 2: Changing to microservices architecture

- + Best suited tooling can be used for each microservice.

- + Microservices can be developed and deployed independently of other microservices (no dependencies).

- + Failure of a microservice should not influence other microservices → Higher granularity of failure isolation.

- – Extensive change to application core → Lot of effort.

- – Adds complexity to architecture which needs to be managed.

More generally: High upfront effort to change an existing application. High overhead and extra complexity for smaller applications. High benefits for large applications (lots of functionality / high number of developers).

As we currently see it, the reason for choosing the microservices over the monolithic architecture style is not so much a technical as an organizational or process-related one. The microservices architecture style seems better suited to managing large applications which consist of a multitude of different functionalities and have a high number of developers working on them. When starting to develop a new application it seems more efficient to work on a monolith which you start to split up once the need actually arises.

In our case we saw that Zurmo is quite a complex application, especially because multiple modules depend on the same database tables (of which there are about 150 at the moment). Deeply understanding this application and trying to separate out specific parts would require a fair amount of effort. Furthermore we are not a big team, just three guys. We therefore decided to keep the monolithic architecture of Zurmo for now and see how much we can optimize the application in this way. The plan is to transform the architecture to a microservices architecture in a second step.

First Steps: Scaling the Web Server & Making Application-Core Stateless

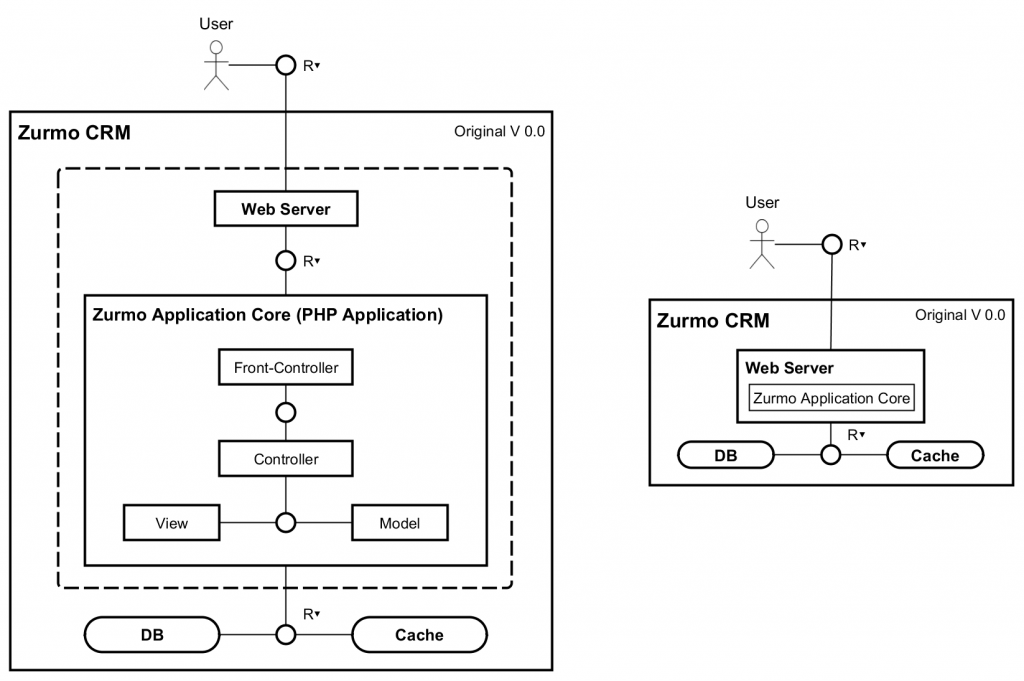

Having decided to stick with the monolithic architecture, let’s talk about the first steps we took in optimizing the application for the cloud. Let’s start with Zurmo’s current architecture:

Zurmo CRM is a PHP application based on the Yii framework that employs the MVC pattern (plus a front controller which is responsible for handling/processing the incoming HTTP requests). Apache is the recommended web server, MySQL is used as the backend datastore and Memcached for caching. It is pretty much a typical monolithic 3-tier application with an additional caching layer. The recommended way of running Zurmo is via Apache’s PHP module, so the logic that handles the HTTP requests and the actual application logic are rather tightly coupled. In the rest of this article we’ll continue with the simplified architecture.

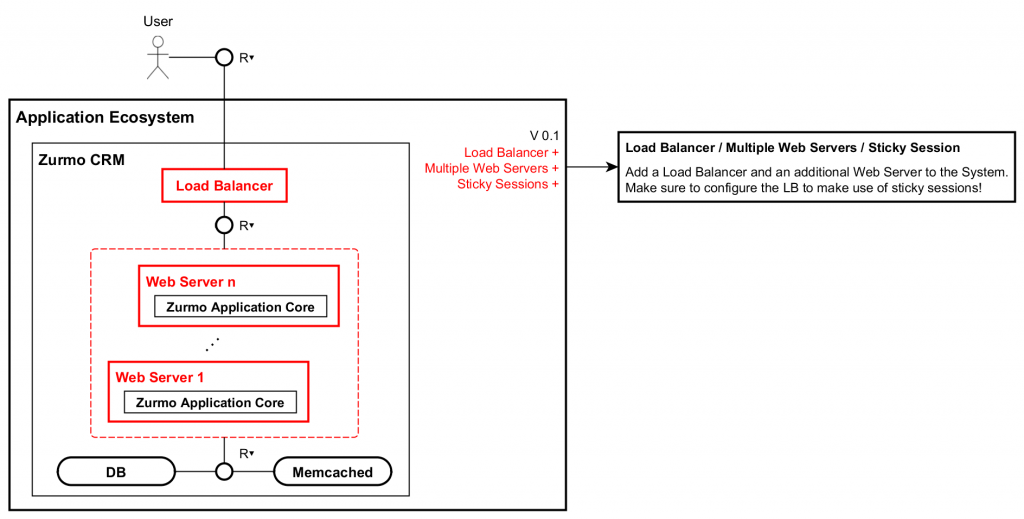

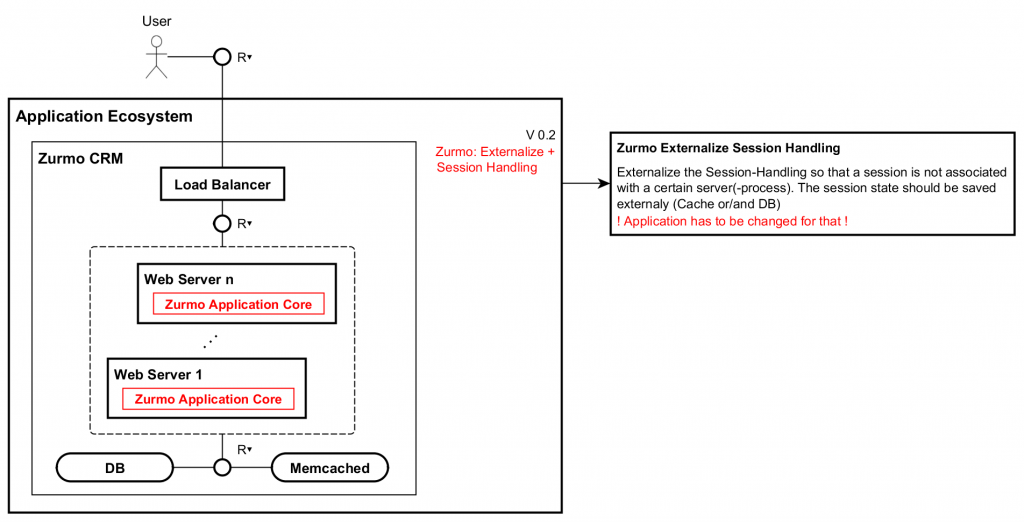

We know that we need to make every part of the application scalable and resilient and that we need to add monitoring and management systems to the application ecosystem. We decided to first scale out the web server. Since the application core is, in its current configuration, tightly coupled to the web server (every Apache process basically comes with a PHP interpreter), scaling the web server automatically also scales the application core. To achieve this, all we need is a load balancer which forwards incoming HTTP requests to the web servers. Currently Zurmo saves session-based information locally on the web server. This means that the load balancer needs to ensure that requests from a specific user are always forwarded to the same server. We can achieve this with the use of sticky sessions. After this step the architecture looks something like this:

The drawback of this setup is of course that if one of the web servers crashes, users who were connected to that server lose their session information. So we added scalability but did not really make the solution resilient. The cause of this problem is the use of sticky sessions together with locally stored session data. To fix this, the session handling of the application-core needs to be externalized. That means that the application-core has to be changed in such a way that instead of saving the session information locally it saves it externally in a database or a cache.
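The following Python sketch illustrates this drawback with a toy model of sticky sessions. It is not how a real load balancer is implemented; the `StickyBalancer` class, its method names and the server names `web1`/`web2` are hypothetical and only serve to show where the session data lives and why it disappears when a server crashes.

```python
class StickyBalancer:
    """Toy model: sessions are pinned to one server and stored only there."""

    def __init__(self, servers):
        # Per-server local session storage: server name -> {session_id: data}
        self.local_sessions = {name: {} for name in servers}
        # Affinity table: session_id -> server name (the "sticky" part)
        self.affinity = {}
        self._counter = 0

    def _pick(self):
        # Simple round robin over the servers that are still alive.
        names = sorted(self.local_sessions)
        name = names[self._counter % len(names)]
        self._counter += 1
        return name

    def handle(self, session_id, update=None):
        """Route a request and return (server, session data seen by it)."""
        server = self.affinity.get(session_id)
        if server not in self.local_sessions:
            # New session, or the pinned server is gone: pick another one.
            server = self._pick()
            self.affinity[session_id] = server
        session = self.local_sessions[server].setdefault(session_id, {})
        if update:
            session.update(update)
        return server, dict(session)

    def crash(self, server):
        # The server disappears together with its local session data.
        del self.local_sessions[server]
```

In this model, a user whose server crashes is silently re-routed to another server, but that server has never seen the session, so the user starts with empty session data (in practice: is logged out).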

Even though we originally planned not to touch the application-core, in this case there was no way around it. But since session information is accessed from only one point within Zurmo, the modification did not require too much effort. We modified the session handling in such a way that it now saves the session state in the cache as well as in the database. We can now access it quickly from the cache or, should the cache fail, still recover it from the database. After this change the architecture looks exactly the same, but the overall application is already considerably more scalable and resilient. We don’t have to use sticky sessions anymore, users can be evenly distributed among the existing web servers, and if one of the web servers or the caching system should crash the users won’t be interrupted by it.
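The idea of keeping the session state in both the cache and the database can be sketched as follows. This is a simplified Python illustration of the pattern, not Zurmo’s actual PHP code: the `SessionStore` and `InMemoryBackend` classes are hypothetical stand-ins, with `InMemoryBackend` playing the role of Memcached or MySQL and its `available` flag simulating an outage.

```python
class InMemoryBackend:
    """Stand-in for a cache or database; `available` simulates an outage."""

    def __init__(self):
        self.data = {}
        self.available = True

    def get(self, key):
        if not self.available:
            raise ConnectionError("backend unavailable")
        return self.data.get(key)

    def set(self, key, value):
        if not self.available:
            raise ConnectionError("backend unavailable")
        self.data[key] = dict(value)


class SessionStore:
    """Writes sessions to database and cache, reads cache first."""

    def __init__(self, cache, database):
        self.cache = cache
        self.database = database

    def save(self, session_id, data):
        # The database holds the durable copy, so it is written first.
        self.database.set(session_id, data)
        try:
            self.cache.set(session_id, data)
        except ConnectionError:
            pass  # A failed cache must not break the request.

    def load(self, session_id):
        try:
            data = self.cache.get(session_id)
        except ConnectionError:
            data = None
        if data is None:
            # Cache miss or cache outage: recover from the database.
            data = self.database.get(session_id)
            if data is not None:
                try:
                    self.cache.set(session_id, data)  # re-warm the cache
                except ConnectionError:
                    pass
        return data
```

Because every web server talks to the same external store, any server can handle any request, which is what makes sticky sessions unnecessary. The sketch assumes the database itself stays available; making the database and the cache resilient is a separate step.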

Another point not to be forgotten is the newly added load balancer. We now also need to make this part scalable and resilient, and of course the database and the cache as well. How we do this will be part of a later blog-post.

Next Steps

The changes we made so far are of course just a small part of the overall modifications needed to optimize Zurmo for the cloud.

At the same time as we started analyzing Zurmo and thinking of ways to optimize it for the cloud, we started with some basic load tests to see how many requests it could actually handle. In the next post of this series we will talk about those load tests and how they’ll help us to further optimize Zurmo for the cloud.

Links

- Microservice and Monolithic Architecture Style

- Cloud-Native Application Initiative