The goal of the Cloud Robotics initiative of the SPLab is to ease the integration of Cloud Computing and Robotics workloads. One of the first things we need to sort out is how to leverage different networking models available on the cloud to support these mixed workloads.

In this blog post we’ll see a handy little trick for running ROS nodes as pods (and services) in any Kubernetes cluster, so that they can transparently communicate over a ROS topic.

We’re using the Robot Operating System (ROS) both on robots and in the cloud. As you (might not) know, ROS 1 uses a ROS master as a registrar for the other ROS nodes, which act as publishers or subscribers on a topic. When a publisher advertises a topic and a subscriber subscribes to it, the master does not relay any data. Instead, it tells the subscriber where the publisher can be reached. The subscriber then connects to the publisher directly, and the publisher sends unicast messages straight to the subscriber whenever there’s something to say (a pretty low-level pub/sub implementation). See the figure below (credit: Clearpath Robotics).
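To make the registrar role concrete, here is a toy, in-memory model of the master written in Python. It is not rospy and does no networking. The class name and the URIs (including their port numbers) are made up for illustration. It only mirrors the shape of the ROS Master API, where registering a publisher returns the current subscribers and registering a subscriber returns the current publishers.

```python
from collections import defaultdict


class ToyMaster:
    """Toy, in-memory model of the ROS 1 master as a registrar (not rospy).

    It only records who publishes and who subscribes to each topic;
    it never carries any topic data itself.
    """

    def __init__(self):
        self.publishers = defaultdict(list)   # topic -> publisher URIs
        self.subscribers = defaultdict(list)  # topic -> subscriber URIs

    def register_publisher(self, topic, uri):
        """Record a publisher and return the current subscribers.

        The real master would also notify those subscribers (publisherUpdate).
        """
        self.publishers[topic].append(uri)
        return list(self.subscribers[topic])

    def register_subscriber(self, topic, uri):
        """Record a subscriber and return the publishers it should contact."""
        self.subscribers[topic].append(uri)
        return list(self.publishers[topic])


master = ToyMaster()
master.register_publisher("/my_topic", "http://talker:41234/")
peers = master.register_subscriber("/my_topic", "http://listener:45678/")
# The listener now opens a connection to each URI in `peers` by itself;
# the master is no longer involved in the data flow.
print(peers)  # ['http://talker:41234/']
```

The master only hands out addresses. Everything after that happens between the two nodes, which is why they must be able to reach each other.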

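One of the assumptions in the figure is that publishers and subscribers can directly address each other. In fact, ROS has quite specific networking requirements for topic messages to be relayed correctly:

- every node must be able to reach every other node on any port;
- every node must advertise itself under a name that all the others can resolve.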
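A quick way to check the first requirement from inside a pod is to try opening a TCP connection to a peer. The sketch below assumes Python 3 and uses only the standard library. `can_reach` is a hypothetical helper, and the host names and ports you pass it are placeholders for whatever your nodes actually registered.

```python
import socket


def can_reach(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port can be opened.

    The host name is resolved inside create_connection, so a name that
    does not resolve also yields False.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # socket.gaierror (DNS failure), ConnectionRefusedError and
        # socket timeouts are all subclasses of OSError.
        return False


# Example (placeholder name/port), run from inside a pod:
# can_reach("master", 11311)
```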

Now, we want to deploy the same thing on a Kubernetes cluster running in the cloud. Following the best practices of current cloud development, we’re packaging each ROS node in a separate Docker container. Consequently, we’re going to use a separate pod for each ROS node, so that nodes can be scheduled on any server in the cluster. Also, we’d rather not need any specific network setup, and instead let the Kubernetes overlay network do the work for us.

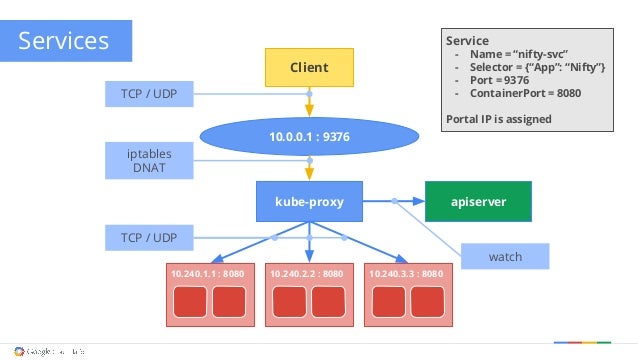

Kubernetes has a nifty discovery mechanism based on DNS that lets your pods find services by name rather than meddling with IPs. This is a very important feature in a cloud, where the assumption is that pods might disappear at any time, while services exist independently of the pods that implement them.

According to the Kubernetes DNS documentation, “the only objects to which we are assigning DNS names are Services”, hence if we want our ROS nodes to find each other without implementing a discovery mechanism, we need to create a service for each ROS node we intend to deploy.
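For reference, this is the DNS name pattern Kubernetes documents for Services. The sketch assumes the `default` namespace and the default `cluster.local` cluster domain, both of which may differ in your cluster. `service_fqdn` is just an illustrative helper.

```python
def service_fqdn(service, namespace="default", cluster_domain="cluster.local"):
    """Return the DNS name Kubernetes assigns to a Service.

    Pods in the same namespace can also use the bare service name
    (e.g. "master") thanks to the search domains in their resolv.conf.
    """
    return f"{service}.{namespace}.svc.{cluster_domain}"


# One Service, hence one DNS name, per ROS node in this example.
for node in ("master", "talker", "listener"):
    print(service_fqdn(node))
```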

This is quite straightforward for the ROS Master. Here’s the pod specification:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: master
  labels:
    name: master
spec:
  containers:
  - name: master
    image: ros:indigo
    ports:
    - containerPort: 11311
      name: master
    args:
    - roscore
```

No big surprises. We’re using the excellent “official” ROS containers from Docker Hub (https://hub.docker.com/_/ros/) and exposing port 11311, where the ROS master accepts incoming connections from other nodes.

Here’s the ROS Master service specification:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: master
spec:
  ports:
  - port: 11311
    protocol: TCP
  selector:
    name: master
  type: ClusterIP
```

We are creating a service of type “ClusterIP” on port 11311. This creates a virtual IP in the Kubernetes network and makes sure that all traffic to that IP and port is forwarded by kube-proxy to the right pod(s).
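Once the master pod and service are up, you can check from another pod that the master answers behind the service name. One way is to call the ROS Master API’s `getUri` method over XML-RPC. This sketch assumes Python 3; on the Indigo images, which ship Python 2.7, the module is `xmlrpclib` instead of `xmlrpc.client`. The caller ID `/k8s_check` is arbitrary.

```python
import xmlrpc.client


def master_uri(master_url="http://master:11311", caller_id="/k8s_check"):
    """Ask a ROS 1 master for its URI via the Master API's getUri call.

    Master API calls return a (code, statusMessage, value) triple,
    where code 1 means success.
    """
    proxy = xmlrpc.client.ServerProxy(master_url)
    code, status, uri = proxy.getUri(caller_id)
    if code != 1:
        raise RuntimeError(f"master replied with code {code}: {status}")
    return uri
```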

However, the problem comes when we try to do the same for the publisher and the subscriber. If you look at the Python implementation of ROS publishers and subscribers, they listen on dynamic ports and register those with the master as their reachable endpoints. Unfortunately, Kubernetes services currently do not support dynamic ports (nor port ranges, for that matter). Traffic to those ports would therefore never be forwarded through a service’s virtual IP.
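The following sketch illustrates the mismatch. Binding to port 0 lets the operating system pick a free port at runtime, which is how nodes end up on dynamic ports. A ClusterIP service, on the other hand, only forwards the ports declared in its spec. Both helpers are hypothetical, and the port set `{11311}` simply mirrors the master service above.

```python
import socket


def bind_ephemeral():
    """Listen on a port chosen by the OS and return (socket, port).

    Binding to port 0 means "any free port", so the port number is only
    known at runtime, after the node has started.
    """
    sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    sock.bind(("0.0.0.0", 0))
    sock.listen()
    return sock, sock.getsockname()[1]


def routed_by_service(port, declared_ports):
    """A ClusterIP service only forwards the ports listed in its spec."""
    return port in declared_ports


sock, port = bind_ephemeral()
print(port, routed_by_service(port, {11311}))
sock.close()
```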

Luckily for us, the concept of headless services lets us:

- Still register a pod so that it can be resolved by DNS, and

- Skip kube-proxy forwarding altogether, so that traffic is not limited to the specific set of ports declared in the service
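The DNS difference between the two kinds of service can be observed with a plain `getaddrinfo` lookup. Assuming Python 3 and a pod inside the cluster, a regular ClusterIP service should resolve to its single virtual IP. A headless service such as “talker” should resolve to the IPs of the matching pods. `resolve_all` is a hypothetical helper.

```python
import socket


def resolve_all(name):
    """Return the sorted set of IPv4 addresses a name resolves to.

    For a regular ClusterIP service this is a single virtual IP; for a
    headless service (clusterIP: None) the cluster DNS returns one A
    record per ready pod matching the selector.
    """
    infos = socket.getaddrinfo(name, None, socket.AF_INET, socket.SOCK_STREAM)
    # Each entry is (family, type, proto, canonname, sockaddr);
    # for AF_INET, sockaddr is (ip, port).
    return sorted({info[4][0] for info in infos})
```

Inside the cluster, `resolve_all("talker")` would then list the talker pod’s IP(s).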

So, here’s the publisher pod:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: talker
  labels:
    name: talker
spec:
  containers:
  - name: talker
    image: ros:indigo-ros-core
    env:
    - name: ROS_HOSTNAME
      value: talker
    - name: ROS_MASTER_URI
      value: http://master:11311
    args:
    - rostopic
    - pub
    - "-r"
    - "1"
    - my_topic
    - std_msgs/String
    - "SPLab+ICClab"
```

Notice how we use environment variables to tell the publisher the URI of the ROS master and its own ROS_HOSTNAME. The latter has to be equal to the service name we’ll declare next.
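Why must ROS_HOSTNAME match the service name? The host name a node advertises ends up in the XML-RPC URI that the master hands to other nodes, so other pods must be able to resolve it. The sketch below is a simplification in Python 3. It assumes ROS_HOSTNAME takes precedence over ROS_IP, as the ROS documentation states for when both are set, and falls back to the machine’s host name. The real rosgraph logic handles more corner cases. The helpers and the port 41234 are hypothetical.

```python
import os
import socket


def advertised_host(env=None):
    """Simplified choice of the host name a ROS 1 node advertises.

    Assumes ROS_HOSTNAME takes precedence over ROS_IP, with the machine's
    own host name as the fallback; the real rosgraph code has more cases.
    """
    env = os.environ if env is None else env
    return env.get("ROS_HOSTNAME") or env.get("ROS_IP") or socket.gethostname()


def advertised_uri(port, env=None):
    """XML-RPC URI the master would hand out to other nodes (hypothetical helper)."""
    return f"http://{advertised_host(env)}:{port}/"


# With the talker pod's environment, other nodes are told to call
# http://talker:<port>/, so "talker" must resolve via cluster DNS.
print(advertised_uri(41234, {"ROS_HOSTNAME": "talker"}))  # http://talker:41234/
```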

Here’s the publisher service specification:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: talker
spec:
  clusterIP: None
  ports:
  - port: 11311
    protocol: TCP
  selector:
    name: talker
  type: ClusterIP
```

Notice the “clusterIP: None” entry. That’s what makes the service “headless”. Any pod that asks the DNS for the IP of a service called “talker” receives the list of IPs of the pods matching the “talker” service selector. Since pods can reach each other directly by IP, publisher and subscriber can now communicate directly with each other by name. As for the port, we are still required to declare one, so we simply reused the master’s; with a headless service, no traffic is actually proxied through it.
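Every ROS node needs the same pod-plus-headless-service pair, with ROS_HOSTNAME kept in sync with the service name. That makes the two manifests easy to generate. This is a hypothetical Python 3 helper, not part of our repository. It mirrors the talker specifications above as dictionaries and prints them as a `v1` `List` in JSON, which kubectl accepts just like YAML.

```python
import json


def ros_node_manifests(name, args, image="ros:indigo-ros-core",
                       master_uri="http://master:11311", port=11311):
    """Build a Pod plus a matching headless Service for one ROS node.

    ROS_HOSTNAME is set to the Service name, so the host name the node
    advertises to the master is exactly the name cluster DNS resolves.
    """
    labels = {"name": name}
    pod = {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "labels": labels},
        "spec": {"containers": [{
            "name": name,
            "image": image,
            "env": [
                {"name": "ROS_HOSTNAME", "value": name},
                {"name": "ROS_MASTER_URI", "value": master_uri},
            ],
            "args": list(args),
        }]},
    }
    service = {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "clusterIP": "None",  # headless: DNS returns the pod IPs directly
            "ports": [{"port": port, "protocol": "TCP"}],  # placeholder port
            "selector": dict(labels),
            "type": "ClusterIP",
        },
    }
    return pod, service


if __name__ == "__main__":
    pod, svc = ros_node_manifests(
        "talker",
        ["rostopic", "pub", "-r", "1", "my_topic", "std_msgs/String", "SPLab+ICClab"],
    )
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": [pod, svc]}, indent=2))
```

For example, piping the output of such a script into `kubectl create -f -` would create both objects in one go.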

You’ll find the repository with the whole example implementation on our GitHub.

TL;DR, here’s how to run it:

Clone the repo:

```bash
git clone https://github.com/icclab/ros_on_kubernetes.git
```

Create all pods and services (“ros_on_kubernetes” is the directory you just cloned):

```bash
kubectl create -f ros_on_kubernetes
```

Check that the pods and services have been created:

```bash
kubectl get all
```

Connect to the listener pod and listen to the talker:

```bash
kubectl exec -it listener -- bash
```

```
root@listener:/# source /opt/ros/indigo/setup.bash
root@listener:/# rostopic echo /my_topic
```

You should see the messages coming through the topic:

```
root@listener:/# rostopic echo /my_topic
data: SPLab+ICClab
---
data: SPLab+ICClab
---
data: SPLab+ICClab
```
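To automate this check, for instance in a CI job, you could capture a fixed number of messages with `rostopic echo -n`, which exits after that many messages, and then parse the output. The sketch assumes Python 3.7+ on a machine with kubectl configured for the cluster, plus the pod and topic names from this example. `echo_messages` and `parse_echo` are hypothetical helpers that only handle std_msgs/String output like the one above.

```python
import subprocess


def parse_echo(output):
    """Turn `rostopic echo` output for std_msgs/String into a list of strings."""
    prefix = "data: "
    return [line[len(prefix):] for line in output.splitlines()
            if line.startswith(prefix)]


def echo_messages(pod="listener", topic="/my_topic", count=3,
                  setup="/opt/ros/indigo/setup.bash"):
    """Capture `count` messages from a topic by running rostopic in the pod.

    `kubectl exec` does not go through the image entrypoint, so the ROS
    setup script is sourced explicitly before calling rostopic.
    """
    cmd = ["kubectl", "exec", pod, "--", "bash", "-c",
           f"source {setup} && rostopic echo -n {count} {topic}"]
    result = subprocess.run(cmd, capture_output=True, text=True, check=True)
    return parse_echo(result.stdout)
```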

We’re already working on the components needed to bridge a ROS environment in the cloud with ROS environments on premises, such as robots or edge nodes. Those will be the actual enablers of cloud robotics using ROS.

Stay tuned for more!

Thanks for the tutorial, very helpful. I extended your setup with kubernetes deployments, replicasets and livenessprobes in this repository: https://github.com/diegoferigo/ros-kubernetes.

Thank you Diego, nice work!

We’ll definitely pick up some of your ideas, liveness probes had been on our todo list for a while