The Problem

Cloud networking is based on technologies and protocols that were not originally designed for it. This has led to unnecessary overhead and complexity in all phases of a cloud service. Tunneling protocols inherently cause cascading encapsulation, especially in multi-tenant systems. The problem is aggravated by vendor-specific configuration requirements and heterogeneous architectures. The resulting systems are hard to reason about, error-prone, energy-inefficient, and difficult to configure and maintain.

SDN allows us to reduce that complexity, not only by unifying and centralizing network configuration but also by cutting down the protocol overhead. To address this challenge, our goal is to develop an SDK and a set of libraries that exploit the power of SDN to enable cloud-native networking systems.

State of the Art in OpenStack Neutron

Tenant isolation in a default Neutron setup is achieved by combining VLAN tagging with GRE or VXLAN encapsulation. Traffic coming from a tenant's VM carries a VLAN ID and is tagged with a unique GRE key when it leaves the compute host. The packet is then decapsulated at the destination host, where the GRE key is translated back into a VLAN ID. This kind of setup leads to inefficiencies on many levels. You can find an in-depth article about Neutron networking here.
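To make the double translation concrete, here is a minimal sketch of what each compute host has to do in such a setup. The class `HostVlanMap` and its methods are hypothetical names for illustration, not Neutron code, and we assume that VLAN IDs are allocated locally per host while the GRE key is shared across hosts.

```python
class HostVlanMap:
    """Hypothetical per-host table between local VLAN IDs and GRE keys."""

    def __init__(self):
        self._vlan_to_key = {}
        self._key_to_vlan = {}
        self._next_vlan = 1  # assumption: VLAN IDs are handed out per host

    def bind(self, gre_key):
        """Return the local VLAN ID for a GRE key, allocating one if needed."""
        if gre_key not in self._key_to_vlan:
            vlan_id = self._next_vlan
            self._next_vlan += 1
            self._key_to_vlan[gre_key] = vlan_id
            self._vlan_to_key[vlan_id] = gre_key
        return self._key_to_vlan[gre_key]

    def encapsulate(self, vlan_id, payload):
        """Leaving the host: replace the VLAN tag with the GRE key."""
        return {"gre_key": self._vlan_to_key[vlan_id], "payload": payload}

    def decapsulate(self, packet):
        """Arriving at the host: translate the GRE key back to a VLAN ID."""
        return {"vlan_id": self._key_to_vlan[packet["gre_key"]],
                "payload": packet["payload"]}


host_a, host_b = HostVlanMap(), HostVlanMap()
vlan_a = host_a.bind(100)
host_b.bind(200)
vlan_b = host_b.bind(100)

packet = host_a.encapsulate(vlan_a, b"data")
frame = host_b.decapsulate(packet)
```

Every datagram crossing hosts goes through both translations, and each host has to keep its own table in sync with the rest of the deployment.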

When an SDN controller such as OpenDaylight (ODL) is wired up with Neutron via the ML2 plugin, the network architecture stays roughly the same. This is because the network virtualization component of ODL does not fundamentally change the communication between VM instances and compute hosts.

Our idea is to design and implement a network architecture, powered by an ODL SDN application, which will provide tenant isolation without using encapsulation and VLAN tagging.

Topology Adjustments

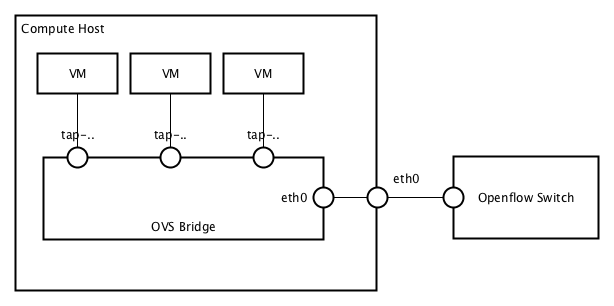

The Open vSwitch (OVS) layout in our design differs from the standard implementation in one main respect: we plan to attach the OVS ports directly to the physical network interfaces on each compute host. This means all communication stays within the Layer 2 domain.

Forwarding

OpenFlow (OF) allows us to do exact matching and forwarding on all OVS devices, which means we don’t have to rely on separating traffic via VLAN IDs. Instead, our forwarding flows will be a filtered subset of all possible communication paths, since ODL receives all the information required to relate and match datagrams to the network logic provided by Neutron.

Note that in this case the forwarding rules in OVS depend completely on the controller application. Furthermore, it is not always possible to infer the network logic from the OF instructions and OVS layouts alone.
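The idea of a "filtered subset of all communication paths" can be sketched roughly as follows. This is an illustration under our own assumptions, not the actual ODL application: the names `Port`, `Flow`, `build_flows` and the fixed `UPLINK` port number are hypothetical, and MAC addresses are assumed to be unique across networks.

```python
from dataclasses import dataclass
from itertools import permutations

UPLINK = 1  # assumed OpenFlow port number of the physical NIC on every switch


@dataclass(frozen=True)
class Port:
    switch: str   # OVS instance (compute host) the VM is attached to
    ofport: int   # OpenFlow port number of the VM's tap interface
    mac: str
    network: str  # Neutron network ID


@dataclass(frozen=True)
class Flow:
    switch: str
    in_port: int
    eth_src: str
    eth_dst: str
    out_port: int


def build_flows(ports):
    """Create exact-match flows only for port pairs in the same network."""
    flows = set()
    for src, dst in permutations(ports, 2):
        if src.network != dst.network:
            continue  # no flow is installed, so there is no connectivity
        if src.switch == dst.switch:
            flows.add(Flow(src.switch, src.ofport, src.mac, dst.mac, dst.ofport))
        else:
            # Send out of the physical NIC, then deliver on the remote switch.
            flows.add(Flow(src.switch, src.ofport, src.mac, dst.mac, UPLINK))
            flows.add(Flow(dst.switch, UPLINK, src.mac, dst.mac, dst.ofport))
    return flows


ports = [
    Port("host1", 2, "fa:16:3e:00:00:0a", "net-1"),
    Port("host1", 3, "fa:16:3e:00:00:0b", "net-1"),
    Port("host2", 2, "fa:16:3e:00:00:0c", "net-1"),
    Port("host2", 3, "fa:16:3e:00:00:0d", "net-2"),
]
flows = build_flows(ports)
```

In this sketch isolation follows from the absence of flows: the port in `net-2` never appears in any match, so no VLAN tag or tunnel key is needed to keep it apart.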

To make the approach clearer, an example workflow would look like this:

- An OpenStack user creates a Neutron object such as a VM instance.

- The Neutron northbound API from ODL gets notified and passes the information to our Application A.

- At the same time OpenStack instantiates the VM and creates a corresponding tap interface connected to an OVS.

- OVSDB southbound notifies Application A about the new port.

- Application A matches the Neutron call with the OVSDB call.

- Application A installs Layer 2 flows based on the Neutron networks and tenants.

The difference, again, is that we don’t let the OVS act independently in any way. As soon as we know that there is a Layer 2 connection inside a network or tenant, we can push the needed flows without ever seeing a datagram pass through the OVS.
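The matching step in the workflow above could look roughly like this. It is a simplified sketch, not the ODL API: `PortCorrelator` and its method names are hypothetical, and we assume that both notifications carry the same Neutron port ID (for example via the OVS interface's `external_ids`), so they can be joined regardless of arrival order.

```python
class PortCorrelator:
    """Hypothetical join of Neutron and OVSDB notifications by port ID."""

    def __init__(self, on_ready):
        self._neutron = {}  # port_id -> logical info (MAC, network)
        self._ovsdb = {}    # port_id -> physical info (switch, OpenFlow port)
        self._on_ready = on_ready

    def neutron_port_created(self, port_id, mac, network):
        self._neutron[port_id] = {"mac": mac, "network": network}
        self._try_join(port_id)

    def ovsdb_port_added(self, port_id, switch, ofport):
        self._ovsdb[port_id] = {"switch": switch, "ofport": ofport}
        self._try_join(port_id)

    def _try_join(self, port_id):
        # Only once both halves are known can Layer 2 flows be computed.
        if port_id in self._neutron and port_id in self._ovsdb:
            merged = {"port_id": port_id}
            merged.update(self._neutron.pop(port_id))
            merged.update(self._ovsdb.pop(port_id))
            self._on_ready(merged)


ready = []
correlator = PortCorrelator(ready.append)
correlator.neutron_port_created("p1", "fa:16:3e:00:00:0a", "net-1")
correlator.ovsdb_port_added("p1", "host1", 2)
```

The `on_ready` callback is where the flow installation would be triggered; no datagram has to reach the switch before that happens.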

Security Concerns

In terms of security, we will provide additional basic flow patterns that prevent any kind of spoofing. We think this is a necessity, as our design relies heavily on the correctness of each datagram as it passes multiple hops. We accomplish this by matching OVS ingress ports against the information we get from the Neutron API.
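One possible shape for such flow patterns is a pair of rules per VM port: a high-priority rule that accepts only the addresses Neutron assigned to that port, and a lower-priority rule that drops everything else arriving on it. The sketch below is an assumption on our part. The rule dictionaries, the priorities and the helper `evaluate` are hypothetical and only mimic OpenFlow priority matching, and non-IP traffic such as ARP would need rules of its own.

```python
def antispoof_rules(ofport, mac, ip):
    """Build the two rules guarding one VM port (illustrative format)."""
    return [
        {"priority": 200,
         "match": {"in_port": ofport, "eth_src": mac, "ip_src": ip},
         "action": "forward"},
        {"priority": 100,
         "match": {"in_port": ofport},
         "action": "drop"},
    ]


def evaluate(rules, packet):
    """Return the action of the highest-priority rule matching the packet."""
    for rule in sorted(rules, key=lambda r: r["priority"], reverse=True):
        if all(packet.get(field) == value
               for field, value in rule["match"].items()):
            return rule["action"]
    return "forward"  # traffic from ports without anti-spoofing rules


rules = antispoof_rules(2, "fa:16:3e:00:00:0a", "10.0.0.5")
genuine = {"in_port": 2, "eth_src": "fa:16:3e:00:00:0a", "ip_src": "10.0.0.5"}
spoofed = {"in_port": 2, "eth_src": "fa:16:3e:00:00:0a", "ip_src": "10.0.0.9"}
```

With these rules, `evaluate(rules, genuine)` yields `"forward"` and `evaluate(rules, spoofed)` yields `"drop"`.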

Address Resolution

In the default network architecture, ARP broadcast messages are filtered by multicasting them only to the ports that should be reachable within the respective tenant. This is not necessary, as the SDN controller has a complete global view of the network and all its hosts. Instead, ARP responses can be formed directly in the controller, or by OVS-specific extensions to the OpenFlow protocol, and passed back to the origin of the request. This way they never have to be handled by the network itself.
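As an illustration of the controller-side variant, the following sketch builds an ARP reply from a raw ARP request payload (Ethernet/IPv4, 28 bytes, without the Ethernet header). The function name `build_arp_reply` and the `ip_to_mac` table are hypothetical. In a real application the table would be filled from Neutron and scoped to the requester's network.

```python
import socket
import struct

# htype, ptype, hlen, plen, oper, sender MAC, sender IP, target MAC, target IP
ARP_FMT = "!HHBBH6s4s6s4s"
ARP_REQUEST, ARP_REPLY = 1, 2


def mac_to_bytes(mac):
    return bytes.fromhex(mac.replace(":", ""))


def build_arp_reply(request, ip_to_mac):
    """Answer an ARP request from the controller's own address table."""
    htype, ptype, hlen, plen, oper, sha, spa, _tha, tpa = struct.unpack(
        ARP_FMT, request)
    if oper != ARP_REQUEST:
        return None
    mac = ip_to_mac.get(socket.inet_ntoa(tpa))
    if mac is None:
        return None  # unknown host: nothing to answer
    # Swap roles: the requested host becomes the sender of the reply.
    return struct.pack(ARP_FMT, htype, ptype, hlen, plen, ARP_REPLY,
                       mac_to_bytes(mac), tpa, sha, spa)


table = {"10.0.0.6": "fa:16:3e:00:00:0b"}  # assumed to come from Neutron
request = struct.pack(
    ARP_FMT, 1, 0x0800, 6, 4, ARP_REQUEST,
    mac_to_bytes("fa:16:3e:00:00:0a"), socket.inet_aton("10.0.0.5"),
    b"\x00" * 6, socket.inet_aton("10.0.0.6"))
reply = build_arp_reply(request, table)
```

The reply would then be sent back out of the port on which the request arrived, so the broadcast never has to be forwarded to other hosts.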

Implementation

We are currently writing and testing this application as an ODL Helium Karaf feature, wired up between the Neutron northbound and the OVSDB southbound services. We are looking forward to writing more about this topic and the nitty-gritty of implementing an ODL application in an OpenStack environment.