If you have ever tried to implement high availability in OpenStack using Pacemaker, you may have been disappointed by Pacemaker’s slow recovery speed. Pacemaker can take a long time to recover OpenStack services, and even worse, it sometimes detects outages that have not occurred. As a result, Pacemaker starts unnecessary, computationally intensive recovery actions, which are slow and reduce OpenStack’s availability. This article describes why Pacemaker recovery actions are sometimes slow and what can be done about it.

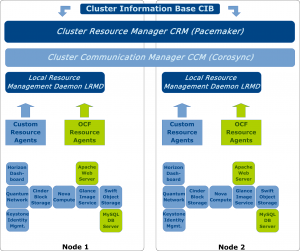

Pacemaker is distributed software that monitors and controls the execution of programs or services on different computers in a cluster. The controlled services are called “resources”, and Pacemaker needs a “resource agent” interface in order to manage a resource. Resource management actions are performed by programs that run locally on each computer of the cluster: the “Local Resource Management Daemons” (LRMDs). LRMDs can monitor the execution of services and restart them in case of failure. The LRMD actions are orchestrated by the “Cluster Resource Manager” (CRM). LRMDs know how to manage resources (from the resource agent specifications), but they do not monitor, stop or restart local services autonomously: the CRM has to tell them which actions to perform and at what interval. The CRM is configured through an XML document that is shared across the cluster: the “Cluster Information Base” (CIB). The CIB contains all the information needed to orchestrate the LRMD actions. Communication between the CRM and the LRMDs is handled by a “Cluster Communication Manager” (CCM). Typical CCMs used in combination with Pacemaker are Corosync and Heartbeat.

OpenStack can be made highly available by installing redundant OpenStack services (Keystone, Nova, Glance etc.) on different machines and letting Pacemaker control the execution of those services. Custom resource agents must be installed so that the LRMDs can manage OpenStack resources. The CIB must then be configured so that the CRM can orchestrate the LRMD actions. An example of such an OpenStack HA architecture using Pacemaker is shown in Fig. 1.
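
To make the CIB step more concrete, here is a minimal Python sketch that builds the kind of XML fragment the CIB holds for one service: a clone (so that the service can run on several nodes at once) wrapping a primitive with a recurring monitor operation. The element and attribute names follow the Pacemaker CIB schema; the resource IDs, the `openstack` provider, the `keystone` agent name and the interval values are illustrative assumptions, not values taken from a real deployment.

```python
import xml.etree.ElementTree as ET


def build_cloned_service(name, provider, agent, interval="10s", timeout="30s"):
    """Return a CIB <clone> element wrapping one OCF primitive with a monitor op."""
    clone = ET.Element("clone", {"id": f"cl_{name}"})
    primitive = ET.SubElement(
        clone,
        "primitive",
        {"id": f"p_{name}", "class": "ocf", "provider": provider, "type": agent},
    )
    operations = ET.SubElement(primitive, "operations")
    # The recurring monitor operation is what tells the LRMD how often to check.
    ET.SubElement(
        operations,
        "op",
        {
            "id": f"p_{name}-monitor-{interval}",
            "name": "monitor",
            "interval": interval,
            "timeout": timeout,
        },
    )
    return clone


if __name__ == "__main__":
    # Provider and agent names are assumptions; use the agents you installed.
    element = build_cloned_service("keystone", "openstack", "keystone")
    if hasattr(ET, "indent"):  # pretty-printing is available from Python 3.9
        ET.indent(element)
    print(ET.tostring(element, encoding="unicode"))
```

The generated fragment is only a starting point: a real CIB also needs constraints, a virtual IP resource and cluster-wide properties, which are left out here.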

Why is Pacemaker slow?

Pacemaker failover actions can be very slow. There are several possible reasons why recovering OpenStack with Pacemaker takes so long. The most common ones are:

- Suboptimal initialization scripts: By default, OpenStack services do not write their process ID (pid) to a pid file. Pacemaker is therefore not able to identify OpenStack services as manageable resources. Some customization is necessary to make OpenStack services Pacemaker-compliant.

- Missing resource agents: No OCF-compliant (Open Cluster Framework) OpenStack resource agents are delivered out of the box. Without them, Pacemaker’s Local Resource Management Daemons (LRMDs) are not able to manage OpenStack services.

- Bad Cluster Information Base (CIB) configuration: The worst problem is a messy CIB configuration. If, for example, resources are kept in large groups and monitoring intervals are too long to discover outages quickly, recovery will be slow, because Pacemaker has to recover whole resource groups and recovery actions start late.
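
The two CIB problems named in the last point, large groups and long monitoring intervals, can be checked mechanically. The following Python sketch scans a CIB-style XML document and reports both. The sample CIB, the resource names and the two thresholds are made up for illustration, and the interval parser only handles a few common unit suffixes.

```python
import re
import xml.etree.ElementTree as ET

# Hypothetical thresholds; tune them for your own cluster.
MAX_MONITOR_SECONDS = 30
MAX_GROUP_SIZE = 3

# Seconds per unit suffix; only a few common spellings are covered.
UNIT_SECONDS = {"": 1, "s": 1, "sec": 1, "m": 60, "min": 60, "h": 3600, "hr": 3600}

# Made-up example configuration with one oversized group and one slow monitor.
SAMPLE_CIB = """
<cib><configuration><resources>
  <group id="g_openstack">
    <primitive id="p_keystone" class="ocf" provider="openstack" type="keystone">
      <operations><op id="p_keystone-mon" name="monitor" interval="2min"/></operations>
    </primitive>
    <primitive id="p_glance" class="ocf" provider="openstack" type="glance-api">
      <operations><op id="p_glance-mon" name="monitor" interval="10s"/></operations>
    </primitive>
    <primitive id="p_nova" class="ocf" provider="openstack" type="nova-api">
      <operations><op id="p_nova-mon" name="monitor" interval="10s"/></operations>
    </primitive>
    <primitive id="p_quantum" class="ocf" provider="openstack" type="quantum-server">
      <operations><op id="p_quantum-mon" name="monitor" interval="10"/></operations>
    </primitive>
  </group>
</resources></configuration></cib>
"""


def interval_seconds(text):
    """Convert an interval such as '10', '30s' or '2min' to seconds."""
    match = re.fullmatch(r"\s*(\d+)\s*([a-z]*)\s*", text.lower())
    if match is None or match.group(2) not in UNIT_SECONDS:
        raise ValueError(f"unsupported interval: {text!r}")
    return int(match.group(1)) * UNIT_SECONDS[match.group(2)]


def audit_cib(xml_text):
    """Return a list of human-readable findings for the given CIB XML."""
    root = ET.fromstring(xml_text.strip())
    findings = []
    for group in root.iter("group"):
        members = group.findall("primitive")
        if len(members) > MAX_GROUP_SIZE:
            findings.append(f"group {group.get('id')} has {len(members)} members")
    for primitive in root.iter("primitive"):
        for op in primitive.iter("op"):
            if op.get("name") != "monitor":
                continue
            seconds = interval_seconds(op.get("interval", "0"))
            if seconds > MAX_MONITOR_SECONDS:
                findings.append(f"{primitive.get('id')} is monitored every {seconds}s")
    return findings


if __name__ == "__main__":
    for finding in audit_cib(SAMPLE_CIB):
        print(finding)
```

On a live cluster the XML could come from a dump of the running CIB instead of the embedded sample; the audit logic stays the same.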

What can be done to make Pacemaker faster?

The first and most important step towards faster Pacemaker recovery is to identify the cause of the slowness. Once you have done that, you can take one or more of the following actions:

- Optimize initialization scripts: Depending on your init system (SysV init, Upstart, systemd), you must customize the startup of services so that they generate pid files, which help Pacemaker to identify the service on the system. The configuration files in /etc/init are Upstart job definitions, so on Ubuntu the OpenStack services are started by Upstart. If you run OpenStack on Ubuntu, you must customize these job definitions so that pid files are generated automatically. For the quantum server, for example, you have to extend the /etc/init/quantum-server.conf file with several lines that tell Upstart to create a pid file and place it in a specified folder (typically /var/run). The pid file can be created with start-stop-daemon, using its --make-pidfile and --pidfile options. For more information on start-stop-daemon, read its manpage.

- Create custom resource agents: No OpenStack resource agents are delivered out of the box, but you can write your own. Resource agents must be placed in the /usr/lib/ocf/resource.d/ folder. They must implement actions to monitor, start and stop the service, as well as an action that reports the execution status of the service. Some good examples of OpenStack resource agents can be found on the Hastexo website.

- Improve Cluster Information Base (CIB) configuration: Most improvements can be achieved by changing the CIB configuration. Ideally, OpenStack services should run redundantly at the same time on two different OpenStack nodes that are reachable through a shared virtual IP. If a service fails on one node, Pacemaker just has to route traffic to the node where the service is still running. If the service is not already running on the fallback node before the failure occurs, Pacemaker has to start the service on at least one of the nodes. Redirecting traffic is usually much faster than starting whole services. Therefore, redundant nodes must always keep redundant OpenStack services up and running. It is really important to ensure that parallel execution of redundant services is configured in the CIB.
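
The link between pid files and resource agents can be illustrated with a small sketch of the monitor action. It is written in Python for readability and is not a complete agent: start, stop and meta-data are omitted, and the pid file path is a hypothetical example that must match whatever your init job actually writes. The numeric exit codes are the ones defined by the OCF resource agent API.

```python
import os

# Exit codes defined by the OCF resource agent API.
OCF_SUCCESS = 0
OCF_ERR_UNIMPLEMENTED = 3
OCF_NOT_RUNNING = 7

# Hypothetical path; it must match the pid file your init job creates.
PIDFILE = "/var/run/quantum-server.pid"


def monitor(pidfile=PIDFILE):
    """Report whether the process named in the pid file is alive."""
    try:
        with open(pidfile) as handle:
            pid = int(handle.read().strip())
    except (OSError, ValueError):
        return OCF_NOT_RUNNING  # pid file missing or its content is not a number
    if pid <= 0:
        return OCF_NOT_RUNNING  # never signal process groups by accident
    try:
        os.kill(pid, 0)  # signal 0 only checks that the process exists
    except ProcessLookupError:
        return OCF_NOT_RUNNING  # stale pid file: the process is gone
    except PermissionError:
        return OCF_SUCCESS  # the process exists but belongs to another user
    return OCF_SUCCESS


def main(argv):
    """Dispatch the action name that Pacemaker passes as the first argument."""
    action = argv[1] if len(argv) > 1 else ""
    if action in ("monitor", "status"):
        return monitor()
    # A real agent must also implement start, stop and meta-data.
    return OCF_ERR_UNIMPLEMENTED

# A real agent script would end with: sys.exit(main(sys.argv))
```

Without a pid file the monitor action has nothing reliable to check, which is why the init scripts need to be fixed before a resource agent like this can work.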

If you improve OpenStack initialization scripts, optimize OpenStack resource agents and improve the CIB configuration, Pacemaker should be a great tool to make OpenStack services highly available.
