In our previous blog post we presented an overview of Nova Cells, describing its architecture and how a basic configuration can be set up. After some further investigation it is clear why Cells is still considered experimental and unstable: some basic operations, e.g. floating IP association, are not yet supported, and there are inconsistencies in the management of security groups between API and Compute Cells. Here, we focused on using only the key projects in OpenStack, i.e. nova, glance and keystone, and avoided adding extra complexity to the system; for this reason legacy networking (nova-network) was chosen instead of Neutron, as Neutron is generally more complex and we had seen problems reported between Neutron and Cells. In this blog post we describe our experience enabling floating IPs in an OpenStack Cells architecture using nova-network, which required making some small modifications to the nova Python libraries.

The default installation of nova-network in this setup requires a private network in each Compute Cell, which can be created via nova-manage commands; the network-related information is stored only within the Compute Cells and is outside the scope of the API Cell. Floating IPs, however, are managed in the API Cell, and although it is possible to allocate them to a specific project, associating a floating IP with a VM fails. We were not satisfied with this and, consequently, looked into how to fix it.

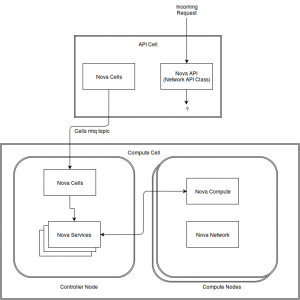

Fixing this is far from trivial: first it was necessary to understand the call flow between the API Cell and the Compute Cell. As a starting point, information in the log files and the content of this blog post led us to the first call made by nova-api; we then followed the call flow to determine how the mechanism worked. Generally (see the figure above), requests are received by nova-api and passed to the nova-cells service in the API Cell. Nova-cells determines which cell the request should be routed to and passes it to the nova-cells service within the appropriate Compute Cell, which puts it on the Compute Cell message bus to be picked up by the appropriate nova service. The nova service then acts on the compute nodes.
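The hops described above can be sketched as a toy model. This is only an illustration of the routing idea, not nova code: the class names, the `hops` trace and the cell names `cell1` and `cell2` are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Message:
    """A request travelling from nova-api towards a Compute Cell (toy model)."""
    method: str
    target_cell: str
    hops: list = field(default_factory=list)


class ComputeCellService:
    """Hypothetical stand-in for nova-cells plus the message bus in a Compute Cell."""

    def __init__(self, name):
        self.name = name

    def deliver(self, msg):
        msg.hops.append(f"nova-cells@{self.name}")
        # The appropriate nova service picks the message up from the cell's bus.
        msg.hops.append(f"nova-compute@{self.name}")
        return msg


class ApiCellService:
    """Hypothetical stand-in for nova-cells in the API Cell: selects the target cell."""

    def __init__(self, children):
        self.children = {child.name: child for child in children}

    def route(self, msg):
        msg.hops.append("nova-cells@api")
        if msg.target_cell not in self.children:
            raise LookupError(f"unknown cell: {msg.target_cell}")
        return self.children[msg.target_cell].deliver(msg)


def nova_api_request(api_cell, method, target_cell):
    """Entry point: the request arrives at nova-api and is handed to nova-cells."""
    msg = Message(method=method, target_cell=target_cell)
    msg.hops.append("nova-api")
    return api_cell.route(msg)


api_cell = ApiCellService([ComputeCellService("cell1"), ComputeCellService("cell2")])
result = nova_api_request(api_cell, "suspend", "cell2")
print(" -> ".join(result.hops))
```

The printed trace lists each service the request passes through, in the same order as the description above.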

It is noteworthy that the API Cell has a special configuration option in nova.conf which replaces the standard compute API class in nova-api with the ComputeCellsAPI class. This means that the same nova-api code can operate in a cell configuration as well as in a standard controller configuration. The ComputeCellsAPI class handles calls, for example, to boot, suspend and terminate VMs: it then pushes the instruction to the API Cell message bus with the ‘cells’ topic, where it is received by the API Cell nova-cells service.
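To illustrate the idea of swapping the compute API class through configuration, here is a minimal sketch. It is not the nova implementation: the two classes are simplified stand-ins, the `KNOWN_CLASSES` registry and `build_compute_api` helper are hypothetical, and the option key `compute_api_class` is our assumption about how the option is named.

```python
class ComputeAPI:
    """Simplified stand-in for the standard compute API: acts locally."""

    def __init__(self, bus):
        self.bus = bus

    def suspend(self, instance_id):
        return f"local: suspend {instance_id}"


class ComputeCellsAPI(ComputeAPI):
    """Simplified stand-in for the cells-aware class: forwards on the 'cells' topic."""

    def suspend(self, instance_id):
        # Instead of acting locally, publish the instruction for nova-cells.
        self.bus.append(("cells", "suspend", instance_id))
        return f"forwarded: suspend {instance_id}"


# Hypothetical registry; a real service would resolve a dotted class path instead.
KNOWN_CLASSES = {"ComputeAPI": ComputeAPI, "ComputeCellsAPI": ComputeCellsAPI}


def build_compute_api(conf, bus):
    """Pick the compute API class named in the configuration (assumed option key)."""
    class_name = conf.get("compute_api_class", "ComputeAPI")
    return KNOWN_CLASSES[class_name](bus)


bus = []
controller_api = build_compute_api({}, bus)
cells_api = build_compute_api({"compute_api_class": "ComputeCellsAPI"}, bus)

print(controller_api.suspend("vm-1"))
print(cells_api.suspend("vm-1"))
print(bus)
```

The calling code is identical in both cases; only the configured class decides whether the operation is handled locally or published for the cells service.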

The problem we encountered is that this design does not have specific support for network functions: the mechanism for capturing compute operations and rerouting them to the cells functions is not replicated for network functions. More specifically, there is no analog for networking in which calls to the NetworkAPI are replaced by calls to a NetworkCellsAPI. The picture is made murkier by the fact that the ComputeCellsAPI has support for some basic networking functions, including floating IP association, but as network functions are dealt with slightly differently, these are never exercised.

To enable floating IP operations in this setup, two modifications to the nova Python libraries were required. First, we had to map the call to the network API to the analogous call to the compute API, which involved correcting the parameters of the call. This had the effect of directing network-related calls to the network-related functions of the ComputeCellsAPI with the proper parameters, which enabled the call to be passed on to the appropriate Compute Cell. The second change was to the standard compute API class within the Compute Cell: the API had to be extended to support the floating IP associate operation that is supported by the ComputeCellsAPI. This routed the call back to the network API within the Compute Cell, which ultimately performed the floating IP association.
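The shape of the two changes can be sketched with toy classes. This is an illustration under our own assumptions, not the actual patch: every class name, method signature and parameter below is hypothetical and only mirrors the forwarding chain described above.

```python
class CellNetworkAPI:
    """Toy network API inside the Compute Cell: performs the association."""

    def __init__(self):
        self.associations = {}

    def associate_floating_ip(self, context, floating_address, fixed_address):
        self.associations[floating_address] = fixed_address


class CellComputeAPI:
    """Toy compute API inside the Compute Cell, extended as in the second change."""

    def __init__(self, network_api, fixed_ips):
        self.network_api = network_api
        self.fixed_ips = fixed_ips  # instance uuid -> fixed IP

    def associate_floating_ip(self, context, instance_uuid, address):
        # Route the call back to the network API local to this cell.
        fixed = self.fixed_ips[instance_uuid]
        self.network_api.associate_floating_ip(context, address, fixed)


class ToyComputeCellsAPI:
    """Toy API Cell class: forwards to the compute API of the owning cell."""

    def __init__(self, cells, instance_to_cell):
        self.cells = cells
        self.instance_to_cell = instance_to_cell

    def associate_floating_ip(self, context, instance_uuid, address):
        cell_name = self.instance_to_cell[instance_uuid]
        self.cells[cell_name].associate_floating_ip(context, instance_uuid, address)


class ApiCellNetworkAPI:
    """First change: remap the network call onto the compute call."""

    def __init__(self, compute_cells_api):
        self.compute_cells_api = compute_cells_api

    def associate_floating_ip(self, context, instance_uuid, floating_address,
                              fixed_address=None):
        # The compute-side call takes (instance, address); the fixed IP is
        # resolved later inside the Compute Cell, so it is dropped here.
        self.compute_cells_api.associate_floating_ip(
            context, instance_uuid, floating_address)


cell_network = CellNetworkAPI()
cell_compute = CellComputeAPI(cell_network, {"vm-1": "10.0.0.5"})
cells_api = ToyComputeCellsAPI({"cell1": cell_compute}, {"vm-1": "cell1"})
api_network = ApiCellNetworkAPI(cells_api)

api_network.associate_floating_ip({}, "vm-1", "203.0.113.10")
print(cell_network.associations)
```

In this sketch the API Cell never touches the fixed IP: it only identifies the instance and the floating address, and the Compute Cell resolves the rest.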

Having made those changes, a couple of other steps must be performed manually:

- Create the floating IPs in both the Compute Cell and the API Cell via nova-manage commands

- Update the floating IP table in the Compute Cell database, changing the project_id field to the user's tenant ID
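The database step can be sketched as a single parameterized UPDATE. The snippet below uses an in-memory SQLite database purely as a stand-in for the Compute Cell database; the table name `floating_ips`, its columns, the address and the tenant ID are assumptions for illustration and should be checked against the real schema before running anything similar.

```python
import sqlite3


def assign_floating_ip_to_project(conn, address, project_id):
    """Set project_id on one floating IP row; returns the number of rows changed."""
    cursor = conn.execute(
        "UPDATE floating_ips SET project_id = ? WHERE address = ?",
        (project_id, address),
    )
    conn.commit()
    return cursor.rowcount


# Stand-in database with an assumed, heavily simplified schema.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE floating_ips (address TEXT PRIMARY KEY, project_id TEXT)"
)
conn.execute("INSERT INTO floating_ips (address) VALUES (?)", ("203.0.113.10",))

updated = assign_floating_ip_to_project(conn, "203.0.113.10", "demo-tenant-id")
row = conn.execute(
    "SELECT project_id FROM floating_ips WHERE address = ?", ("203.0.113.10",)
).fetchone()
print(updated, row[0])
```

Checking the returned row count is a cheap way to confirm that the address actually exists in the table before relying on the association.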

This small hack, which took some time to figure out, enables floating IP association with a given instance in a specific Compute Cell. Many aspects have not been tested yet and clearly this solution is not robust: the API Cell does not know about the new floating IP association, so the floating IP still appears as available in the dashboard even though it is actually associated within the Compute Cell and can be used to ping and ssh into the VM.

We are still investigating whether Cells meets the requirements for our pilot deployment, and as a next step we will look at whether Neutron networking offers better support for the system, so stay tuned: there’s much more to come!