As noted elsewhere, we’re looking at Rancher in the context of one of our projects. We’ve been doing some work on enabling it to work over heterogeneous compute infrastructures, one of which could be an ARM-based edge device and another a standard x86_64 cloud execution environment. Some of our colleagues were asking how the networking works. We had not looked into this in much detail, so we decided to find out, and it turns out it’s pretty complex.

We were working with the standard environment which Rancher supports, so-called Cattle, which uses docker-machine to set up hosts and provides its own networking solution. Rancher also supports Docker Swarm and Kubernetes (as well as other management systems such as Mesos and Windows); the network configuration for those environments is likely different.

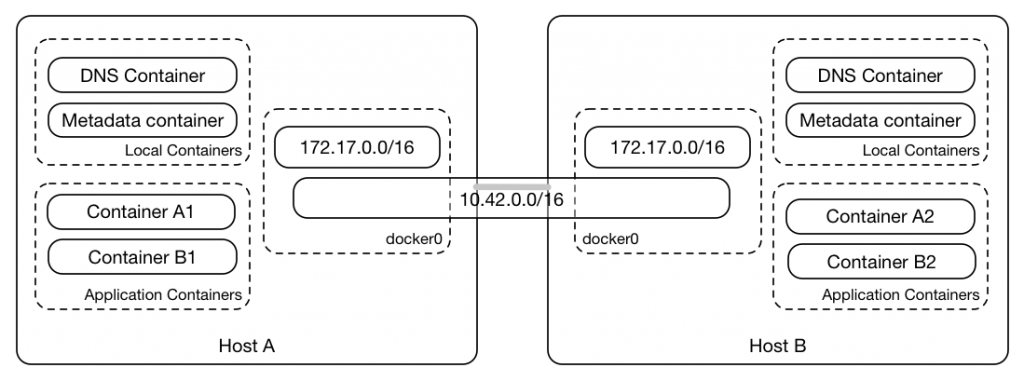

The basic networking concept employed in the standard Rancher/Cattle configuration is illustrated in Figure 1. There is a single, flat /16 network which is used for all containers within a single Environment. By default this is the 10.42.0.0/16 network, and it spans all the hosts within the Environment.
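To get a feel for what a single flat /16 means in practice, here is a minimal sketch using Python's standard `ipaddress` module. The network is the default mentioned above; the helper name and the sample addresses are ours, made up purely for illustration.

```python
import ipaddress

# Default flat container network described above.
MANAGED_NET = ipaddress.ip_network("10.42.0.0/16")


def in_managed_network(addr: str) -> bool:
    """Return True if addr falls inside the flat container network."""
    return ipaddress.ip_address(addr) in MANAGED_NET


# Total size of the address block shared by every host in the Environment.
print(MANAGED_NET.num_addresses)

# Hypothetical addresses: one container address, one host-local address.
print(in_managed_network("10.42.17.5"))
print(in_managed_network("172.17.0.2"))
```

Because the block is shared across all hosts, a container address says nothing about which host the container runs on; that mapping has to be maintained separately.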

Figure 1: Basic overview of network infrastructure in Rancher – there is a single flat network for all containers and each node has its own internal network; these networks both operate within the docker0 bridge in the nodes.

Each host also has its own internal network, by default 172.17.0.0/16. This is used by the services which are local to each host in the Environment, for example the internal DNS server and a metadata server. Because every host reuses the same address range, it is not possible to route from the 172.17.0.0 network on one host to the 172.17.0.0 network on another host.

Both of these networks operate within a single Linux bridge, by default the docker0 bridge. Routing between them is possible: traffic from one network to the other is terminated on the bridge and passed to the docker host kernel, where the routing table sends it back to the same bridge. It is then forwarded on to the appropriate destination container.
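The path taken on a single host can be sketched as a small decision function. This is only a toy model of the behaviour described above, not Rancher code: the function names and return strings are invented, and we assume that two endpoints in the same network on the same host are simply switched on the bridge.

```python
import ipaddress

MANAGED_NET = ipaddress.ip_network("10.42.0.0/16")     # flat container network
HOST_LOCAL_NET = ipaddress.ip_network("172.17.0.0/16")  # per-host internal network


def network_of(addr: str) -> str:
    """Classify an address as 'managed', 'host-local' or 'external'."""
    ip = ipaddress.ip_address(addr)
    if ip in MANAGED_NET:
        return "managed"
    if ip in HOST_LOCAL_NET:
        return "host-local"
    return "external"


def same_host_path(src: str, dst: str) -> str:
    """Describe how traffic between two endpoints on ONE host is delivered."""
    src_net, dst_net = network_of(src), network_of(dst)
    if "external" in (src_net, dst_net):
        raise ValueError("this sketch only covers the two docker0 networks")
    if src_net == dst_net:
        # Assumption: same subnet on the same bridge, so no routing is needed.
        return "switched on docker0"
    # Different subnets: up to the host kernel, routed, and back to the bridge.
    return "docker0 -> host kernel routing table -> docker0"


print(same_host_path("10.42.17.5", "172.17.0.2"))
print(same_host_path("10.42.17.5", "10.42.99.1"))
```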

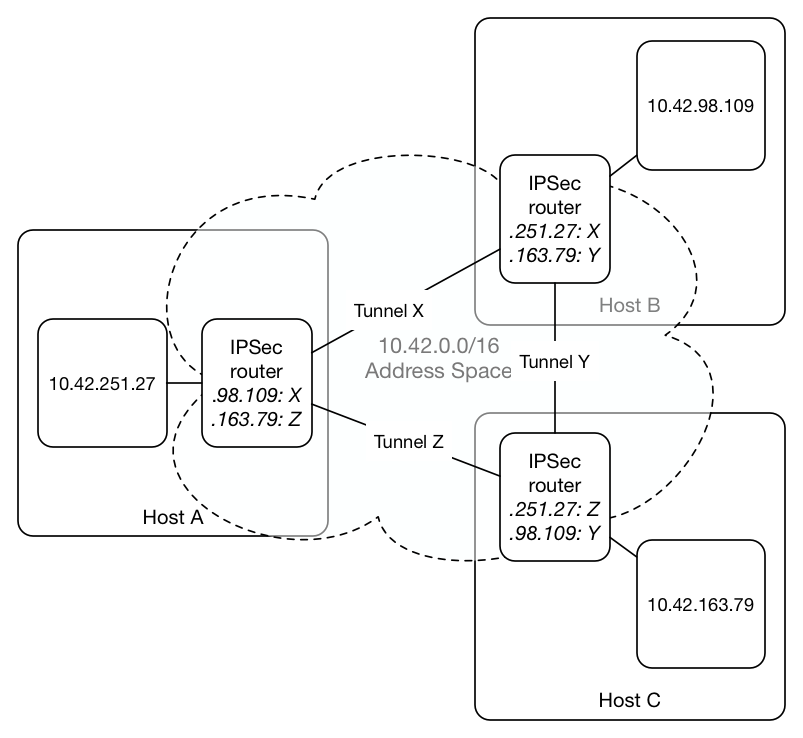

Figure 2: IPSec router configuration in Rancher/Cattle – all nodes in the Environment are connected via a fully connected mesh; all routers contain routing tables with entries for all other containers in the system, pointing at the tunnel that connects to the host on which the container is running.

Hosts are connected to each other via IPSec tunnels: Rancher sets up such tunnels between all nodes within the Environment, resulting in a potentially large mesh of tunnel connections. At each end of a tunnel there is a router, running inside a specific container, which determines how traffic should be routed. When a new container is added to the system, the IPSec routers on all the other nodes are notified and the new container is added to their routing tables via ip xfrm policy mechanisms.

These IPSec routers also have a specific ARP-related configuration such that they respond to ARP messages for any hosts that are in their routing tables (see here for more details). As their routing tables contain entries for all containers on the other hosts, they respond to ARP requests for any container that is not on the local node. In this way, the IPSec router becomes the next hop when a container needs to communicate with a container on a different node.

The IPSec router uses its policy to determine which tunnel should carry the packet. On the destination node the packet is passed into the docker0 bridge, where ARP resolution enables it to find its way to its ultimate destination. The basic configuration of the IPSec routers can be seen in Figure 2 above.
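The behaviour of one of these routers can be modelled in a few lines. What follows is a toy model under our own assumptions, not Rancher's implementation: the class, its methods, the host names and the addresses are all hypothetical, and a plain dictionary stands in for the real ip xfrm policies.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class ToyIPSecRouter:
    """Toy stand-in for the per-host IPSec router container."""
    host: str
    # container IP -> name of the remote host it runs on (one tunnel per host)
    routes: Dict[str, str] = field(default_factory=dict)

    def container_added(self, container_ip: str, on_host: str) -> None:
        """Notification that a container started somewhere in the Environment."""
        if on_host != self.host:
            # Only remote containers need an entry; local ones sit on docker0.
            self.routes[container_ip] = on_host

    def answers_arp_for(self, ip: str) -> bool:
        """The router answers ARP for every address it has an entry for."""
        return ip in self.routes

    def tunnel_for(self, ip: str) -> Optional[str]:
        """Pick the tunnel (identified by remote host) for a destination."""
        return self.routes.get(ip)


router_a = ToyIPSecRouter(host="host-a")
router_a.container_added("10.42.1.10", on_host="host-a")  # local container
router_a.container_added("10.42.2.20", on_host="host-b")  # remote container

print(router_a.answers_arp_for("10.42.2.20"))  # remote: router claims it
print(router_a.answers_arp_for("10.42.1.10"))  # local: left to the container
print(router_a.tunnel_for("10.42.2.20"))
```

The point of the model is the asymmetry: the router only claims addresses that live elsewhere, so local traffic never leaves the bridge while everything else is drawn to the router and into a tunnel.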

It is worth noting that the above flat network configuration enables all containers in the Environment to communicate with each other – there is no specific isolation between applications running within the Environment.

Understanding the network configuration within Rancher took some time. It is complex and clearly not suited to all use cases: because every host maintains a tunnel to every other host, the solution cannot scale to very large numbers of hosts in an Environment, although in most cases the number of hosts in an Environment is unlikely to get that large. Troubleshooting or debugging networking issues is always tricky, and doing so in this context is even more so.
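The scaling concern follows directly from the full mesh: with n hosts there is one tunnel per pair of hosts, so n(n-1)/2 tunnels in total. A quick sketch of how that grows (the function name and the host counts are arbitrary examples of ours):

```python
def mesh_tunnels(hosts: int) -> int:
    """Number of tunnels in a fully connected mesh of `hosts` nodes."""
    if hosts < 0:
        raise ValueError("hosts must be non-negative")
    return hosts * (hosts - 1) // 2


for n in (3, 10, 100):
    # Each host terminates n - 1 tunnels; the mesh as a whole has n(n-1)/2.
    print(n, "hosts:", n - 1, "tunnels per host,", mesh_tunnels(n), "in total")
```

The per-host count grows linearly, but the total grows quadratically, and on top of that every router carries an entry for every container on every other host.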

With some understanding of the basic Rancher/Cattle networking, we’re now going to turn our attention to Swarm and see how networking works in this context and, in particular, how it integrates with Rancher.
