[Update 8.12.2014] Since OpenStack’s Juno release hasn’t introduced any changes regarding live migration, Juno users should be able to follow this tutorial as well as the Icehouse users. If you experience any issues let us know. The same setup can be used for newer versions of QEMU and Libvirt as well. Currently we are using QEMU 2.1.5 with Libvirt 1.2.11.

The Green IT theme here in ICCLab is working on monitoring and reducing datacenter energy consumption by leveraging OpenStack’s live migration feature. We’ve already experimented a little with live migration in the Havana release (mostly with no luck), but since live migration is touted as one of the new stable features in the Icehouse release, we decided to investigate how it has evolved. This blog post, largely based on the official OpenStack documentation, provides a step-by-step walkthrough of how to set up and perform virtual machine live migration with servers running the OpenStack Icehouse release and the KVM/QEMU hypervisor with libvirt.

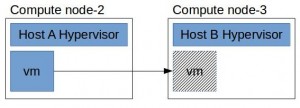

Virtual machine (VM) live migration is a process in which a VM instance, comprising its state, memory and emulated devices, is moved from one hypervisor to another with ideally no downtime. It can come in handy in many situations, such as basic system maintenance, VM consolidation and more complex load management systems designed to reduce data center energy consumption. The following system configuration was used for our testing:

- 3 nodes: 1 control node (node-1), 2 compute nodes (node-2, node-3)

- Mirantis Openstack 5.0 (which contains a set of sensible deployment options and the Fuel deployment tool)

- Openstack Icehouse release

- Nova 2.18.1

- QEMU 1.2.1

- Libvirt 0.10.2

The default OpenStack live migration process requires a file system shared between the source and destination compute hosts (e.g. NFS, GlusterFS), so that the VM’s disk is accessible from both hosts. OpenStack also supports “block live migration”, where the VM disk is copied over TCP and hence no shared file system is needed. A shared file system usually ensures better migration performance, while the block migration approach provides better security due to file system separation.

System setup

1. Network configuration

Make sure all hosts (hypervisors) run in the same network/subnet.

1.1. DNS configuration

Check that the /etc/hosts file is configured correctly and consistently across all hosts.

192.168.0.2 node-1 node-1.domain.tld

192.168.0.3 node-2 node-2.domain.tld

192.168.0.4 node-3 node-3.domain.tld
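With more than a handful of hosts, comparing the files by eye gets error-prone. The following is a minimal Python sketch of such a consistency check, assuming you have already collected the /etc/hosts text from each node (for example over SSH). The function names parse_hosts and inconsistent_names are our own and not part of any OpenStack tool.

```python
def parse_hosts(text):
    """Map every hostname and alias in an /etc/hosts-style text to its IP."""
    mapping = {}
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments and blank lines
        if not line:
            continue
        ip, *names = line.split()
        for name in names:
            mapping[name] = ip
    return mapping


def inconsistent_names(hosts_by_node):
    """Return names that are missing on some node or map to different IPs.

    hosts_by_node maps a node label to the text of that node's /etc/hosts.
    """
    parsed = {node: parse_hosts(text) for node, text in hosts_by_node.items()}
    all_names = set().union(*parsed.values())
    bad = set()
    for name in all_names:
        # A missing entry shows up as None, so it also counts as a mismatch.
        ips = {mapping.get(name) for mapping in parsed.values()}
        if len(ips) != 1:
            bad.add(name)
    return sorted(bad)


if __name__ == "__main__":
    sample = "192.168.0.2 node-1 node-1.domain.tld\n192.168.0.3 node-2 node-2.domain.tld\n"
    print(inconsistent_names({"node-2": sample, "node-3": sample}))
```

An empty result means every name resolves to the same address on every node that was checked.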

1.2. Firewall configuration

Configure the /etc/sysconfig/iptables file to allow libvirt to listen on TCP port 16509, and don’t forget to add a rule accepting KVM communication on TCP ports in the range from 49152 to 49261.

-A INPUT -p tcp -m multiport --ports 16509 -m comment --comment "libvirt" -j ACCEPT

-A INPUT -p tcp -m multiport --ports 49152:49261 -m comment --comment "migration" -j ACCEPT

2. Libvirt configuration

Enable the libvirt listen flag in the /etc/sysconfig/libvirtd file.

LIBVIRTD_ARGS="--listen"

Configure the /etc/libvirt/libvirtd.conf file to make the hypervisor listen for TCP connections without authentication. Since authentication is set to “none”, it’s strongly recommended to use SSH keys for authentication.

listen_tls = 0

listen_tcp = 1

auth_tcp = "none"
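Once libvirtd has been restarted with these settings, a quick way to confirm that the firewall and listen configuration work together is to try a TCP connection to port 16509 from the other compute node. The helper below is only a sketch; the name tcp_port_open is ours, and node-3 in the usage line is simply the second compute node from the setup above.

```python
import socket


def tcp_port_open(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port can be established."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, unreachable hosts
        # and names that do not resolve.
        return False


if __name__ == "__main__":
    # Run this on node-2 to check that libvirt on node-3 is reachable.
    print("libvirt reachable:", tcp_port_open("node-3", 16509))
```

A False result does not say which layer is at fault, so it is still worth checking name resolution, iptables and the libvirtd listen flag one by one.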

3. Nova configuration

OpenStack doesn’t use a true live migration mechanism by default, because there is no guarantee that the migration will succeed. An example of a situation in which a migration never ends is one in which memory pages are dirtied faster than they are transferred to the destination host.
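To make the non-terminating case more concrete, here is a deliberately simplified Python model of iterative pre-copy. It is not how QEMU actually decides when to stop; the function name, the parameters and the 50 MB stop threshold are all illustrative assumptions. Each round sends whatever is currently dirty, and while it is being sent the guest dirties more memory.

```python
def precopy_rounds(ram_mb, bandwidth_mb_s, dirty_mb_s, stop_mb=50.0, max_rounds=30):
    """Count pre-copy rounds until the leftover dirty memory is small enough
    to be copied during the final pause; return None if that never happens
    within max_rounds (toy model, all numbers in MB and MB/s)."""
    remaining = float(ram_mb)
    for round_no in range(1, max_rounds + 1):
        seconds = remaining / bandwidth_mb_s           # time to send this round
        remaining = min(ram_mb, dirty_mb_s * seconds)  # memory dirtied meanwhile
        if remaining <= stop_mb:
            return round_no
    return None


if __name__ == "__main__":
    print(precopy_rounds(4096, 100, 10))   # guest dirties memory slowly
    print(precopy_rounds(4096, 100, 120))  # guest dirties memory faster than the link
```

In this model the leftover shrinks by the ratio of dirty rate to bandwidth every round, so it only converges when the guest dirties memory more slowly than the network can carry it.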

To enable true live migration, set the live_migration_flag option in the /etc/nova/nova.conf file as follows:

live_migration_flag=VIR_MIGRATE_UNDEFINE_SOURCE,VIR_MIGRATE_PEER2PEER,VIR_MIGRATE_LIVE
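If you manage several compute nodes, it can be handy to verify that each nova.conf really carries all three flags. The sketch below uses Python’s standard configparser; the helper name missing_migration_flags is ours, and it searches every section because we do not state above which section holds the option.

```python
import configparser

REQUIRED_FLAGS = {
    "VIR_MIGRATE_UNDEFINE_SOURCE",
    "VIR_MIGRATE_PEER2PEER",
    "VIR_MIGRATE_LIVE",
}


def missing_migration_flags(conf_text):
    """Return the required flags not found in any live_migration_flag option."""
    # interpolation=None keeps logging format strings such as %(color)s intact.
    parser = configparser.ConfigParser(interpolation=None, strict=False)
    parser.read_string(conf_text)
    configured = set()
    for section in ["DEFAULT"] + parser.sections():
        value = parser.get(section, "live_migration_flag", fallback="")
        configured.update(flag.strip() for flag in value.split(",") if flag.strip())
    return sorted(REQUIRED_FLAGS - configured)


if __name__ == "__main__":
    with open("/etc/nova/nova.conf") as handle:
        print(missing_migration_flags(handle.read()))
```

An empty list means all three flags are present. Remember that nova-compute has to be restarted before a changed nova.conf takes effect.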

Once these settings are configured, it should be possible to perform a live migration.

Live Migration Execution

First, list available VMs:

$ nova list

+-------------------------+------+--------+-------------+---------------+

| ID | Name | Status | Power State | Networks |

+-------------------------+------+--------+-------------+---------------+

| 4cfe0dfb-f28f-43e9-.... | vm | ACTIVE | Running | 10.0.0.2 |

+-------------------------+------+--------+-------------+---------------+

Next, show the VM details and determine which host the instance is running on:

$ nova show <VM-ID>

+--------------------------------------+--------------------------------+

| Property | Value |

+--------------------------------------+--------------------------------+

| OS-EXT-SRV-ATTR:host | node-2.domain.tld |

+--------------------------------------+--------------------------------+

After that, list the available compute hosts and choose the host you want to migrate the instance to:

$ nova host-list

+-------------------+-------------+----------+

| host_name | service | zone |

+-------------------+-------------+----------+

| node-2.domain.tld | compute | nova |

| node-3.domain.tld | compute | nova |

+-------------------+-------------+----------+

Then, migrate the instance to the new host.

For live migration using a shared file system use:

$ nova live-migration <VM-ID> <DEST-HOST-NAME>

For block live migration use the same command with the block_migrate flag enabled:

$ nova live-migration --block_migrate <VM-ID> <DEST-HOST-NAME>

Finally, show the VM details and check if it has been migrated successfully:

$ nova show <VM-ID>

+--------------------------------------+----------------------------+

| Property | Value |

+--------------------------------------+----------------------------+

| OS-EXT-SRV-ATTR:host | node-3.domain.tld |

+--------------------------------------+----------------------------+

Note: If you don’t specify the target compute node explicitly, nova-scheduler chooses a suitable one from the available nodes automatically.
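The three variants (shared storage, block migration, scheduler-chosen destination) differ only in their arguments, which the small Python sketch below makes explicit. The helper live_migration_command is a hypothetical name of ours; it only assembles the argument list for the nova client commands shown above, with the block flag spelled as in this post, and runs nothing by itself.

```python
def live_migration_command(vm_id, dest_host=None, block=False):
    """Build the nova CLI argument list for a live migration."""
    cmd = ["nova", "live-migration"]
    if block:
        cmd.append("--block_migrate")  # block live migration, no shared storage
    cmd.append(vm_id)
    if dest_host:
        cmd.append(dest_host)          # omit to let nova-scheduler pick a host
    return cmd


if __name__ == "__main__":
    print(" ".join(live_migration_command("<VM-ID>", "<DEST-HOST-NAME>", block=True)))
```

The resulting list can be handed to subprocess.run on a machine where the nova client is installed and the usual OS_* credentials are set in the environment.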

Congratulations, you’ve just migrated your VM.

[UPDATE]

If you are more interested in live migration performance in OpenStack you can check out our newer blog posts:

- An analysis of the performance of block live migration in Openstack,

- An analysis of the performance of live migration in Openstack,

- Performance of Live Migration in Openstack under CPU and network load and

- The impact of ephemeral VM disk usage on the performance of Live Migration in Openstack.

Hi,

I have tried to set up OpenStack Icehouse with three different nodes, where one node acts as the controller node and the other two act as compute nodes. I have followed the instructions given here to enable block live migration, but I am getting the following error:

NoLiveMigrationForConfigDriveInLibVirt: Live migration of instances with config drives is not supported in libvirt unless libvirt instance path and drive data is shared across compute nodes.

Thanks for any kind of suggestion.

Hello,

first of all, please note that there are two types of live migration in OpenStack – live migration (LM) and block live migration (BLM).

LM requires a shared file system between the compute nodes, and the instance path should be the same on all of them (default is /var/lib/nova/instances).

If you don’t use a shared file system, make sure that you are running the live migration with the --block-migrate parameter (or check the block migration option in Horizon). We also experienced some issues trying to BLOCK live migrate an instance on SHARED storage, so I would suggest trying BLM only on a NON-SHARED file system first.

Otherwise you might be experiencing the bug described here and can apply the released fix – https://bugs.launchpad.net/nova/+bug/1351002/

Hi,

Thanks for replying. I am trying live migration with the block migrate parameter without sharing any storage, but libvirt is complaining about this “NoLiveMigrationForConfigDriveInLibVirt”. I am wondering, is there any configuration missing in libvirt or QEMU?

Here are our libvirt and QEMU settings:

/etc/libvirt/libvirtd.conf:

listen_tls = 0

listen_tcp = 1

auth_tcp = "none"

/etc/libvirt/qemu.conf:

security_driver="none"

Hope it helps.

Thanks a lot for the config file, but I already have these parameters. I have found the problem in nova.conf: the parameter should be “force_config_drive = None”.

I have one more query. As you already did some tests with live migration, I am wondering how you keep track of the total migration time. How is the migration traffic different from other traffic? The log file is generating lots of messages, so how can I pick the right time?

Do you have some scripts to do the measurements like for calculating the downtime, total data transfer? If possible can you please share with me.

Thanks again for your cordial response.

Hi,

Thanks for the reply. The problem was in the nova.conf file: the parameter force_config_drive needs to be false.

I was wondering how I can keep track of the migration time, as nova is producing lots of lines in the log file and it’s hard to guess the correct time?

Glad that you’ve solved your problem.

Concerning the testing scripts, since all these tests were quite complex and not successful in every case, the best (fastest) option was to keep tracking the results manually. Here are the methods we used.

Migration time:

Migration initiation generates the following record in /var/log/nova-all.log on the DESTINATION host – “Oct 10 12:49:04 node-x nova-nova.virt.libvirt.driver INFO: Instance launched has CPU info:…”

The END of the migration is recorded in the same file on the SOURCE node by the record – “Oct 10 12:49:28 node-x nova-nova.compute.manager INFO: Migrating instance to node-y.domain.tld finished successfully.”

You can simply get the migration time by subtracting these two values, but please note that the “Instance launched” record is generated every time any instance is spawned on the node, not only in the case of VM migration.

You can GREP for Migration or Instance to get only these records.

Downtime:

Downtime is calculated easily – you can just multiply the number of lost packets by the interval between two consecutive packets.
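Both calculations are simple enough to script. The Python sketch below works on the syslog-style timestamps from the two records quoted above; the function names are ours, and the year argument is an assumption that is needed only because these timestamps do not carry a year. For downtime we assume the lost packets come from a fixed-interval ping against the VM.

```python
from datetime import datetime


def migration_seconds(start_stamp, end_stamp, year=2014):
    """Seconds between two syslog-style stamps such as 'Oct 10 12:49:04'.

    The year is an assumption: the log stamps do not include one.
    """
    fmt = "%Y %b %d %H:%M:%S"
    start = datetime.strptime(f"{year} {start_stamp}", fmt)
    end = datetime.strptime(f"{year} {end_stamp}", fmt)
    return (end - start).total_seconds()


def downtime_seconds(lost_packets, ping_interval_s):
    """Estimate downtime as lost packets times the interval between packets."""
    return lost_packets * ping_interval_s


if __name__ == "__main__":
    # Timestamps taken from the two example records above.
    print(migration_seconds("Oct 10 12:49:04", "Oct 10 12:49:28"))  # 24.0
    print(downtime_seconds(4, 0.5))
```

The downtime estimate cannot be finer than the ping interval, so a shorter interval gives a more precise figure.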

Anyway, the idea of having these tests scripted is really tempting and can save some time in our future work. So I guess I’ll code it down. I’ll keep you updated.

Thanks a ton. It saves lots of time for me. Also this blog is very much informative. You did a nice job.

May be I am asking too much but I have one more query as you did BLM and LM, and in one case you mentioned that you have used 5 GB in all the cases, what does it mean? All the instances (small, medium, large, etc.) have openStack configurations except root drive is 5 GB? And what is the size of your VM OS (I mean size of Ubuntu 14.04 in the VM)?

Hello, sorry for the tardy reply. We’ve just published a new blog post that should answer your questions – http://blog.zhaw.ch/icclab/the-impact-of-ephemeral-vm-disk-usage-on-the-performance-of-live-migration-in-openstack/

Thanks, the new blog is also very useful and answers my queries.

Hi, thanks for the article, this is helpful. I tried to follow your instructions, but I got a problem, and this is the log from /var/log/libvirt/libvirtd.log

2014-12-16 04:36:08.505+0000: 1935: info : libvirt version: 1.2.2

2014-12-16 04:36:08.505+0000: 1935: error : virNetSocketNewConnectTCP:484 : unable to connect to server at ‘compute2:16509’: No route to host

2014-12-16 04:36:08.506+0000: 1935: error : doPeer2PeerMigrate:4040 : operation failed: Failed to connect to remote libvirt URI qemu+tcp://compute2/system: unable to connect to server at ‘compute2:16509’: No route to host

Can you help me? thanks before.

Hi, it seems there is no route to the destination machine. Can you ping the destination host from the source (‘ping compute2’)? If not, check whether /etc/hosts contains the record ‘ compute2’ (this file should contain the IPs and hostnames of every host), or try to use the host’s IP address directly instead of the hostname in the configuration files (/etc/nova/nova.conf). Also check the routing configuration on your hosts with the ‘route’ command. Hope it helps.
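Before digging into routing, it helps to confirm what the hostname actually resolves to on the source host. Here is a minimal Python sketch using only the standard library; resolve_ipv4 is a name we made up, and compute2 is the hostname from the log above.

```python
import socket


def resolve_ipv4(name):
    """Return the sorted IPv4 addresses a hostname resolves to, or [] if none."""
    try:
        infos = socket.getaddrinfo(name, None, socket.AF_INET, socket.SOCK_STREAM)
    except socket.gaierror:
        return []
    # Each entry is (family, type, proto, canonname, (address, port)).
    return sorted({info[4][0] for info in infos})


if __name__ == "__main__":
    print(resolve_ipv4("compute2"))
```

An empty list points to a missing /etc/hosts or DNS entry, while an unexpected address points to a stale one. If the address is correct, the problem is more likely in routing or the firewall.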

Hi,

I tried live migration

I get an error like

$nova live-migration bf441c35-d7e4-4ffa-926e-523690bd815d celestial7

ERROR (ClientException): Live migration of instance bf441c35-d7e4-4ffa-926e-523690bd815d to host celestial7 failed (HTTP 500) (Request-ID: req-69bd07e6-3318-4f50-8663-217b98ba7564)

Overview of instance :

Message

Remote error: libvirtError Requested operation is not valid: no CPU model specified [u’Traceback (most recent call last):\n’, u’ File “/usr/local/lib/python2.7/dist-packages/oslo_messaging/rpc/dispatcher.py”, line 142, in _dispatch_and_reply\n executo

Code

500

Details

File “/opt/stack/nova/nova/conductor/manager.py”, line 606, in _live_migrate block_migration, disk_over_commit) File “/opt/stack/nova/nova/conductor/tasks/live_migrate.py”, line 194, in execute return task.execute() File “/opt/stack/nova/nova/conductor/tasks/live_migrate.py”, line 62, in execute self._check_requested_destination() File “/opt/stack/nova/nova/conductor/tasks/live_migrate.py”, line 100, in _check_requested_destination self._call_livem_checks_on_host(self.destination) File “/opt/stack/nova/nova/conductor/tasks/live_migrate.py”, line 142, in _call_livem_checks_on_host destination, self.block_migration, self.disk_over_commit) File “/opt/stack/nova/nova/compute/rpcapi.py”, line 391, in check_can_live_migrate_destination disk_over_commit=disk_over_commit) File “/usr/local/lib/python2.7/dist-packages/oslo_messaging/rpc/client.py”, line 156, in call retry=self.retry) File “/usr/local/lib/python2.7/dist-packages/oslo_messaging/transport.py”, line 90, in _send timeout=timeout, retry=retry) File “/usr/local/lib/python2.7/dist-packages/oslo_messaging/_drivers/amqpdriver.py”, line 417, in send retry=retry) File “/usr/local/lib/python2.7/dist-packages/oslo_messaging/_drivers/amqpdriver.py”, line 408, in _send raise result

Created

Feb. 17, 2015, 8:20 a.m.

Can anybody help me with this.

Thanks

Hello,

you can try to specify libvirt_cpu_mode and libvirt_cpu_model flags in nova.conf file (https://wiki.openstack.org/wiki/LibvirtXMLCPUModel) as follows:

libvirt_cpu_mode = custom

libvirt_cpu_model = cpu64_rhel6

Don’t forget to restart the nova-compute service afterwards.

You can also find more error related messages directly in the libvirtd.log on the source/destination hosts.

Hope it helps.

Cheers!

Thanks a lot for the suggestion

I found my cpu model in cpu_max.xml and did changes accordingly

Still giving same error.

libvirtd.log after error:

2015-02-17 10:36:37.293+0000: 17166: warning : qemuOpenVhostNet:522 : Unable to open vhost-net. Opened so far 0, requested 1

How do I solve this ?

Thanks in advance.

and this

libvirtd.log

error : netcfStateCleanup:109 : internal error: Attempt to close netcf state driver with open connections

Hello again, unfortunately I have never seen that behavior before. You could try to use the model “cpu64_rhel6” or “kvm64” directly and see if it fits your configuration. Otherwise I would suggest starting with the logs from nova (nova-all.log and nova/nova-compute.log), libvirt (see the previous post) and QEMU (libvirt/qemu/instance/[instance.id].log) on both the source and the destination. Could you please paste the relevant parts of those logs somewhere online or send them to me directly (cima[at]zhaw.ch) together with the nova.conf file and your setup configuration?

Thanks a lot Cima.

Will be in touch with you ,

Hi,

Please clarify…

I do not have the directory /etc/sysconfig on ubuntu 14.04, is this something I create manually?

If the answer is yes, should I then create the file /etc/sysconfig/libvirtd manually as well?

Thanks

Hi Bobby, /etc/sysconfig is normally present in Red Hat-based Linux distributions (RHEL, CentOS, Fedora), but you are using Ubuntu, which is a different operating system.

libvirt is configured differently in Ubuntu.

In Ubuntu the libvirtd configuration file is found under /etc/default and it is called “libvirt-bin” (instead of “libvirtd”). So your file should be “/etc/default/libvirt-bin” (instead of “/etc/sysconfig/libvirtd”).

In this file you should add:

libvirtd_opts="-d -l"

(Instead of:

LIBVIRTD_ARGS="--listen")

The rest of the process should be similar.

Hi Cima,

I am running Devstack multinode setup(one controller,2 compute) from master branch.

I am trying to do block migration with the steps provided by you, but i am not seeing the instance migration.

can you please help me to resolve this.

I am observing that my nova-compute service on destination node is going offline after triggering block migration.

Here are my setup details.

One VM running controller+network+compute node (i had disabled nova-compute on this node).

Two other VMs are running as compute nodes.

I bring up VM on 1st compute node and trying to migrate to another compute node.

Interestingly i am not seeing error when i trigger block live migration from OpenStack dashboard, but after some time my destination compute node is going offline.

below is my nova.conf on compute node.

[DEFAULT]

vif_plugging_timeout = 300

vif_plugging_is_fatal = True

linuxnet_interface_driver =

security_group_api = neutron

network_api_class = nova.network.neutronv2.api.API

firewall_driver = nova.virt.firewall.NoopFirewallDriver

compute_driver = libvirt.LibvirtDriver

default_ephemeral_format = ext4

metadata_workers = 8

ec2_workers = 8

osapi_compute_workers = 8

rpc_backend = rabbit

keystone_ec2_url = http://10.212.24.106:5000/v2.0/ec2tokens

ec2_dmz_host = 10.212.24.106

xvpvncproxy_host = 0.0.0.0

novncproxy_host = 0.0.0.0

vncserver_proxyclient_address = 127.0.0.1

vncserver_listen = 127.0.0.1

vnc_enabled = true

xvpvncproxy_base_url = http://10.212.24.106:6081/console

novncproxy_base_url = http://10.212.24.106:6080/vnc_auto.html

logging_exception_prefix = %(color)s%(asctime)s.%(msecs)03d TRACE %(name)s ^[[01;35m%(instance)s^[[00m

logging_debug_format_suffix = ^[[00;33mfrom (pid=%(process)d) %(funcName)s %(pathname)s:%(lineno)d^[[00m

logging_default_format_string = %(asctime)s.%(msecs)03d %(color)s%(levelname)s %(name)s [^[[00;36m-%(color)s] ^[[01;35m%(instance)s%(color)s%(message)s^[[00m

logging_context_format_string = %(asctime)s.%(msecs)03d %(color)s%(levelname)s %(name)s [^[[01;36m%(request_id)s ^[[00;36m%(user_name)s %(project_name)s%(color)s] ^[[01;35m%(instance)s%(color)s%(message)s^[[00m

#force_config_drive = True

force_config_drive = False

send_arp_for_ha = True

multi_host = True

instances_path = /opt/stack/data/nova/instances

state_path = /opt/stack/data/nova

s3_listen = 0.0.0.0

metadata_listen = 0.0.0.0

ec2_listen = 0.0.0.0

osapi_compute_listen = 0.0.0.0

instance_name_template = instance-%08x

my_ip = 10.212.24.108

s3_port = 3333

s3_host = 10.212.24.106

default_floating_pool = public

force_dhcp_release = True

dhcpbridge_flagfile = /etc/nova/nova.conf

scheduler_driver = nova.scheduler.filter_scheduler.FilterScheduler

rootwrap_config = /etc/nova/rootwrap.conf

api_paste_config = /etc/nova/api-paste.ini

allow_resize_to_same_host = True

debug = True

verbose = True

[database]

connection =

[api_database]

connection =

[oslo_concurrency]

lock_path = /opt/stack/data/nova

[spice]

enabled = false

html5proxy_base_url = http://10.212.24.106:6082/spice_auto.html

[oslo_messaging_rabbit]

rabbit_userid = stackrabbit

rabbit_password = cloud

rabbit_hosts = 10.212.24.106

[glance]

api_servers = http://10.212.24.106:9292

[cinder]

os_region_name = RegionOne

[libvirt]

vif_driver = nova.virt.libvirt.vif.LibvirtGenericVIFDriver

inject_partition = -2

live_migration_uri = qemu+ssh://stack@%s/system

#live_migration_uri = qemu+tcp://%s/system

use_usb_tablet = False

cpu_mode = none

virt_type = qemu

live_migration_flag=VIR_MIGRATE_UNDEFINE_SOURCE,VIR_MIGRATE_PEER2PEER,VIR_MIGRATE_LIVE,VIR_MIGRATE_TUNNELLED

[neutron]

url = http://10.212.24.106:9696

region_name = RegionOne

admin_tenant_name = service

auth_strategy = keystone

admin_auth_url = http://10.212.24.106:35357/v2.0

admin_password = cloud

admin_username = neutron

[keymgr]

fixed_key = f1a0b6c1ce848e4ed2dad31abb3e7fd49183dc558e34931c22eb4884a9096ddc

I am able to ssh without password through stack user from controller node to all compute nodes.

Correction in above comment:

nova-compute on source compute node is going offline not destination.

Hi Cima,

Below is the output from libvirt.log

2015-08-25 06:34:32.323+0000: 4216: debug : virConnectGetLibVersion:1590 : conn=0x7f7b14002460, libVir=0x7f7b3bde1b90

2015-08-25 06:34:32.325+0000: 4212: debug : virDomainLookupByName:2121 : conn=0x7f7b14002460, name=instance-00000014

2015-08-25 06:34:32.325+0000: 4212: debug : qemuDomainLookupByName:1402 : Domain not found: no domain with matching name ‘instance-00000014’

2015-08-25 06:34:32.327+0000: 4213: debug : virConnectGetLibVersion:1590 : conn=0x7f7b14002460, libVir=0x7f7b3d5e4b90

2015-08-25 06:34:32.328+0000: 4214: debug : virDomainLookupByName:2121 : conn=0x7f7b14002460, name=instance-00000014

2015-08-25 06:34:32.328+0000: 4214: debug : qemuDomainLookupByName:1402 : Domain not found: no domain with matching name ‘instance-00000014’

2015-08-25 06:34:32.612+0000: 4218: debug : virConnectGetLibVersion:1590 : conn=0x7f7b14002460, libVir=0x7f7b3addfb90

2015-08-25 06:34:32.614+0000: 4216: debug : virDomainLookupByName:2121 : conn=0x7f7b14002460, name=instance-00000014

2015-08-25 06:34:32.614+0000: 4216: debug : qemuDomainLookupByName:1402 : Domain not found: no domain with matching name ‘instance-00000014’

The above one is libvirtd.log snapshot from destination host, but i didn’t see any issue on source compute node where instance is running

Hello, I would suggest taking a look “one level up” and checking nova-compute.log on both the source and the destination. The QEMU logs could also be useful (note that QEMU records logs per instance; the default location is /var/log/libvirt/qemu/[instance_name].log). The log is created after the instance is spawned. You can get the instance name using the “virsh list” command on the host it runs on. The virsh command also gives you an insight into the instance state and whether the instance is being created on the destination or removed from the source.

Hi Cima,

There was an SSH key issue. I replaced ssh with tcp in the nova configuration and now it is working fine.

Thanks,

Vasu.

Hello,

I am trying block migration in Juno and running into the below error:

Starting monitoring of live migration _live_migration /usr/lib/python2.7/site-packages/nova/virt/libvirt/driver.py:5798

2015-09-09 14:32:03.939 12766 DEBUG nova.virt.libvirt.driver [-] [instance: 9bb19e79-a8a2-481f-aefb-e05d0567c198] Operation thread is still running _live_migration_monitor /usr/lib/python2.7/site-packages/nova/virt/libvirt/driver.py:5652

2015-09-09 14:32:03.940 12766 DEBUG nova.virt.libvirt.driver [-] [instance: 9bb19e79-a8a2-481f-aefb-e05d0567c198] Migration not running yet _live_migration_monitor /usr/lib/python2.7/site-packages/nova/virt/libvirt/driver.py:5683

2015-09-09 14:32:03.948 12766 ERROR nova.virt.libvirt.driver [-] [instance: 9bb19e79-a8a2-481f-aefb-e05d0567c198] Live Migration failure: Unable to pre-create chardev file ‘/var/lib/nova/instances/9bb19e79-a8a2-481f-aefb-e05d0567c198/console.log’: No such file or directory

2015-09-09 14:32:03.948 12766 DEBUG nova.virt.libvirt.driver [-] [instance: 9bb19e79-a8a2-481f-aefb-e05d0567c198] Migration operation thread notification thread_finished /usr/lib/python2.7/site-packages/nova/virt/libvirt/driver.py:5789

2015-09-09 14:32:04.441 12766 DEBUG nova.virt.libvirt.driver [-] [instance: 9bb19e79-a8a2-481f-aefb-e05d0567c198] VM running on src, migration failed _live_migration_monitor /usr/lib/python2.7/site-packages/nova/virt/libvirt/driver.py:5658

2015-09-09 14:32:04.442 12766 DEBUG nova.virt.libvirt.driver [-] [instance: 9bb19e79-a8a2-481f-aefb-e05d0567c198] Fixed incorrect job type to be 4 _live_migration_monitor /usr/lib/python2.7/site-packages/nova/virt/libvirt/driver.py:5678

2015-09-09 14:32:04.442 12766 ERROR nova.virt.libvirt.driver [-] [instance: 9bb19e79-a8a2-481f-aefb-e05d0567c198] Migration operation has aborted

It used to work well for me with the Icehouse version. Not sure what’s wrong here. Am I hitting the bug mentioned below, any idea?

https://bugs.launchpad.net/nova/+bug/1392773

Pls ignore the above. Turns out the DNS entry was wrong. It worked for me.

Which DNS entry was wrong? I am having the same problem in Juno when the VM is over 64GB.