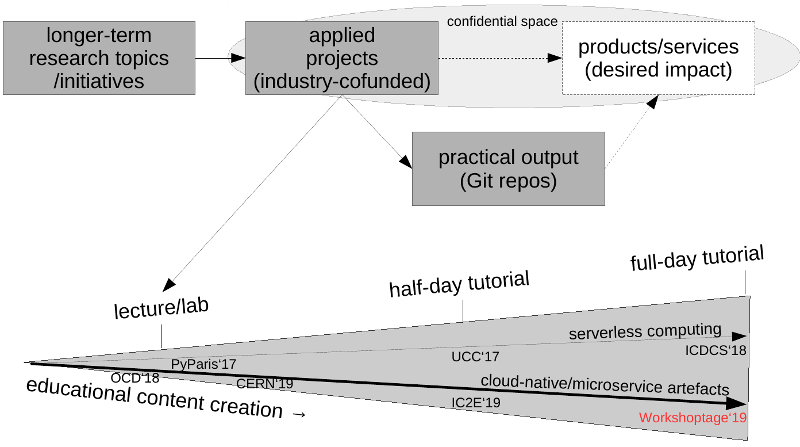

Our work in the Service Prototyping Lab at Zurich University of Applied Sciences consists of applied research, prototype development and knowledge transfer to industry. In this context, we have spent the past two years gathering educational and hands-on material, including our own contributions, for increasingly comprehensive tutorials. Progressing from single lectures to half-day and eventually full-day tutorials, we target both technology enthusiasts and experienced engineers who are open to new ideas and, at times, surprising facts. In this reflective blog post, we report on this week's experience of giving the full-day tutorial on microservice artefact observation and quality assessment.

At the 2019 Swiss workshop days organised by CH Open, we offered a tutorial on «Data-driven quality analysis of microservice artefacts in software development». While some other topics had to be cancelled due to lack of interest, we are grateful to have attracted eight participants with software engineering, testing and quality assurance backgrounds from various companies and institutional data centres. As instructors, our aim is to convey knowledge about off-the-shelf tools, including their potential weaknesses and omissions, and to present prototypical ideas on how to improve the tooling. As researchers, we take the participants' input on potential use cases and technological priorities seriously, so that our prototypes can mature into open source tools that are relevant for commercial use in production.

The tutorial alternated between slide-deck presentations and hands-on sessions, all running on a single OpenStack-hosted VM with Docker, Kubernetes, Helm and similar tools installed. The presentations covered these main topics:

- SaaSification and cloudification of software development; the trend towards polyglot and polytype artefacts in mixed-technology applications.

- Schematic views and potential quality issues in several microservice-related technologies: Dockerfiles, Docker images, Docker Compose files, Helm charts, Kubernetes operators, AWS Lambda functions and SAM applications, and blockchain DApps.

- Software evolution patterns and the significance of historic artefact data for detecting trends and regressions. Quantification of quality issues across various artefact types.

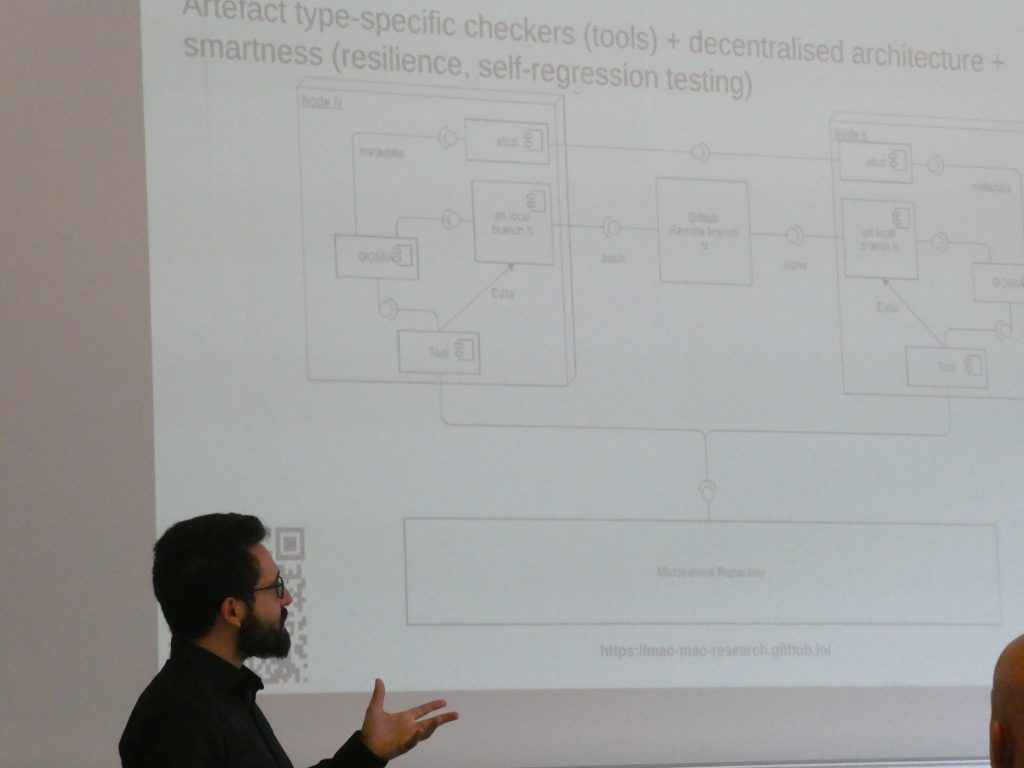

- Integration of quality assessment and improvement tools into CI/CD systems and other development environments. The dual approach of the MAO project, which combines real-time analysis with historic data analysis.

- Quality improvements through round-tripping, including visualisation and actionable advice, which keeps developers in the loop instead of using metrics to annoy them.

- Graphical dashboards and convenience tools to check the quality of a single artefact or of potentially thousands of artefacts.
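Historic artefact data makes regression detection possible. The following minimal sketch is not the MAO implementation. It assumes that each artefact version has already been reduced to a single count of linter findings, for example from hadolint or helm lint. The names `Snapshot` and `find_regressions` are hypothetical. The sketch flags every version whose issue count grew compared with its predecessor.

```python
from dataclasses import dataclass


@dataclass
class Snapshot:
    """Hypothetical record: one artefact version and its number of linter findings."""
    version: str
    issues: int


def find_regressions(history, threshold=1):
    """Return (previous, current, increase) for consecutive versions whose
    issue count grew by at least `threshold`. `history` must be ordered oldest first."""
    regressions = []
    for prev, curr in zip(history, history[1:]):
        increase = curr.issues - prev.issues
        if increase >= threshold:
            regressions.append((prev.version, curr.version, increase))
    return regressions


if __name__ == "__main__":
    history = [Snapshot("1.0", 3), Snapshot("1.1", 2), Snapshot("1.2", 5), Snapshot("1.3", 5)]
    print(find_regressions(history))  # [('1.1', '1.2', 3)]
```

A real dashboard would track several metrics per artefact and smooth out noise. Comparing consecutive versions is simply the most basic form of the trend analysis described above.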

In the hands-on sessions, we demonstrated hadolint for checking the quality of Dockerfiles and helm lint for doing the same with Helm charts. We outlined the different maturity levels of checkers and validation tools, ranging from simple reporting up to suggesting, generating and automatically applying improvements. Among the emerging use-inspired research tools, we presented HelmQA, the Docker/Kubernetes label consistency checker and the microservice artefact metrics observation dashboard.
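The idea behind a label consistency check can be illustrated with a small sketch. This is not the lab's checker. It assumes that Dockerfile labels use the `LABEL key=value` form, and that the Kubernetes labels have already been loaded from a manifest's `metadata.labels` into a plain dictionary. The functions `dockerfile_labels` and `label_mismatches` are hypothetical.

```python
import shlex


def dockerfile_labels(dockerfile_text):
    """Collect key=value pairs from LABEL instructions (legacy 'LABEL key value' form not handled)."""
    # Join backslash line continuations so multi-line LABEL instructions parse as one line.
    joined = dockerfile_text.replace("\\\n", " ")
    labels = {}
    for line in joined.splitlines():
        stripped = line.strip()
        if not stripped.upper().startswith("LABEL "):
            continue
        # shlex handles quoted values such as app="my shop".
        for token in shlex.split(stripped[len("LABEL "):]):
            if "=" in token:
                key, value = token.split("=", 1)
                labels[key] = value
    return labels


def label_mismatches(dockerfile_text, k8s_labels):
    """Compare Dockerfile labels with Kubernetes labels (assumed already parsed into a dict).

    Returns (mismatched, missing): keys present in both sources with differing values,
    and Dockerfile keys absent on the Kubernetes side."""
    docker = dockerfile_labels(dockerfile_text)
    mismatched = {
        key: (value, k8s_labels[key])
        for key, value in docker.items()
        if key in k8s_labels and k8s_labels[key] != value
    }
    missing = sorted(key for key in docker if key not in k8s_labels)
    return mismatched, missing


if __name__ == "__main__":
    dockerfile = 'FROM python:3.11-slim\nLABEL app="shop" \\\n      version="1.2"\n'
    print(label_mismatches(dockerfile, {"app": "shop", "version": "1.3"}))
```

A production checker would also need to handle the other label syntaxes, multi-stage builds and labels inherited from base images. These are among the cases in which off-the-shelf linters currently leave gaps.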

Together with the participants, we identified a number of important follow-up steps. Participants suggested integrating the validation tools more deeply into software development workflows, including CI/CD and testing tools. Instead of separate technology-specific checks, a holistic checker covering multiple artefact types should be integrated at various points, including as a plugin for code scanners such as SonarQube. As far as possible, we will invest effort in these integrations within the context of the MAO project. We invite other researchers, as well as representatives from companies who want to share their requirements, to join the project.