In previous blog posts (here and here) we showed how to set up OpenWhisk and deploy a sample application on the platform. We also compared the two open-source serverless platforms OpenWhisk and Knative in this blog post. As this work progressed, we shifted our focus slightly to another critical component of realistic serverless platforms: the services they integrate with, so-called Backend-as-a-Service (BaaS), which are, arguably, even more important. For this reason, in this blog post we look at how to integrate widely used databases with Knative and, potentially, with OpenWhisk in the future.

Our initial idea was to leverage database trigger mechanisms and write components that would listen for these events and publish them to a Kafka bus. Indeed, we started writing code targeting PostgreSQL to do just that, but then we came across the Debezium project, which solves essentially the same problem, albeit in a different context, with a much more mature codebase and support for multiple database systems. It made no sense to reinvent the wheel, so the objective became how best to integrate Debezium with Knative.

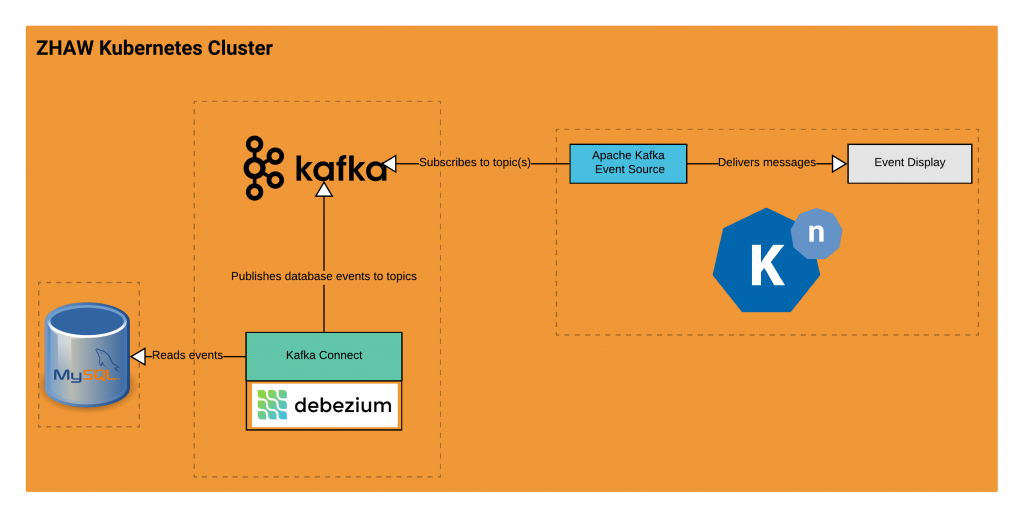

After some reading and experimentation we arrived at the solution shown in figure 1. It comprises a MySQL database connected to a Kafka Connect service, which uses the Debezium MySQL plugin to produce messages onto the Kafka bus. Knative’s Apache Kafka Event Source subscribes to the Kafka topics specified in its manifest, and finally an Event Display service prints out the messages it receives from the Apache Kafka Event Source.

Kafka Connect, a framework for connecting external systems to Kafka clusters, supports diverse integrations through a plugin architecture; Debezium is one such plugin. In fact, Debezium consists of multiple plugins, one for each database it supports. In our case, we used the MySQL plugin, which connects to the MySQL database over its standard port and operates on the database’s binary log (binlog). The binlog is essentially an ordered record of all operations that occur on the database. The Debezium connector reads through the binlog, filtering as appropriate, turns the relevant entries into events and streams them to Kafka topics, with a separate topic for each database table.
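To make the topic layout concrete: the Debezium MySQL connector names each per-table topic `<server name>.<database>.<table>`, where the server name is the logical name configured for the connector (`database.server.name` in the releases of this period). The Python sketch below builds and splits such names; `dbserver1` and `inventory.customers` are illustrative values only, and the helper names are our own.

```python
from typing import NamedTuple


class TableTopic(NamedTuple):
    """The three components of a Debezium MySQL per-table topic name."""
    server_name: str  # logical server name configured for the connector
    database: str
    table: str


def topic_for_table(server_name: str, database: str, table: str) -> str:
    """Build the topic name that carries change events for one table."""
    return f"{server_name}.{database}.{table}"


def parse_topic(topic: str) -> TableTopic:
    """Split a per-table topic name back into its components.

    Assumes the server and database names contain no dots; anything after
    the second dot is treated as the table name.
    """
    parts = topic.split(".", 2)
    if len(parts) != 3:
        raise ValueError(f"not a per-table topic: {topic!r}")
    return TableTopic(*parts)
```

For example, `topic_for_table("dbserver1", "inventory", "customers")` gives `dbserver1.inventory.customers`, which is the kind of name you would later list in the Kafka Event Source manifest.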

Finally, to consume the messages in the newly created topics, we use Knative’s Apache Kafka Event Source, which integrates Knative with Kafka and can consume messages from one or more Kafka topics. It forwards these messages to a Knative service (a function, in standard FaaS terminology), which can then act on the database change. In our case, this was a simple Event Display service that just printed the events it received.

Deploying MySQL

For simplicity, we used the pre-configured MySQL server Docker image provided by Debezium (which can be found here) and deployed it as a pod in a new namespace on our Kubernetes cluster. The required configuration changes are modest: binlog support must be enabled, with row-based logging, and the database must be accessible remotely. The required configuration is documented in detail here. Note that Debezium imposes few constraints on where the database is hosted; it works with self-managed or cloud-managed MySQL instances, for example, and can monitor multiple databases simultaneously. Debezium also supports other database systems such as MongoDB, PostgreSQL, Oracle and SQL Server.
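Debezium’s MySQL documentation lists the server settings the connector depends on, notably binary logging enabled (`log_bin`), `binlog_format=ROW` and `binlog_row_image=FULL`, together with a server ID. As a hypothetical pre-flight check, not part of Debezium, the Python sketch below inspects a dict of variable values such as you might collect from `SHOW VARIABLES`; how that dict is obtained is left as an assumption.

```python
from typing import Dict, List

# Settings the Debezium MySQL connector relies on, compared case-insensitively.
REQUIRED_SETTINGS = {
    "log_bin": "ON",
    "binlog_format": "ROW",
    "binlog_row_image": "FULL",
}


def check_binlog_settings(variables: Dict[str, str]) -> List[str]:
    """Return a list of problems; an empty list means every check passed.

    `variables` maps MySQL system variable names to their string values,
    e.g. the rows of SHOW VARIABLES gathered into a dict (hypothetical input).
    """
    problems = []
    for name, expected in REQUIRED_SETTINGS.items():
        actual = variables.get(name)
        if actual is None:
            problems.append(f"{name} is not reported")
        elif actual.upper() != expected:
            problems.append(f"{name} is {actual!r}, expected {expected!r}")
    # Binary logging also needs a non-zero server_id.
    if variables.get("server_id", "0").strip() in ("", "0"):
        problems.append("server_id must be set to a non-zero value")
    return problems
```

Running it against a server with `binlog_format` set to `MIXED`, for instance, reports `binlog_format is 'MIXED', expected 'ROW'`.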

Deploying Kafka and Kafka Connect Clusters

The next step was to deploy an Apache Kafka cluster and a Kafka Connect cluster with the Debezium MySQL plugin to capture events from our MySQL server. We used Strimzi, which provides a simple way to get up and running with Kafka and Kafka Connect on Kubernetes. Specifically, we followed the instructions for the 0.11.2 release to deploy both Kafka and Kafka Connect in the same namespace.

Kafka provides a configuration setting that controls whether a topic is created automatically when a producer writes to a topic that does not exist. In the standard Strimzi Kafka configuration this was disabled by default, which meant that Kafka Connect could not create its topics and therefore failed to monitor our database instance. To fix this, we set the auto.create.topics.enable option to true in the Strimzi Kafka manifest. More on adding configuration parameters to Kafka here.
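In a Strimzi `Kafka` resource, broker options go in the `spec.kafka.config` map. As a small illustration, assuming you have already loaded the manifest into a Python dict (for example with a YAML library, which is not shown), this hypothetical helper returns a copy with topic auto-creation switched on and leaves the rest of the resource untouched.

```python
import copy
from typing import Any, Dict


def enable_topic_autocreation(manifest: Dict[str, Any]) -> Dict[str, Any]:
    """Return a copy of a Strimzi Kafka resource with topic auto-creation on.

    Strimzi applies entries under spec.kafka.config to the broker
    configuration; this sets auto.create.topics.enable there.
    """
    patched = copy.deepcopy(manifest)  # do not mutate the caller's manifest
    kafka_spec = patched.setdefault("spec", {}).setdefault("kafka", {})
    kafka_spec.setdefault("config", {})["auto.create.topics.enable"] = True
    return patched
```

Because kubectl accepts JSON as well as YAML, the result can be serialised with `json.dumps` and applied directly.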

When deploying Kafka Connect, we used a custom-built Docker image that included the Debezium MySQL connector.

Kafka Connect and Debezium

As noted above, Kafka Connect uses the Debezium MySQL connector to read the MySQL binary log, which records all operations in the order in which the database commits them, including changes to table schemas as well as changes to the data stored in the tables. The MySQL connector produces a change event for each row-level INSERT, UPDATE and DELETE operation in the binlog. Change events for each table are published to a separate Kafka topic, and an additional topic records changes to the database schema.
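Each Debezium change event carries an envelope with `before` and `after` row images and an `op` code (`c` for create, `u` for update, `d` for delete, `r` for rows read during the initial snapshot). The Python sketch below, with a helper name of our own choosing, extracts those fields. It assumes JSON-serialised events, with or without the `schema`/`payload` wrapper the JSON converter adds when schemas are enabled, and treats an empty or `null` body as the tombstone Debezium emits after a delete by default.

```python
import json
from typing import Any, Dict, Optional, Tuple

Row = Optional[Dict[str, Any]]

# Debezium "op" codes mapped to the SQL operations they represent.
OPERATIONS = {"c": "INSERT", "u": "UPDATE", "d": "DELETE", "r": "SNAPSHOT"}


def summarize_change(raw: bytes) -> Tuple[str, Row, Row]:
    """Return (operation, before, after) for one Debezium change event."""
    event = json.loads(raw) if raw else None
    if event is None:
        # Tombstone: a null-valued record that follows a delete.
        return "TOMBSTONE", None, None
    # With schemas enabled, the change itself sits under "payload".
    payload = event.get("payload", event)
    op = OPERATIONS.get(payload["op"], payload["op"])
    return op, payload.get("before"), payload.get("after")
```

An INSERT therefore arrives with `before` set to `null` and the new row in `after`, while a DELETE carries the old row in `before` and `null` in `after`.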

Kafka Connect exposes a REST API through which you manage connectors, and hence the set of databases being monitored. To monitor a new database, you send a POST request to Kafka Connect’s /connectors resource with the necessary configuration in JSON format. The JSON configuration is explained here.
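For illustration, here is a Python sketch that builds such a registration request with the standard library. The request body is `{"name": ..., "config": {...}}` as Kafka Connect expects; the property names follow the Debezium MySQL connector releases of this period (later releases renamed several of them), while the Connect service address, hostnames, credentials and connector name are all hypothetical placeholders.

```python
import json
import urllib.request
from typing import Dict

# Hypothetical in-cluster address of the Kafka Connect REST API (default port 8083).
CONNECT_URL = "http://my-connect-cluster-connect-api:8083"

# Debezium MySQL connector settings; every value here is a placeholder.
mysql_connector_config = {
    "connector.class": "io.debezium.connector.mysql.MySqlConnector",
    "database.hostname": "mysql.db-namespace.svc",
    "database.port": "3306",
    "database.user": "debezium",
    "database.password": "dbz",
    "database.server.id": "184054",
    "database.server.name": "dbserver1",
    "database.whitelist": "inventory",
    "database.history.kafka.bootstrap.servers": "my-cluster-kafka-bootstrap:9092",
    "database.history.kafka.topic": "schema-changes.inventory",
}


def build_connector_request(name: str, config: Dict[str, str]) -> urllib.request.Request:
    """Build (but do not send) the POST /connectors request for a connector."""
    body = json.dumps({"name": name, "config": config}).encode("utf-8")
    return urllib.request.Request(
        f"{CONNECT_URL}/connectors",
        data=body,
        headers={"Content-Type": "application/json", "Accept": "application/json"},
        method="POST",
    )


def register_connector(request: urllib.request.Request) -> int:
    """Send the request and return the HTTP status (201 Created on success).

    urlopen raises HTTPError for 4xx/5xx responses, e.g. 409 if a connector
    with the same name already exists.
    """
    with urllib.request.urlopen(request) as response:
        return response.status
```

Keeping request construction separate from sending makes it easy to inspect the JSON before calling `register_connector` from inside the cluster.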

At this point, we were ready to tell Debezium to monitor our MySQL database. We sent a POST request containing the database connection details to the Kafka Connect REST API and received an HTTP 201 (Created) response, indicating that the connector had been registered.
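A 201 only confirms that the connector was registered; Kafka Connect’s `GET /connectors/<name>/status` endpoint reports whether the connector and its tasks are actually running. A minimal Python check of that response body might look like this; the function name is our own, while the fields it reads (`connector.state`, `tasks[].id`, `tasks[].state`) are those of Kafka Connect’s status response.

```python
import json
from typing import List


def failing_parts(status_json: str) -> List[str]:
    """List problems in a GET /connectors/<name>/status response body.

    An empty list means the connector and all of its tasks are RUNNING.
    """
    status = json.loads(status_json)
    problems = []
    connector_state = status["connector"]["state"]
    if connector_state != "RUNNING":
        problems.append(f"connector is {connector_state}")
    tasks = status.get("tasks", [])
    if not tasks:
        problems.append("no tasks assigned yet")
    for task in tasks:
        if task["state"] != "RUNNING":
            problems.append(f"task {task['id']} is {task['state']}")
    return problems
```

A `FAILED` task also includes a `trace` field in the same response, which is usually the quickest way to see why the connector cannot read the binlog.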

To test the configuration, we manually executed some SQL INSERT, UPDATE and DELETE queries while watching the Kafka Connect logs to confirm that it was receiving data from our database instance, and checked the topics created by Kafka Connect to confirm that messages were being produced.

Using Kafka Event Source for Knative

With Kafka and Kafka Connect/Debezium set up, we were ready to connect them to Knative. We used the latest version of Knative (v0.5 at the time of writing) and followed these instructions to install it.

To consume messages from the Kafka topics created by Debezium, we used the Apache Kafka Event Source for Knative. The Kafka Event Source is a Knative resource that subscribes to one or more Kafka topics and relays the messages it receives to Knative services or channels, referred to as sinks in Knative terminology. A sample Kafka Event Source manifest can be viewed here.
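To show the shape of such a manifest, the Python sketch below assembles a `KafkaSource` resource as a dict. The field layout follows our recollection of the v0.5-era eventing-sources sample (`sources.eventing.knative.dev/v1alpha1`, a comma-separated `topics` string, a Knative `Service` as sink) and should be checked against the CRD installed in your cluster; the resource name, consumer-group naming, bootstrap address and topic names are hypothetical.

```python
import json
from typing import Any, Dict, List


def kafka_source_manifest(name: str, bootstrap_servers: str, topics: List[str],
                          sink_service: str) -> Dict[str, Any]:
    """Build a KafkaSource that relays the given topics to a Knative Service."""
    return {
        "apiVersion": "sources.eventing.knative.dev/v1alpha1",
        "kind": "KafkaSource",
        "metadata": {"name": name},
        "spec": {
            "consumerGroup": f"{name}-group",  # hypothetical naming scheme
            "bootstrapServers": bootstrap_servers,
            "topics": ",".join(topics),  # comma-separated in this API version
            "sink": {
                "apiVersion": "serving.knative.dev/v1alpha1",
                "kind": "Service",
                "name": sink_service,
            },
        },
    }


if __name__ == "__main__":
    manifest = kafka_source_manifest(
        "mysql-changes",
        "my-cluster-kafka-bootstrap.kafka:9092",
        ["dbserver1.inventory.customers", "dbserver1.inventory.orders"],
        "event-display",
    )
    # kubectl accepts JSON manifests, e.g. pipe this output into: kubectl apply -f -
    print(json.dumps(manifest, indent=2))
```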

Finally, to do something with the received messages, we created the Event Display Knative service, which prints out the messages it receives from the Kafka Event Source. Voilà! Changes made to the MySQL database now triggered the service on Knative.
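The Event Display service in the Knative samples is written in Go; purely as an illustration, here is a rough Python stand-in, not the sample’s code. It assumes events arrive as HTTP POSTs in CloudEvents binary mode (event attributes in `ce-*` headers, the data in the body) and listens on the port Knative supplies through the `PORT` environment variable.

```python
import json
import os
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Iterable, Tuple


def describe_event(header_items: Iterable[Tuple[str, str]], body: bytes) -> str:
    """Render a received event: its CloudEvents ce-* headers, then the body."""
    lines = [f"{key}: {value}" for key, value in header_items
             if key.lower().startswith("ce-")]
    try:
        # Pretty-print JSON bodies such as Debezium change events.
        lines.append(json.dumps(json.loads(body), indent=2, sort_keys=True))
    except ValueError:  # not JSON (or not UTF-8): print it as text
        lines.append(body.decode("utf-8", errors="replace"))
    return "\n".join(lines)


class EventDisplayHandler(BaseHTTPRequestHandler):
    def do_POST(self) -> None:
        length = int(self.headers.get("Content-Length", 0))
        body = self.rfile.read(length)
        print(describe_event(self.headers.items(), body), flush=True)
        self.send_response(202)  # any 2xx tells the sender delivery succeeded
        self.end_headers()


def serve() -> None:
    """Run the server; Knative passes the port to listen on via $PORT."""
    port = int(os.environ.get("PORT", "8080"))
    HTTPServer(("", port), EventDisplayHandler).serve_forever()
```

Calling `serve()` from the container’s entry point is all that is needed; printing to stdout means the events show up in the pod logs, just as with the Go sample.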

It’s worth noting that this solution is rather simplistic and was created only for demonstration purposes. Knative supports much more complex eventing pipelines, in which multiple services are connected together via channels, and these are better suited to real-world contexts. More on Knative eventing here.

Next Steps

This solution highlights the essential building blocks for integrating databases with serverless platforms. However, many more questions arise, quite a few of them around security: how do the database’s AAA (authentication, authorization and accounting) mechanisms map to those of Kubernetes and the serverless platform? How can we ensure that functions or applications can only access data they are authorized to see? How can we ensure that the Kafka cluster remains well configured as we add more and more databases? And how can this solution integrate with other serverless platforms that put more emphasis on functions?

We will look into these and other questions in the future, but in our next post we will describe how we integrated a file storage service with Knative so that events can be triggered when, for example, a file is uploaded, analogous to an AWS Lambda function being triggered when a file is dropped into an S3 bucket. Woot!