The eternal software circle of life continues to pose non-trivial challenges. Developers write code, run tests, push and/or deploy, perhaps triggering more tests, and finally see their software used in production. Eventually, they learn whether everything works correctly or not, as indicated by log messages, user complaints and other side channels. Later still, when nothing else gets in the way, they might even attempt to fix the problem at whichever code locations are likely to contribute to the issue.

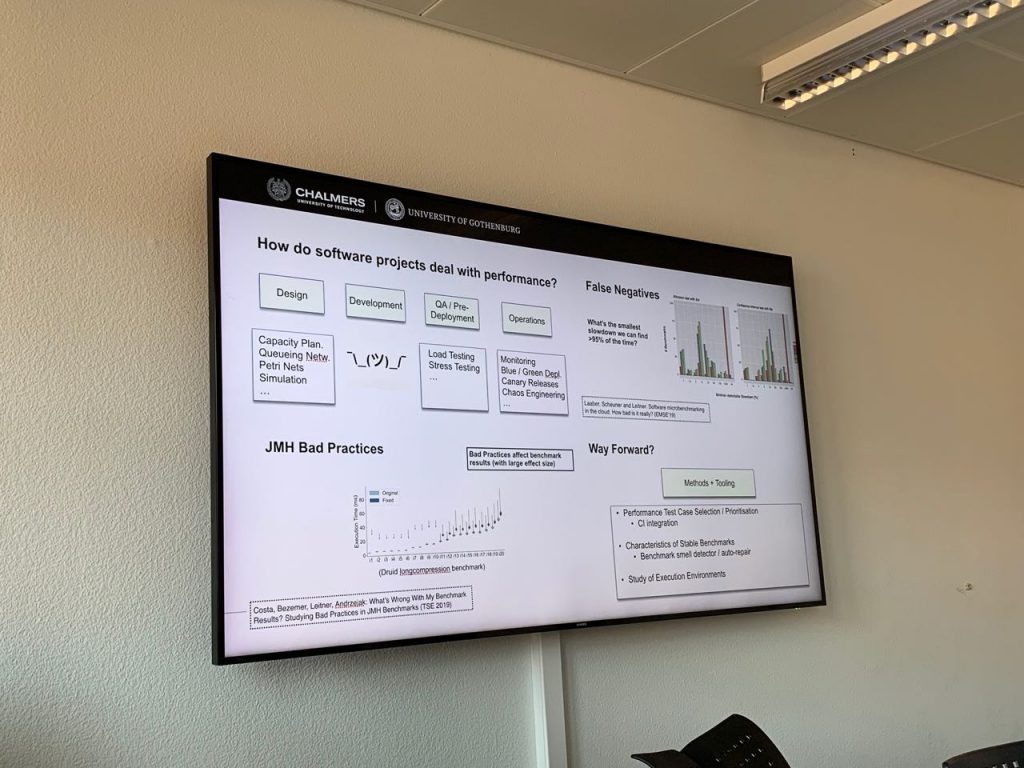

At the Service Prototyping Lab, we welcomed Philipp Leitner from Chalmers and University of Gothenburg (ICET Lab) as the sixth international speaker in our colloquium series. He elaborated on the performance aspects of this roundtripping and showed techniques to improve the process. In the simplified phase view of Design, Development, QA/Pre-Deployment and Operations, he and his fellow researchers focus on the development phase and analyse software frameworks which aim to tackle performance issue detection.

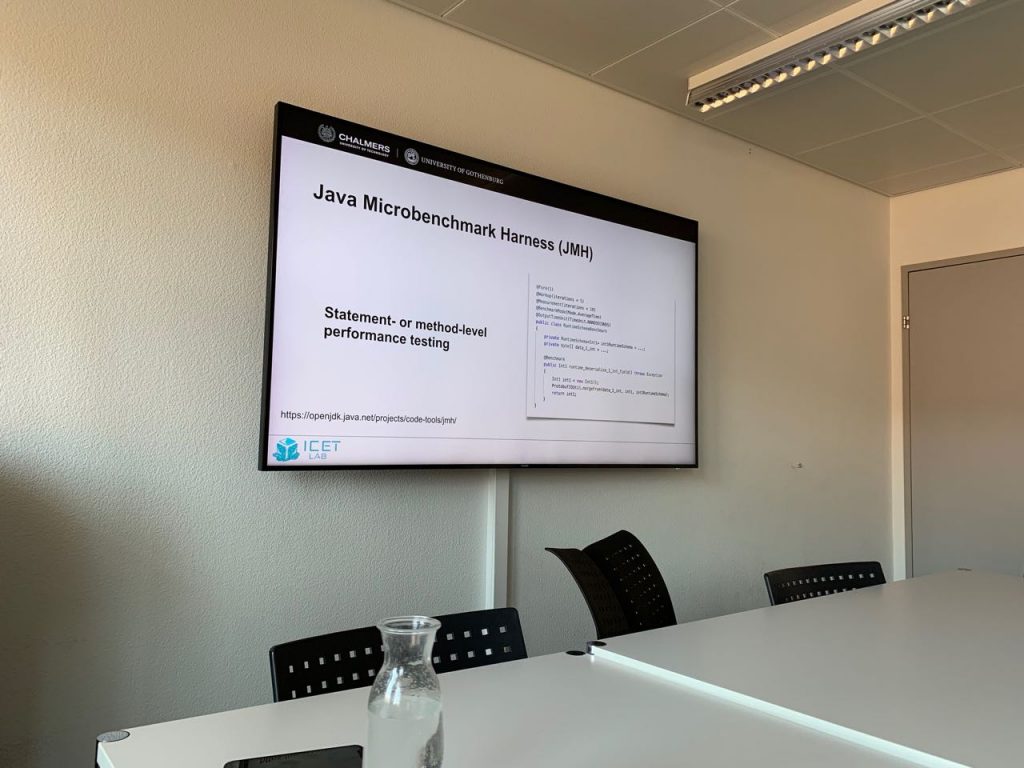

Using the Java Microbenchmark Harness (JMH) as an example, he pointed out that more often than not (really: almost always), microbenchmarks are used incorrectly on the code level, with developers falling into many antipattern traps even though the documentation warns against them. Moreover, even correctly annotated code is often not run regularly, or not run correctly, e.g. on developer notebooks or shaky cloud instances, and on language virtual machines with equally unpredictable timing (e.g. the JVM), which raises a lot of questions about the interpretability of the benchmark results.

By using cloud resources, however, benchmarks can be repeated multiple times and their results averaged. Moreover, regressions over time can be identified, and the crossing of predefined thresholds can be flagged. This means that promising integrations of microbenchmarks, for example through visualisation in IDEs or through automation in CI/CD pipelines, might eventually emerge as a standard development technique which we should teach in our software development courses.
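
To make the idea of averaging repeated runs and checking them against a predefined threshold more concrete, here is a minimal sketch in plain Java. It is not taken from the talk and does not use JMH or any tool presented there; the class name `RegressionCheck`, its methods, the sample timings and the 5% threshold are all hypothetical and chosen only for illustration. A real pipeline would likely also need to account for variance between runs rather than compare means alone.

```java
import java.util.Arrays;

// Hypothetical helper for illustration only; not part of JMH or of any tool from the talk.
final class RegressionCheck {

    // Arithmetic mean of the per-run timings (e.g. nanoseconds per operation).
    static double mean(double[] samples) {
        if (samples.length == 0) {
            throw new IllegalArgumentException("need at least one sample");
        }
        return Arrays.stream(samples).average().getAsDouble();
    }

    // Relative change of the candidate mean against the baseline mean;
    // a value of 0.10 means the candidate is 10% slower than the baseline.
    static double relativeSlowdown(double[] baseline, double[] candidate) {
        double base = mean(baseline);
        return (mean(candidate) - base) / base;
    }

    // Flags a regression when the slowdown exceeds the predefined threshold.
    static boolean isRegression(double[] baseline, double[] candidate, double threshold) {
        return relativeSlowdown(baseline, candidate) > threshold;
    }

    public static void main(String[] args) {
        // Made-up timings from repeated runs of the same benchmark on two code versions.
        double[] baseline = {100.0, 102.0, 98.0, 100.0};
        double[] candidate = {120.0, 118.0, 122.0, 120.0};

        System.out.printf("slowdown: %.2f%n", relativeSlowdown(baseline, candidate));
        System.out.println("regression: " + isRegression(baseline, candidate, 0.05));
    }
}
```

In a CI/CD setting, a check of this kind could fail the build when `isRegression` returns `true`, with the baseline timings taken from an earlier commit.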

The tooling resulting from this research work is already helpful, as evidenced by the many accepted Git pull requests that fix issues identified with static code analysis in open source projects using microbenchmarks. However, runtime and integration tooling needs to be improved, and given the lax state of the technology, it is likely that real impact and relief for software developers will come from the upcoming research work.