In our research group, we have for many years observed and systematically explored how cloud applications are developed. In particular, we focus our investigations on cloud-native applications, whose properties are largely determined by exploiting the capabilities of modern cloud platforms for both their development and operation. As we are involved in European research on testing cloud applications (Elastest), our aim was to look at the current project results through cloud-native glasses. This blog post reports on end-to-end testing of composite containerised applications from this perspective.

Software testing is of profound importance in a world where more and more processes are software-supported, software-defined and software-controlled. Ideally, one could formally verify the correctness of any single piece of software and any composed system, but in practice, testing is as far as industrial software development currently gets, even in safety-critical domains. Starting from basic unit testing, integration testing and end-to-end testing are adequate means of checking whether a system or application does what it is supposed to do. An example would be a public transport system whose usage is regulated by turnstiles and rechargeable tickets. The specification would say that (a) persons can only enter the system with a valid (charged) ticket, (b) the ticket is charged upon entering or leaving, (c) every entry and exit is recorded, and (d) as an invariant, no person is ever trapped in the system, because a successful entry guarantees that leaving is possible. In reality, due to bugs in the system design or implementation, these safety guarantees do not always hold. Swiss researchers have recently shown in a carefully prepared undercover study that one can indeed get stuck in such systems and can only leave via undocumented means.

Related to our example, most researchers associated with the Elastest consortium met in Móstoles, close to Madrid in Spain, for a consortium meeting that reviewed the current technical state of Elastest and aimed at advancing testing so that issues like the one mentioned can be avoided. From a user perspective, the Elastest software lets developers, testers and QA experts define, execute and analyse tests on various types of applications, including interactive web browser sessions, remote services and Docker compositions. In simple terms, Elastest is downloaded as a Docker container which, when started, downloads other containers to set up a sophisticated composition including a web frontend called TORM.

While Elastest was not yet ready for wider use during its first two years of development, this is now changing with the more recent releases. Hence, we are looking forward to using Elastest to test our dockerised tools (e.g. Snafu, HelmQA) and assure their quality while they are under development. This work will happen within the next few weeks, and we invite other developers of containerised applications to try out Elastest for themselves, too.

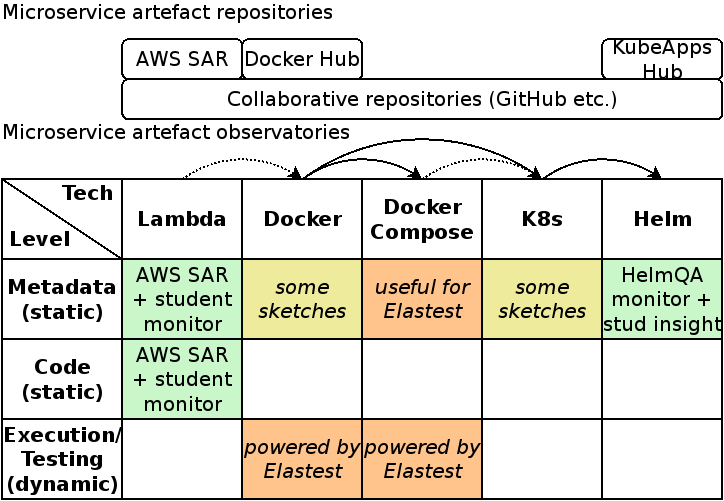

Many of the Elastest components are set up using Docker Compose. The corresponding compose files come with labels to differentiate the type of component. Some labels also carry test-related information. An obvious challenge during the development of Elastest itself is that these labels must be used consistently, and unlabelled or improperly labelled components need to be reported. Such consistency checks relate to our previous research on microservice metadata. Therefore, we came up with a refined “big picture” of our activities related to microservices, including the bidirectional possibilities of using Elastest for service assessment and of assessing the Elastest services themselves.

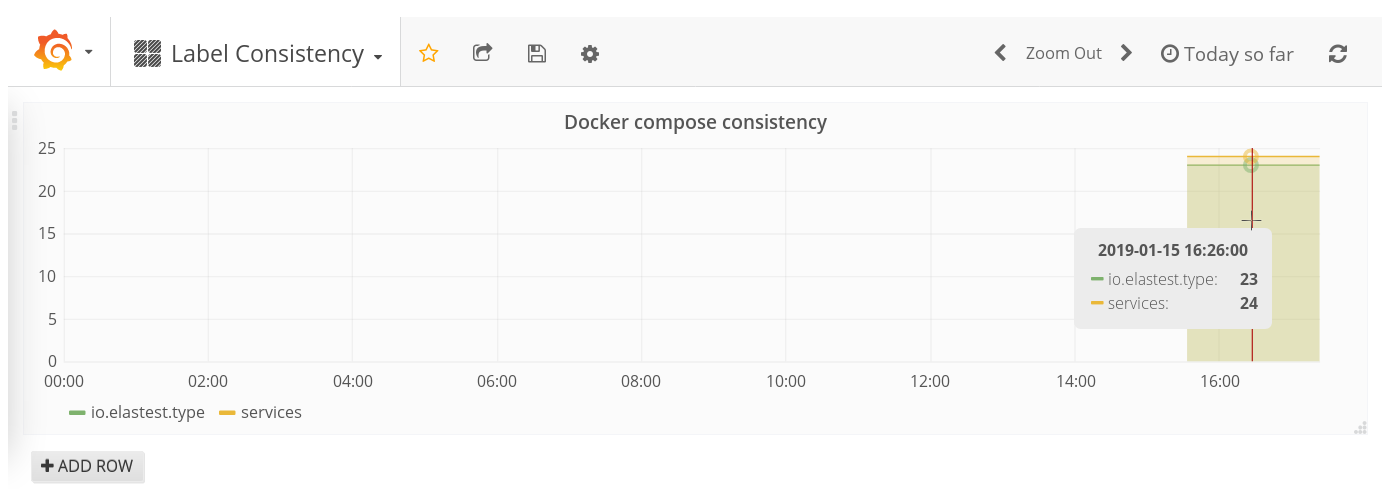

From this brainstorming, it did not take long before we had the first proof-of-concept prototype for consistency checking running. The script would run nightly to pull the Docker Compose deployment files from the Elastest GitHub repository, check the presence and validity of labels, and report the results to a Kafka endpoint, from which they can be visualised with Grafana. As the output below shows, the current state of development is not bad among the services with proper deployment information, with only one label missing. On the other hand, many services are currently not checked as they still lack a Docker Compose file or have it in a non-standard location.
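To illustrate the kind of check described above, here is a minimal sketch in Python. It is not the script itself and does not reproduce its output. It assumes the compose file has already been parsed into a dictionary (for instance with a YAML library), and the label key `org.example.type` together with its allowed values is purely hypothetical, as are the function names.

```python
# Hypothetical label rules, for illustration only: each service must carry
# the label "org.example.type" with one of the allowed values.
REQUIRED_LABELS = {"org.example.type": {"core", "test-support"}}


def normalise_labels(raw):
    """Return labels as a dict.

    Docker Compose accepts labels either as a mapping or as a list of
    "key=value" strings, so both forms are handled here.
    """
    if raw is None:
        return {}
    if isinstance(raw, dict):
        return {str(k): "" if v is None else str(v) for k, v in raw.items()}
    labels = {}
    for item in raw:
        key, _, value = str(item).partition("=")
        labels[key] = value
    return labels


def check_compose(compose):
    """Return a list of human-readable problems found in a parsed compose file."""
    problems = []
    for name, service in (compose.get("services") or {}).items():
        labels = normalise_labels((service or {}).get("labels"))
        for key, allowed in REQUIRED_LABELS.items():
            if key not in labels:
                problems.append(f"{name}: missing label {key}")
            elif labels[key] not in allowed:
                problems.append(f"{name}: invalid value {labels[key]!r} for {key}")
    return problems


# A made-up composition with one correct, one invalid and one unlabelled service.
compose = {
    "services": {
        "frontend": {"image": "example/frontend",
                     "labels": {"org.example.type": "core"}},
        "monitor": {"image": "example/monitor",
                    "labels": ["org.example.type=unknown"]},
        "worker": {"image": "example/worker"},
    }
}

for problem in check_compose(compose):
    print(problem)
```

Returning plain strings keeps the sketch short. A nightly job would more likely emit structured records so that a downstream consumer can aggregate them per service.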

This technique is also applicable to many cloud-native applications. These applications are primarily composed of microservices in order to achieve certain guarantees related to resilience and elasticity. On the implementation level, container compositions are often used for this purpose; a subset of those use Docker Compose, whereas others may use Kubernetes deployment descriptors, which support a similar label syntax for all objects. While appropriate techniques for testing cloud-native applications still need to be defined and evaluated, we can assume that the following ingredients contribute to avoiding issues: static tests on metadata, code and configuration, including dependency analysis; unit tests; deployment and integration tests; runtime tests under controlled imperfect conditions; controlled testing (e.g. A/B testing) in production. We are looking forward to discussing how the testability of applications can be improved and how the degree of testing can be expressed across the application development lifecycle.
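As a rough sketch of how such a static metadata test could carry over to Kubernetes, the following Python snippet inspects the `metadata.labels` mapping of already-parsed manifests. The two keys are taken from the labels that Kubernetes recommends for applications; treating them as mandatory is our assumption for this example, and the function names and sample manifests are hypothetical.

```python
# Assumption for this sketch: these two recommended label keys are required
# on every object. Real projects would define their own policy.
REQUIRED_KEYS = ("app.kubernetes.io/name", "app.kubernetes.io/component")


def missing_labels(manifest):
    """Return the required label keys that a single manifest does not define."""
    metadata = manifest.get("metadata") or {}
    labels = metadata.get("labels") or {}
    return [key for key in REQUIRED_KEYS if key not in labels]


def check_manifests(manifests):
    """Map "Kind/name" to its missing labels, listing only objects with gaps."""
    report = {}
    for manifest in manifests:
        name = (manifest.get("metadata") or {}).get("name", "?")
        ident = f"{manifest.get('kind', '?')}/{name}"
        missing = missing_labels(manifest)
        if missing:
            report[ident] = missing
    return report


# Made-up manifests: a fully labelled Deployment, a partly labelled Service
# and a ConfigMap without any labels.
manifests = [
    {"kind": "Deployment",
     "metadata": {"name": "web",
                  "labels": {"app.kubernetes.io/name": "web",
                             "app.kubernetes.io/component": "frontend"}}},
    {"kind": "Service",
     "metadata": {"name": "web",
                  "labels": {"app.kubernetes.io/name": "web"}}},
    {"kind": "ConfigMap", "metadata": {"name": "settings"}},
]

for ident, missing in check_manifests(manifests).items():
    print(ident, "is missing", ", ".join(missing))
```

The sketch only looks at top-level object metadata. Labels on pod templates inside a Deployment would need a separate lookup.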

The script is now available from here: https://github.com/serviceprototypinglab/label-consistency