Note

This post was first published on Medium by Leonardas (Badrie) Persaud, one of the students involved in this project. It is republished here because the project was run as part of the Software Maintenance and Evolution course taught by Sebastiano, and the project itself was supervised by Seán. The students involved were UZH CS Master’s students Badrie L. Persaud, Bill Bosshard, and William Martini. All project-related content is in the project’s GitHub repo.

Introduction

WebAssembly (WASM) is a binary instruction format for a stack-based virtual machine. Though initially designed for the web browser [see 1], it can also be used in applications outside the web. One use case of particular interest to us is running WASM in a serverless context: WASM supports multiple source languages, which compile down to a lightweight runnable entity that is in principle portable across different processors and platforms. WASM is also designed to be safe, efficient and fast.

Running WASM in Serverside Context

Although WASM support for many languages is ongoing via extensions to widely used compilers, we observed issues working with Golang and ended up focusing on C/C++ and Rust for the purposes of this work. A list of supported languages can be found here.

C/C++ code can be compiled to WASM using either Emscripten or Clang with the wasm32-wasi compilation target. Having a WASM binary is of course not enough: a means to run it on the native processor is also required. In this work, we looked at two approaches: one uses a WASM runtime which maps WASM instructions to native processor instructions during execution, and the other compiles the WASM executable to native processor instructions ahead of time. Wasmer, Wasmtime, and Lucet were considered here. The first two are runtimes which execute an arbitrary WASM binary; the last compiles the WASM binary to native code. A full list of WASM runtimes can be found here.

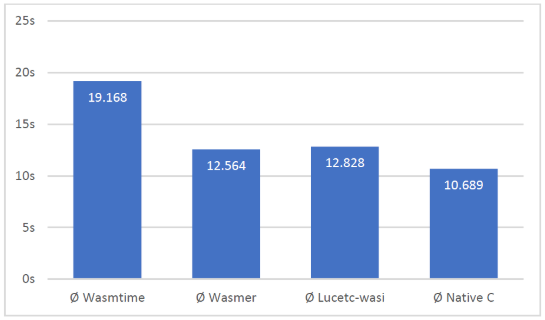

To see how these different approaches perform, we compared them using a simple primes benchmark.

Here we see that Wasmer’s performance is similar to Lucet’s, with both performing faster than Wasmtime. We performed a number of other comparisons using memory-bound and compute-bound workloads, and this conclusion largely held across all the experiments we performed. For this reason, and because Wasmer has attracted substantial developer interest, we chose to go ahead with Wasmer.

Edit: Till Schneidereit from the Bytecode Alliance reached out to note that Wasmtime’s slower performance was due to optimizations being disabled by default in previous versions of Wasmtime.
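As an illustration of the kind of compute-bound program such a primes benchmark involves, here is a minimal sketch in Rust, one of the languages we compiled to WASM. The trial-division approach, the function names and the `limit` value are assumptions chosen for illustration, not the exact benchmark used in the project. The same source can be built natively or for a WASI target and then run under each runtime to compare timings.

```rust
use std::time::Instant;

/// Returns true if `n` is prime, using trial division by odd numbers up to sqrt(n).
fn is_prime(n: u64) -> bool {
    if n < 2 {
        return false;
    }
    if n % 2 == 0 {
        return n == 2;
    }
    let mut d = 3;
    while d * d <= n {
        if n % d == 0 {
            return false;
        }
        d += 2;
    }
    true
}

/// Counts the primes in the inclusive range [0, limit].
fn count_primes(limit: u64) -> usize {
    (0..=limit).filter(|&n| is_prime(n)).count()
}

fn main() {
    // Hypothetical workload size; pick a value large enough for stable timings.
    let limit = 200_000;
    let start = Instant::now();
    let count = count_primes(limit);
    println!("{} primes up to {} in {:?}", count, limit, start.elapsed());
}
```

Timing inside the program only measures the computation itself; runtime start-up and compilation costs would need to be measured from outside the process.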

Running WASM in Serverless Context

In most of today’s serverless contexts, functions run in Docker containers. For this reason, we investigated how to run WASM-based serverless functions easily in Docker containers, which in principle could then be deployed on arbitrary serverless platforms.

We first looked at the possibility of using OpenWhisk, which allows running Docker containers [see 6]. One disadvantage is that the container needs to be uploaded publicly to Docker Hub, and OpenWhisk requires that the container runs a service which exposes specific endpoints. We used a basic Python Flask service which triggered the Wasmer runtime and the WASM application. This approach had the undesirable side effect of generating a large container image, but we managed to get it working even so.

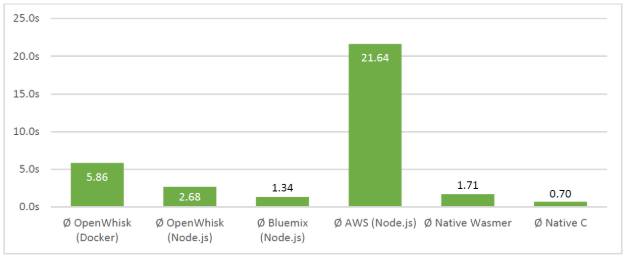

While investigating the Docker-based approach, we found that it is possible to invoke WASM functionality directly from the Node.js runtimes available on the different serverless platforms [see 7]. This is useful since Node is supported by most serverless platforms. As a simple benchmark, we computed the Fibonacci number for N = 42.

As time was running out, we were unable to perform a comprehensive analysis of the relative merits of the Docker solution and the Node.js solution. However, we did perform a basic comparison in which the WASM-based function was invoked in different ways, as shown in Figure 2. We observed that the Node.js solution performed better than the Docker container in the scenarios we explored. One drawback of the Node solution, however, is that it currently only supports integer data types. We did not manage to find an explanation for the slow run time on AWS.
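To make the integer-only restriction concrete, here is a minimal sketch of a Fibonacci function whose signature uses only 32-bit integers, written in Rust. The function name and the naive recursive formulation are assumptions for illustration; the project's actual benchmark code may differ. An integer-in, integer-out signature like this one needs no conversion at the boundary between the host and the WASM module.

```rust
/// Naive recursive Fibonacci with fib(0) = 0 and fib(1) = 1.
///
/// Both the argument and the result are plain 32-bit integers, so the
/// function fits the integer-only interface described above.
/// fib(42) = 267914296, which still fits in an i32.
///
/// When building this as a WebAssembly library, an export attribute
/// (for example `#[no_mangle]`) would be added here so that the host
/// can look the function up by name.
pub extern "C" fn fib(n: i32) -> i32 {
    if n < 2 {
        n
    } else {
        fib(n - 1) + fib(n - 2)
    }
}
```

The exponential-time recursion is deliberate in a sketch like this: it keeps the runtime busy with function calls and integer arithmetic, which is what makes N = 42 a usable compute-bound workload.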

Conclusion

The project produced a performance comparison of different solutions for running WASM on the server side and then considered how WASM can be deployed to different serverless platforms (AWS and OpenWhisk). We found that Node.js had some advantages over a Docker-based solution, such as better performance and easier packaging. However, a Docker-based solution could have other benefits, e.g. better integration with existing serverless platforms. Ultimately, we believe it is likely that serverless platforms will need to support WASM natively, without the need to encapsulate WASM functions within Docker containers. In any case, this technology is still in the early days of its evolution and there remain many interesting issues to be explored and addressed.

Quick Links

- WebAssembly with Go

- Compiling C to wasm and running on Wasmtime

- Compiling C to wasm and running on Lucet

- Compiling Rust to wasm

- Putting Wasmer binary into a Docker Container

- Creating an OpenWhisk compliant Docker Container

- Using Node.js to deploy wasm on Serverless