Many providers of hosted services, including cloud applications, face conflicting requirements when handling log data. On the one hand, storing logs consumes resources and should be minimised or avoided altogether to save cost. On the other hand, regulatory constraints, such as retaining data for future audits, require that logs be kept. A smart way of encoding the data is therefore needed. The encoding encompasses both compression, to keep resource use low, and encryption, to prevent information from leaking to unauthorised parties, for instance when logging for the purpose of intrusion detection. On an algorithmic level, the encoded data should remain usable for computation, in particular comparison and search. In this blog post, based on the didactic log example shown in the figure below, we present algorithms and architectures for handling cloud log files in a smart way.

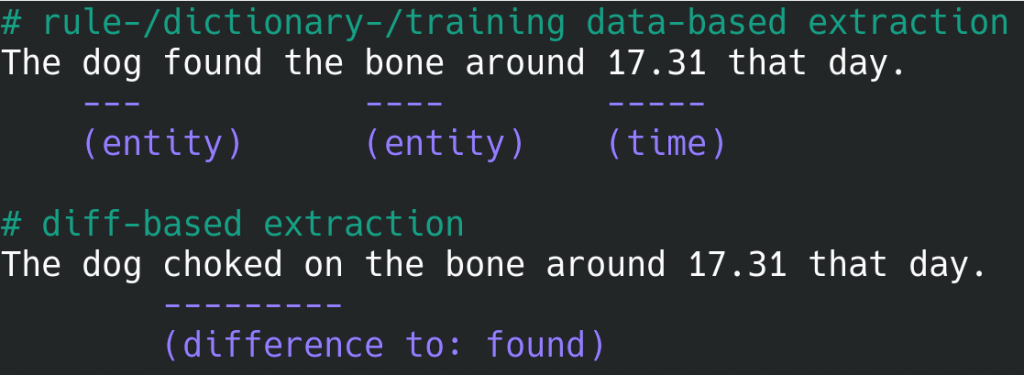

The example shows two hypothetical log lines, both simplified by omitting the timestamp and any associated device information. When the first line is parsed, the significance of the information it contains can only be evaluated through named-entity recognition and rule- or pattern-based entity extraction. When the second line is parsed, byte-level and contextual differences from the previous line can also be taken into account. A sound approach is therefore to combine both techniques: extract the significant parts of the data, then compress the remainder. Good to excellent compression ratios are achievable because long log files typically consist of monotonous, nearly but not quite identical messages.
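The combination of pattern-based extraction and compression of the remainder can be sketched as follows. This is a minimal illustration under stated assumptions, not the Semlog implementation: the two regular expressions, the `ipaddress` attribute name, the placeholder syntax and the sample log lines are all hypothetical. Each line is split into a template, stored only once, and the attribute values that differ between lines.

```python
import re

# Hypothetical extraction rules; a real rule set would be far richer.
PATTERNS = {
    "ipaddress": re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b"),
    "portnumber": re.compile(r"(?<=port )\d{1,5}\b"),
}

def extract_attributes(line):
    """Return (template, attributes); matched values become <name> placeholders."""
    attributes = {}
    template = line
    for name, pattern in PATTERNS.items():
        match = pattern.search(template)
        if match:
            attributes[name] = match.group(0)
            template = (template[:match.start()] + "<" + name + ">"
                        + template[match.end():])
    return template, attributes

def encode(lines):
    """Store each distinct template once; keep only attributes per line."""
    template_ids = {}  # template text -> index, in order of first appearance
    records = []       # one (template index, attributes) pair per log line
    for line in lines:
        template, attributes = extract_attributes(line)
        index = template_ids.setdefault(template, len(template_ids))
        records.append((index, attributes))
    return list(template_ids), records

if __name__ == "__main__":
    # Hypothetical log lines, not the ones from the figure.
    sample = [
        "connection from 10.0.0.1 on port 8008",
        "connection from 10.0.0.2 on port 8009",
    ]
    templates, records = encode(sample)
    print(templates)
    print(records)
```

With nearly identical messages, the number of templates stays small while the per-line records shrink to a few attribute values, which is where the compression gain would come from.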

Finding differences the right way is not trivial. Apart from byte-level identification of changes, insertions or removals, context elements such as separators (spaces, commas, semicolons) need to be considered to reduce unnecessary diffs.
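One way to take separators into account is to diff tokens rather than bytes. The sketch below is an assumption about how this could be done, not the algorithm used in Semlog. It splits lines on spaces, commas and semicolons, then compares the token sequences with `difflib.SequenceMatcher` from the Python standard library. The function names and the sample lines are hypothetical.

```python
import difflib
import re

# Separators are captured so that they survive as tokens of their own.
SEPARATORS = re.compile(r"([ ,;])")

def tokenize(line):
    """Split a log line into value tokens and separator tokens."""
    return [token for token in SEPARATORS.split(line) if token]

def token_diff(previous, current):
    """Return (operation, old text, new text) for every non-equal region."""
    a, b = tokenize(previous), tokenize(current)
    matcher = difflib.SequenceMatcher(None, a, b, autojunk=False)
    return [
        (tag, "".join(a[i1:i2]), "".join(b[j1:j2]))
        for tag, i1, i2, j1, j2 in matcher.get_opcodes()
        if tag != "equal"
    ]

if __name__ == "__main__":
    print(token_diff("user admin logged in, port 8008",
                     "user admin logged in, port 8018"))
```

A byte-level diff of the two sample lines would report a single changed digit in the middle of the port number. The token-level diff instead reports the whole value `8008` as replaced by `8018`, which is the unit an attribute-based index would presumably want.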

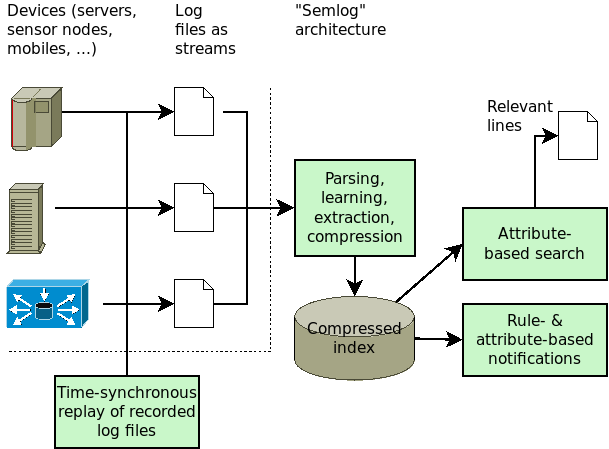

Beyond the algorithm, an architectural integration is needed. A sound approach is to divide the system into four parts: log file provisioning (this part is optional and is typically implemented already with network logging), file parsing and semantic detection of attributes along with attribute-based compression, interactive attribute-based search, and attribute-based alerting and notification. The following figure shows the architecture, again in simplified form, of the algorithmic-architectural approach called “Semlog” for semantic log handling.

All of this appears to be over-engineered at first. Why not just compress with gzip, use zgrep for search, and something based on ztail for notification? Oh wait, there is no ztail (yet)… and the other “Z commands” may also have their drawbacks, however established they may be on today’s systems, in particular regarding memory use and operation speed.

Furthermore, the system should work not only with static log files but also with continuous log streams. Given the modular architecture, which could be implemented as command-line tools or microservices, and developers' preference for JSON for structured messaging, partial JSON stream processing using JSONPath is another suitable ingredient for convenient programmatic access. In contrast to typical DOM-style document parsing, the document may still be incomplete; yet in contrast to SAX-style stream parsing, not every opening and closing brace needs to be accounted for in code. Instead, triggers are set on whole blocks identified by these paths.
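The idea of path-based triggers on an incomplete stream can be illustrated with the standard library alone. The sketch below is deliberately simplified and makes several assumptions: the stream is a sequence of concatenated top-level JSON objects, plain dotted keys stand in for real JSONPath expressions, and the class name `PartialJSONStream` and the `attributes.portnumber` path are hypothetical. Input may arrive in arbitrary chunks, and a callback fires as soon as a complete object containing the path is available.

```python
import json

class PartialJSONStream:
    """Fire callbacks on dotted paths while JSON objects arrive in chunks."""

    def __init__(self):
        self._decoder = json.JSONDecoder()
        self._buffer = ""
        self._triggers = []  # list of (list of keys, callback)

    def on(self, path, callback):
        """Register a callback for a dotted path such as 'a.b'."""
        self._triggers.append((path.split("."), callback))

    def feed(self, chunk):
        """Append a chunk and process every object that is now complete."""
        self._buffer += chunk
        while True:
            self._buffer = self._buffer.lstrip()
            if not self._buffer:
                return
            try:
                obj, end = self._decoder.raw_decode(self._buffer)
            except json.JSONDecodeError:
                # Treated as incomplete input; malformed data would also
                # end up here and stall the stream in this simple sketch.
                return
            self._buffer = self._buffer[end:]
            self._dispatch(obj)

    def _dispatch(self, obj):
        for keys, callback in self._triggers:
            node = obj
            for key in keys:
                if not isinstance(node, dict) or key not in node:
                    break
                node = node[key]
            else:
                callback(node)  # every key on the path was found

if __name__ == "__main__":
    stream = PartialJSONStream()
    stream.on("attributes.portnumber", print)
    stream.feed('{"attributes": {"portnum')  # incomplete, nothing fires yet
    stream.feed('ber": 8008}}')              # object complete, trigger fires
```

A full implementation would use a proper JSONPath library and could fire on blocks inside a single, still unfinished document; this sketch only shows the trigger mechanism.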

The implementation thus consists of four parts:

- A netlogserve convenience script that reads a static log file and replays it at the original logging speed, taking the time offset into consideration.

- An slc (semantic log compression) script that reads from a static file or a stream (including stdin), identifies attributes, and creates or updates a compressed index for search in addition to possible structured output (on stdout).

- Another script slcgrep that reads the index to find matching log lines.

- Finally, a script slcnotify that installs conditional triggers and reads a file (including stdin) to notify upon the continued or first-time presence of conditions.
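To make the notion of conditional triggers more concrete, the following sketch distinguishes first-time from continued presence of a condition. It is an assumption about plausible semantics, not the behaviour of slcnotify itself. The condition syntax `name:value` mirrors the `portnumber:8008` argument used in this post, while the function names and the record format (one dictionary of attributes per log line) are hypothetical.

```python
def parse_condition(spec):
    """Split a 'name:value' condition into its two parts."""
    name, _, value = spec.partition(":")
    return name, value

def notify(records, spec):
    """Yield ('first', record) on the first match and ('continued', record)
    on every later match of the condition."""
    name, value = parse_condition(spec)
    seen = False
    for record in records:
        # Compare as strings so that 8008 and "8008" both match.
        if name in record and str(record[name]) == value:
            yield ("continued" if seen else "first", record)
            seen = True

if __name__ == "__main__":
    # Hypothetical attribute records, one per log line.
    records = [
        {"portnumber": 8008},
        {"portnumber": 22},
        {"portnumber": 8008},
    ]
    for kind, record in notify(records, "portnumber:8008"):
        print(kind, record)
```

Because `notify` is a generator, it works equally well on a finished list and on an iterator that is still being filled from a stream.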

The scripts can be connected through pipes to combine their functionality. In scalable environments, they can further be set up as interconnected microservices with additional wrapper code, even though the integration of CLI tools into cloud platforms (e.g. PaaS or cloud functions) is still often not seamless.

```shell
netlogserve examples/admin.log | slc -j - | slcnotify portnumber:8008
```

The emerging implementation is publicly available via Git.