by Fernando Benites (ZHAW and SpinningBytes)

cross-posted from github

We explain here, step by step, how to reproduce results of the approach and discuss parts of the paper. The approach was aimed at building a strong baseline for the task, which should be beaten by deep learning approaches, but we did not achieve that, so we submitted this baseline, and got second in the flat problem and 1st in the hierarchical task (subtask B). This baseline builds on strong placements in different shared tasks, and although it only is a clever way for keyword spotting, it performs a very good job. Code and data can be accessed in the repository GermEval_2019

Task Description

The task was basically to classify blurbs (small summaries/advertorial texts/?) into 8 classes (subtask A) or into hierarchical structured 343 labels (subtask B). There were 11638 samples in the training set, 2910 in the development and 4157 in the test set. There are some indicators that the development and test set were similar to the training data, as the average number of labels were similar (3.1). See https://www.inf.uni-hamburg.de/en/inst/ab/lt/resources/data/germeval-2019-hmc/gest19-1-description.pdf for a (very) detailed description of the task. The task is not especially useful for real applications, but one can see what can be performed by hierarchical classifiers for document classification even with few texts and a large number of labels on German text. Still, a future application would be that a publishing house receive a book, create the blurb, classify it automatically and put online.

Literature

Please see the detailed Task description for a general Literature. For the model, we were inspired by the architecture from https://www.researchgate.net/publication/316602155_MAZA_Submissions_to_VarDial_2017 . Also check out the our approach which shaped this approach used in VarDial 2018: https://blog.zhaw.ch/datascience/twist-bytes-vardial-2018/

Approach

First, we load the libraries (we need to install also the sklearn from my fork). We discuss here the hierarchical approach (Subtask B), to solve the 343 labels problem. The root node solution is similar but has more ngrams and higher number of maximum features. We go step by step in a jupyter session over the code.

#!/usr/bin/env python """ GermEval 2019 Hierarchical classification shared task Twistbytes Approach (Fernando Benites) """ from sklearn.model_selection import train_test_split from sklearn.pipeline import make_pipeline # needs the one from pip install git+https://github.com/fbenites/sklearn-hierarchical-classification.git #or the developer branch from sklearn_hierarchical_classification.classifier import HierarchicalClassifier from sklearn_hierarchical_classification.constants import ROOT from sklearn.pipeline import Pipeline, FeatureUnion from sklearn.feature_extraction.text import TfidfVectorizer from sklearn.metrics import f1_score, classification_report, make_scorer from sklearn.model_selection import train_test_split from sklearn import preprocessing from sklearn.preprocessing import MultiLabelBinarizer from sklearn.svm import LinearSVC import numpy as np from sklearn.multiclass import OneVsRestClassifier import nltk import sys #read data utilities from parse_data import * # Used for seeding random state RANDOM_STATE = 42

We now introduce the build for the feature extractor. It uses many TfidfVectorizers for different n-grams (1-7 and 1-3 with German stopwords removal, and 2-3 char n-gram) to create a count matrix (Tf=> Term frequency) and weight the matrix with the inverse document frequency (idf). This creates a matrix which gives high weights/values for n-grams which occur in specific documents, and penalizes words that occur often (no document specificity). A further note, we changed here from the competition the values from 2-5 used in the submitted predictions, to 1-7 which gives a plus of 0.002 f-1 micro score. The FeatureUnion construct allows us to glue every thing in a big matrix, so the sklearn-like classifier can process it in one run.

def build_feature_extractor():

context_features = FeatureUnion(

transformer_list=[

('word', TfidfVectorizer(

strip_accents=None,

lowercase=True,

analyzer='word',

ngram_range=(1, 7),

max_df=1.0,

min_df=0.0,

binary=False,

use_idf=True,

smooth_idf=True,

sublinear_tf=True,

max_features=70000

)),

('word3', TfidfVectorizer(

strip_accents=None,

lowercase=True,

analyzer='word',

ngram_range=(1, 3),

max_df=1.0,

min_df=0.0,

binary=False,

use_idf=True,

smooth_idf=True,

sublinear_tf=True,

stop_words=nltk.corpus.stopwords.words('german'),

max_features=70000

)),

('char', TfidfVectorizer(

strip_accents=None,

lowercase=False,

analyzer='char',

ngram_range=(2, 3),

max_df=1.0,

min_df=0.0,

binary=False,

use_idf=True,

smooth_idf=True,

sublinear_tf=True,

)),

]

)

features = FeatureUnion(

transformer_list=[

('context', Pipeline(

steps=[('vect', context_features)]

)),

]

)

return features

We create now a function to nicely print the results for uploading to the competition website:

def print_results(fname,hierarchy,y_pred,mlb,ids,graph):

it_hi=[tj for tk in hierarchy.values() for tj in tk]

roots=[tk for tk in hierarchy if tk not in it_hi]

prec=lambda x: [tk for tk in graph.predecessors(x )]+ [tk for tj in graph.predecessors(x )for tk in prec(tj)]

with open(fname, "w") as f1:

for task in range(2):

if task==0:

f1.write("subtask_a\n")

for i in range(y_pred.shape[0]):

f1.write(ids[i]+"\t")

st1=""

labs=set()

for j in y_pred[i,:].nonzero()[0]:

if mlb.classes_[j] in roots:

st1+=mlb.classes_[j][2:]+"\t"

else:

for tk in prec(mlb.classes_[j]):

if tk==-1:

continue

if tk[0]=="0":

labs.add(tk[2:])

f1.write(st1[:-1]+"\t".join(labs)+"\n")

if task==1:

f1.write("subtask_b\n")

for i in range(y_pred.shape[0]):

f1.write(ids[i]+"\t")

st1=""

for j in y_pred[i,:].nonzero()[0]:

st1+=mlb.classes_[j][2:]+"\t"

f1.write(st1[:-1]+"\n")

Let’s move to the main part, first we load the data. Important here, the blurbs*.txt are in fact xml, which we load with a helper script. For that we diverge from the original data, by wrapping the content of the files with a root node. This is already fixed in the data from my github directory.

if __name__ == "__main__":

train=1

if "data" not in globals():

if train==1:

data,labels=read_data("blurbs_train.txt")

data_dev,labels_dev=read_data("blurbs_dev.txt")

else:

data,labels=read_data("blurbs_train_and_dev.txt")

data_dev,labels_dev=read_data("blurbs_test_nolabel.txt")

hierarchy, levels=read_hierarchy("hierarchy.txt")

r"""Test that a nontrivial hierarchy leaf classification behaves as expected.

We build the following class hierarchy along with data from the handwritten digits dataset:

<ROOT>

/ \

A B

/ \ | \

1 7 C 9

/ \

3 8

"""

if "ROOT" in hierarchy:

hierarchy[ROOT] = hierarchy["ROOT"]

del hierarchy["ROOT"]

class_hierarchy = hierarchy

keywords = ["title","authors","body","copyright","isbn"]

Then, we transform the labels and divide train and test set. Here, we differentiate between the training and the test set blocks, since they depend on different data chunks.

mlb = MultiLabelBinarizer()

#depending on the mode load different data

if train==1:

data_train=["\n".join([tk[ky] for ky in keywords if tk[ky]!=None]) for tk in data ]

labels_train=mlb.fit_transform(labels)

X_train_raw, X_dev_raw, y_train, y_dev = train_test_split(data_train,labels_train,test_size=0.2,random_state=42)

else:

X_train_raw=["\n".join([tk[ky] for ky in keywords if tk[ky]!=None]) for tk in data ]

y_train=mlb.fit_transform(labels)

y_train = mlb.transform(labels)

del data

ids= [tk["isbn"] for tk in data_dev]

X_dev_raw=["\n".join([tk[ky] for ky in keywords if tk[ky]!=None]) for tk in data_dev ]

We now initialize the classification pipeline. First the vectorizer, then a linear SVM in a one versus rest manner. We glue this together in a pipeline creating a base classifier. The pipeline ensures that the result of the vectorizer is given as input to the classifier. For subtask A we could use just this base classifier (using more ngrams would be helpful, though) and set the right y-labels. For subtask B, we call the hierarchical classifier with this as base classifier. The selection of algorithm lcn and training_strategy siblings makes that the base classifier is trained in each node so that it decides which child of the parent is likely to be predicted.

vectorizer = build_feature_extractor()

bclf = OneVsRestClassifier(LinearSVC())

base_estimator = make_pipeline(

vectorizer, bclf)

clf = HierarchicalClassifier(

base_estimator=base_estimator,

class_hierarchy=class_hierarchy,

algorithm="lcn", training_strategy="siblings",

preprocessing=True,

mlb=mlb,

use_decision_function=True

)

To execute the training and prediction methods is really easy:

print("training classifier")

clf.fit(X_train_raw, y_train[:,:])

print("predicting")

y_pred_scores = clf.predict_proba(X_dev_raw)

training classifier

/home/fbenites/virtualenvs/germeval/lib/python3.6/site-packages/sklearn/multiclass.py:76: UserWarning: Label not 8 is present in all training examples. str(classes[c])) /home/fbenites/virtualenvs/germeval/lib/python3.6/site-packages/sklearn/multiclass.py:76: UserWarning: Label not 9 is present in all training examples. str(classes[c])) .... (lots of warnings, no worries)

predicting

However, what gave us the edge over the other approaches was to set a very good threshold for turning the confidence outputs into crisp predictions. First, we need to post-process the predictions of the SVM-hierarchical classifier.

The classifier linearSVC outputs values between -1 and 1. But for nodes which did not have any prediction, the hierarchical classifier let it to 0. So first we set every value which is zero to a lower value than -1 (here -10).

y_pred_scores[np.where(y_pred_scores==0)]=-10

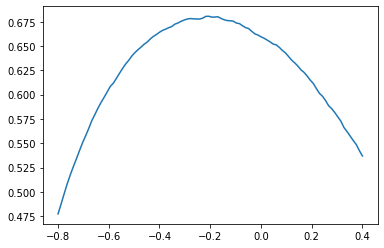

Now we choose a good threshold. Lets see how the graph was for the development set, if we change the threshold.

import matplotlib.pyplot as plt x_graph=np.linspace(-0.8,0.4,100) y_graph=[f1_score(y_true=y_dev, y_pred=y_pred_scores>tx, average='micro') for tx in x_graph] plt.plot(x_graph,y_graph)

[<matplotlib.lines.Line2D at 0x7f8945845cf8>]

We can see that the F1-score-curve is almost convex and the top is around -0.2. We chose -0.25 because it seemed more stable on the right side of the top. Using the standard threshold at 0 would give only 0.65 instead of 0.67 (0.02 was a lot in this competition).

y_pred=y_pred_scores>-0.25

We print it out (if you want you can use train=0 and get the predictions for the final result).

if train==1:

print('f1 micro:',

f1_score(y_true=y_dev, y_pred=y_pred, average='micro'))

print('f1 macro:',

f1_score(y_true=y_dev, y_pred=y_pred, average='macro'))

print(classification_report(y_true=y_dev, y_pred=y_pred))

else:

import networkx as nx

graph = nx.DiGraph(hierarchy)

print_results("submission_baseline.txt",hierarchy,y_pred>-0.25,mlb,ids,graph)

f1 micro: 0.677735500980053

f1 macro: 0.24667277524584916

precision recall f1-score support

0 1.00 0.72 0.84 32

1 0.71 0.80 0.75 138

2 0.86 0.69 0.76 112

3 0.88 0.85 0.87 412

4 0.94 0.77 0.85 22

5 0.87 0.98 0.92 1608

6 0.79 0.86 0.82 394

7 0.70 0.72 0.71 412

8 0.62 0.56 0.59 61

9 0.57 0.58 0.57 106

10 0.00 0.00 0.00 16

11 1.00 0.45 0.62 11

12 0.00 0.00 0.00 5

13 0.56 0.71 0.63 14

14 0.00 0.00 0.00 1

15 0.60 0.45 0.51 83

16 0.00 0.00 0.00 4

17 1.00 0.36 0.53 14

18 0.00 0.00 0.00 2

19 0.63 0.71 0.67 17

20 0.47 0.62 0.54 193

21 0.81 0.74 0.77 57

22 0.50 0.18 0.27 11

23 0.92 0.65 0.76 34

24 0.00 0.00 0.00 0

25 0.88 0.90 0.89 71

26 0.33 0.10 0.15 31

27 0.82 0.81 0.81 154

28 0.78 0.56 0.65 82

29 0.85 0.63 0.72 27

30 1.00 0.57 0.73 7

31 0.70 0.83 0.76 243

32 0.80 0.64 0.71 44

33 0.00 0.00 0.00 9

34 0.64 0.60 0.62 62

35 1.00 0.44 0.62 9

36 1.00 0.46 0.63 13

37 0.00 0.00 0.00 4

38 0.74 0.74 0.74 19

39 1.00 0.06 0.12 16

40 0.77 0.90 0.83 78

41 1.00 0.17 0.29 6

42 0.00 0.00 0.00 6

43 0.00 0.00 0.00 4

44 0.89 0.54 0.67 76

45 0.00 0.00 0.00 11

46 0.93 0.64 0.76 22

47 1.00 0.05 0.09 21

48 0.62 0.16 0.25 32

49 0.52 0.38 0.44 29

50 0.67 0.14 0.24 14

51 0.79 0.90 0.84 377

52 0.86 0.21 0.34 28

53 0.80 0.67 0.73 12

54 0.50 0.05 0.09 20

55 0.89 0.53 0.67 15

56 0.58 0.45 0.51 42

57 0.00 0.00 0.00 19

58 0.52 0.51 0.52 110

59 0.57 0.63 0.60 128

60 0.00 0.00 0.00 18

61 0.80 0.25 0.38 16

62 0.00 0.00 0.00 8

63 0.00 0.00 0.00 1

64 0.00 0.00 0.00 1

65 1.00 1.00 1.00 2

66 0.00 0.00 0.00 4

67 0.86 0.71 0.77 17

68 0.20 0.11 0.14 9

69 0.33 0.32 0.32 19

70 0.70 0.19 0.30 37

71 0.33 0.08 0.12 13

72 0.59 0.60 0.60 112

73 0.62 0.28 0.38 65

74 0.00 0.00 0.00 10

75 0.74 0.48 0.58 29

76 0.00 0.00 0.00 1

77 0.00 0.00 0.00 1

78 0.00 0.00 0.00 3

79 1.00 0.25 0.40 4

80 0.00 0.00 0.00 0

81 0.93 0.46 0.62 28

82 0.61 0.75 0.67 492

83 0.92 0.32 0.47 38

84 0.75 0.21 0.33 14

85 0.27 0.12 0.17 25

86 0.00 0.00 0.00 1

87 0.00 0.00 0.00 5

88 0.43 0.18 0.25 17

89 0.93 0.87 0.90 231

90 0.60 0.47 0.53 51

91 1.00 0.11 0.20 9

92 0.67 0.40 0.50 5

93 0.75 0.33 0.46 9

94 0.78 0.76 0.77 38

95 0.45 0.22 0.29 23

96 0.82 0.44 0.57 32

97 1.00 0.06 0.12 16

98 0.00 0.00 0.00 14

99 0.67 0.20 0.31 10

100 0.80 0.33 0.47 12

101 0.00 0.00 0.00 2

102 1.00 0.10 0.18 10

103 0.00 0.00 0.00 1

104 0.00 0.00 0.00 2

105 0.85 0.57 0.68 30

106 0.00 0.00 0.00 4

107 1.00 0.29 0.44 7

108 0.00 0.00 0.00 1

109 0.60 0.19 0.29 16

110 0.38 0.62 0.47 37

111 0.86 0.50 0.63 12

112 0.00 0.00 0.00 0

113 0.00 0.00 0.00 4

114 0.00 0.00 0.00 5

115 0.00 0.00 0.00 0

116 0.00 0.00 0.00 1

117 0.00 0.00 0.00 0

118 0.00 0.00 0.00 0

119 0.00 0.00 0.00 2

120 0.00 0.00 0.00 1

121 0.71 0.42 0.53 12

122 0.00 0.00 0.00 1

123 0.00 0.00 0.00 1

124 0.00 0.00 0.00 1

125 0.00 0.00 0.00 4

126 0.32 0.50 0.39 44

127 0.00 0.00 0.00 2

128 0.00 0.00 0.00 0

129 0.00 0.00 0.00 0

130 0.00 0.00 0.00 1

131 1.00 0.60 0.75 5

132 0.00 0.00 0.00 4

133 1.00 0.25 0.40 4

134 0.45 0.48 0.47 21

135 1.00 0.33 0.50 3

136 1.00 0.22 0.36 9

137 0.00 0.00 0.00 6

138 0.15 0.17 0.16 35

139 0.00 0.00 0.00 2

140 0.00 0.00 0.00 2

141 0.00 0.00 0.00 2

142 1.00 0.35 0.52 23

143 0.75 0.75 0.75 4

144 0.00 0.00 0.00 10

145 1.00 0.50 0.67 2

146 0.22 0.09 0.13 22

147 0.37 0.35 0.36 20

148 0.00 0.00 0.00 0

149 0.00 0.00 0.00 4

150 0.00 0.00 0.00 0

151 0.67 0.36 0.47 11

152 0.20 0.07 0.11 28

153 0.00 0.00 0.00 0

154 0.00 0.00 0.00 1

155 0.00 0.00 0.00 12

156 0.00 0.00 0.00 3

157 0.00 0.00 0.00 3

158 0.00 0.00 0.00 1

159 0.00 0.00 0.00 2

160 0.00 0.00 0.00 0

161 0.00 0.00 0.00 9

162 0.83 0.71 0.77 7

163 0.00 0.00 0.00 6

164 0.00 0.00 0.00 9

165 1.00 0.17 0.29 6

166 0.67 0.73 0.70 11

167 0.94 0.47 0.63 34

168 0.40 0.55 0.46 33

169 0.00 0.00 0.00 2

170 0.67 0.29 0.40 7

171 0.00 0.00 0.00 2

172 0.00 0.00 0.00 0

173 0.00 0.00 0.00 5

174 1.00 0.50 0.67 2

175 1.00 0.70 0.82 10

176 1.00 0.33 0.50 3

177 0.00 0.00 0.00 2

178 0.33 0.08 0.12 13

179 0.00 0.00 0.00 1

180 0.32 0.46 0.38 41

181 0.44 0.84 0.57 37

182 1.00 0.50 0.67 4

183 0.00 0.00 0.00 1

184 1.00 0.75 0.86 4

185 0.00 0.00 0.00 0

186 0.29 0.68 0.41 65

187 0.00 0.00 0.00 1

188 0.00 0.00 0.00 3

189 0.00 0.00 0.00 2

190 0.00 0.00 0.00 2

191 0.00 0.00 0.00 1

192 0.00 0.00 0.00 1

193 0.75 0.19 0.30 16

194 0.00 0.00 0.00 2

195 0.59 0.78 0.67 51

196 0.71 0.62 0.67 32

197 0.00 0.00 0.00 2

198 0.67 0.40 0.50 15

199 0.00 0.00 0.00 2

200 0.00 0.00 0.00 3

201 0.24 0.19 0.21 26

202 0.00 0.00 0.00 1

203 0.50 0.50 0.50 2

204 0.00 0.00 0.00 6

205 0.00 0.00 0.00 4

206 0.00 0.00 0.00 3

207 0.00 0.00 0.00 0

208 0.00 0.00 0.00 5

209 0.00 0.00 0.00 12

210 1.00 0.12 0.22 8

211 0.00 0.00 0.00 0

212 0.48 0.81 0.60 16

213 0.00 0.00 0.00 5

214 0.00 0.00 0.00 3

215 0.62 0.80 0.70 10

216 0.00 0.00 0.00 1

217 0.00 0.00 0.00 2

218 0.00 0.00 0.00 0

219 0.00 0.00 0.00 7

220 0.00 0.00 0.00 3

221 0.00 0.00 0.00 2

222 0.69 0.65 0.67 17

223 0.83 0.71 0.77 7

224 0.45 0.21 0.29 24

225 0.00 0.00 0.00 0

226 0.67 0.36 0.47 11

227 0.29 0.11 0.15 19

228 0.67 0.67 0.67 6

229 0.00 0.00 0.00 0

230 1.00 0.33 0.50 3

231 0.00 0.00 0.00 3

232 0.67 0.33 0.44 12

233 0.00 0.00 0.00 4

234 0.00 0.00 0.00 5

235 0.00 0.00 0.00 2

236 0.00 0.00 0.00 1

237 0.00 0.00 0.00 4

238 0.55 0.55 0.55 11

239 0.00 0.00 0.00 3

240 0.00 0.00 0.00 2

241 0.00 0.00 0.00 3

242 0.00 0.00 0.00 2

243 0.00 0.00 0.00 4

244 0.00 0.00 0.00 5

245 0.00 0.00 0.00 2

246 0.00 0.00 0.00 1

247 0.00 0.00 0.00 1

248 0.57 0.33 0.42 12

249 0.00 0.00 0.00 1

250 0.50 0.10 0.17 10

251 0.17 0.25 0.20 4

252 0.00 0.00 0.00 5

253 1.00 0.20 0.33 5

254 0.50 0.40 0.44 10

255 0.00 0.00 0.00 6

256 0.00 0.00 0.00 1

257 0.00 0.00 0.00 5

258 0.00 0.00 0.00 2

259 0.25 0.04 0.07 25

260 0.80 0.42 0.55 19

261 0.00 0.00 0.00 4

262 0.00 0.00 0.00 5

263 0.00 0.00 0.00 20

264 0.00 0.00 0.00 6

265 1.00 0.20 0.33 20

266 0.00 0.00 0.00 0

267 0.00 0.00 0.00 13

268 0.60 0.60 0.60 5

269 0.00 0.00 0.00 5

270 0.93 0.43 0.59 30

271 0.00 0.00 0.00 4

272 0.00 0.00 0.00 5

273 0.00 0.00 0.00 3

274 0.21 0.14 0.17 22

275 0.50 0.11 0.18 9

276 0.00 0.00 0.00 10

277 0.24 0.60 0.34 42

278 0.00 0.00 0.00 2

279 0.33 0.50 0.40 2

280 0.00 0.00 0.00 0

281 0.83 0.38 0.53 13

282 0.00 0.00 0.00 8

283 0.00 0.00 0.00 12

284 0.58 0.71 0.64 80

285 1.00 0.89 0.94 9

286 1.00 0.50 0.67 4

287 0.00 0.00 0.00 7

288 0.00 0.00 0.00 3

289 0.00 0.00 0.00 7

290 1.00 0.33 0.50 9

291 0.00 0.00 0.00 2

292 0.00 0.00 0.00 0

293 0.00 0.00 0.00 4

294 1.00 0.75 0.86 8

295 0.00 0.00 0.00 10

296 0.32 0.31 0.31 26

297 0.00 0.00 0.00 23

298 0.52 0.71 0.60 76

299 0.80 0.40 0.53 10

300 0.00 0.00 0.00 1

301 1.00 0.20 0.33 10

302 0.00 0.00 0.00 3

303 0.50 0.39 0.44 18

304 0.00 0.00 0.00 0

305 0.00 0.00 0.00 0

306 0.00 0.00 0.00 4

307 1.00 0.17 0.29 6

308 0.00 0.00 0.00 3

309 1.00 0.25 0.40 4

310 0.00 0.00 0.00 1

311 0.00 0.00 0.00 1

312 0.00 0.00 0.00 2

313 0.60 0.43 0.50 7

314 0.00 0.00 0.00 1

315 0.00 0.00 0.00 0

316 0.00 0.00 0.00 1

317 0.66 0.73 0.69 48

318 0.00 0.00 0.00 2

319 0.00 0.00 0.00 2

320 0.00 0.00 0.00 1

321 0.00 0.00 0.00 5

322 0.00 0.00 0.00 7

323 0.00 0.00 0.00 2

324 0.00 0.00 0.00 3

325 0.00 0.00 0.00 5

326 0.00 0.00 0.00 8

327 0.00 0.00 0.00 2

328 0.00 0.00 0.00 0

329 1.00 1.00 1.00 2

330 0.00 0.00 0.00 0

331 0.00 0.00 0.00 1

332 0.67 0.17 0.27 12

333 1.00 0.50 0.67 2

334 0.00 0.00 0.00 1

335 1.00 0.12 0.22 8

336 0.00 0.00 0.00 1

337 0.00 0.00 0.00 3

338 0.50 0.33 0.40 3

339 0.67 0.60 0.63 10

340 0.00 0.00 0.00 9

341 0.00 0.00 0.00 2

342 0.62 0.33 0.43 15

micro avg 0.71 0.65 0.68 9034

macro avg 0.34 0.22 0.25 9034

weighted avg 0.67 0.65 0.64 9034

samples avg 0.73 0.71 0.68 9034

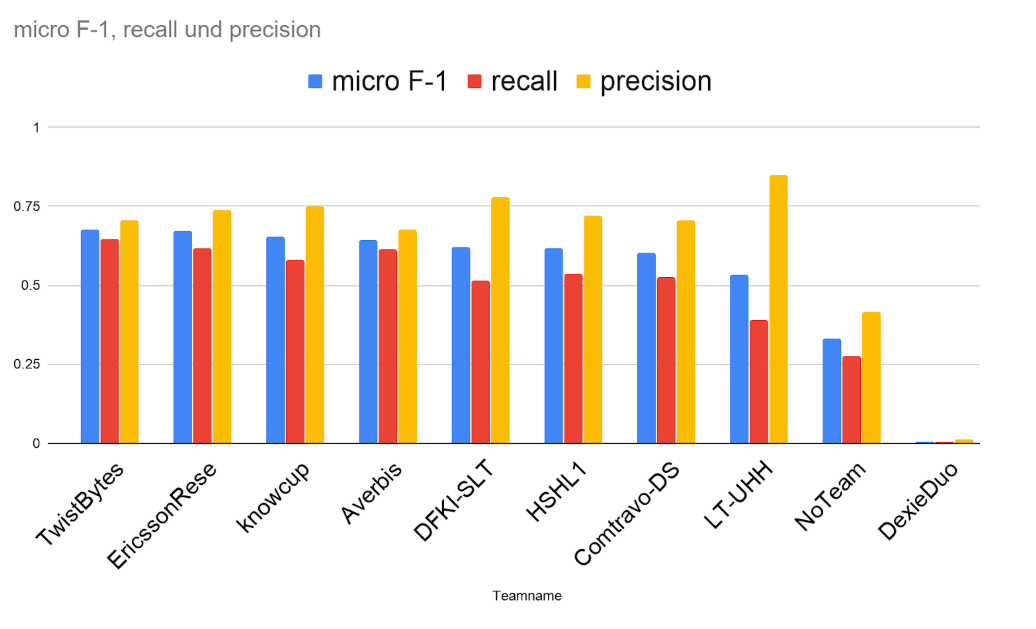

At the end in the competition, in the figure below, we see that in this subtask the micro-F1 was linked to recall, since most approaches focused in precision, we could achieve with the threshold strategy the best result.

We thank the organizers of the GermEval 2019 Task 1 shared task, it was a fun competition.