Introduction

I introduced Disaster Recovery (DR) services in the tutorial in the last ICCLab newsletter, which also gave an overview of possible OpenStack configurations. Several configuration options could be considered. In particular, when a stakeholder has both the role of Cloud provider and DR service provider, a suitably safe configuration consists of distributing the infrastructures across different geographic locations. OpenStack makes it possible to organise the controllers in different Regions that share the same keystone. Here you will find an overall specification using Heat; I will simulate the same configuration using devstack on VirtualBox. One of the aims of this blog post is to support students who are using Juno devstack.

A second part of this tutorial will show a possible implementation of the DR service lifecycle between two regions.

Goals

This post provides devstack (Juno release) support to install OpenStack on two controllers in two separate regions. The second region could, for example, be used as a replica and backup infrastructure for DR services. A single keystone will be shared between the two controllers. As in other tutorials, I will use VirtualBox to host the VMs of the two controllers.

Keystone will therefore need endpoints in two different regions: RegioneOne (the name used in this tutorial instead of the default RegionOne) and RegionTwo. RegionTwo will always go through the keystone of RegioneOne. The Horizon dashboard will be served only from RegioneOne.

I will split the tutorial into two parts; this first part is dedicated to the two controllers.

RegionOne controller

Let’s install and configure the first controller, in RegioneOne, using VirtualBox. Install VirtualBox on your host system, then install Ubuntu Linux from www.Ubuntu.com/download/desktop on a Virtual Machine (VM) with at least 40GB of storage and 3GB of RAM. Call this VM “RegioneOne”.

Install the VirtualBox Guest Additions for Linux.

I suggest starting with a VM that initially has a NAT network attached to Adapter 1, which allows it to download all packages from the internet.

Any line below starting with $ is a command run on the Ubuntu controller node; all other lines are comments on the commands that follow.

Apply all Ubuntu updates and upgrades, then reboot:

$sudo apt-get update

$sudo apt-get upgrade

$sudo apt-get dist-upgrade

$sudo apt-get install virtualbox-guest-dkms

$sudo apt-get install vim

$sudo reboot

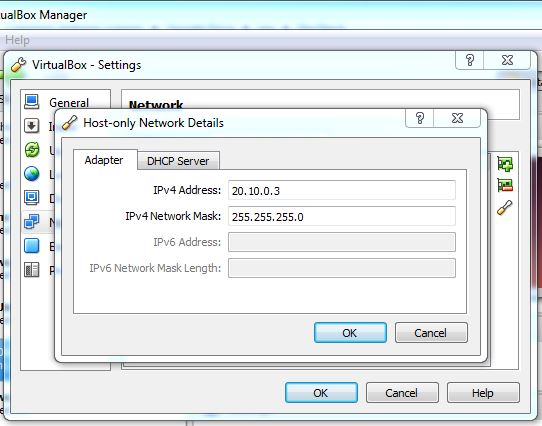

VirtualBox configuration (Figure-1).

The goal is to have two VMs with internal addresses 20.10.0.1 and 20.10.0.2. To do this, open the VirtualBox global settings (Control-G), go to the Network tab and create Host-Only Ethernet Adapter #1 with IPv4 address 20.10.0.3 and IPv4 network mask 255.255.255.0.
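The same host-only network can probably also be created from the host's command line with VBoxManage instead of the GUI. Below is a minimal sketch, not tested against every VirtualBox version; setup_hostonly_if is a hypothetical helper name, and it assumes a Linux or macOS host, where the first host-only interface is usually called vboxnet0 (on Windows, pass the adapter name shown in the Network tab instead).

```shell
# Hypothetical helper: create and address the VirtualBox host-only
# interface used by both controllers (host side, 20.10.0.3/24).
# Assumption: Linux/macOS host, interface name defaults to vboxnet0.
setup_hostonly_if() {
    local ifname="${1:-vboxnet0}"
    local host_ip="${2:-20.10.0.3}"

    # Create the interface only if VirtualBox does not list it yet.
    # VirtualBox chooses the new interface's name itself (vboxnet0 for
    # the first one), so make sure it matches $ifname.
    if ! VBoxManage list hostonlyifs | grep -q "^Name: *${ifname}\$"; then
        VBoxManage hostonlyif create
    fi

    # Give the host side of the network its IPv4 address and /24 mask.
    VBoxManage hostonlyif ipconfig "$ifname" --ip "$host_ip" --netmask 255.255.255.0
}
```

Run setup_hostonly_if on the host, then check with VBoxManage list hostonlyifs that the interface shows the address 20.10.0.3.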

Figure-1

Then configure the interfaces of the Virtual Machine as follows:

eth0: Host-only Adapter #1

IPv4=20.10.0.1 and IPv4 Network Mask 255.255.255.0

eth1: NAT
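The two adapters can likewise be attached from the host, and eth0 given its static address inside the guest. A rough sketch follows; attach_nics and eth0_stanza are hypothetical helper names, the VM must be powered off for modifyvm, and the guest side assumes Ubuntu's ifupdown file /etc/network/interfaces and that Adapter 1 shows up as eth0 in the guest, as it normally does.

```shell
# Hypothetical helper (host side): Adapter 1 = host-only, Adapter 2 = NAT.
# Assumption: the host-only interface is vboxnet0 and the VM is powered off.
attach_nics() {
    local vm="$1" ifname="${2:-vboxnet0}"
    # Adapter 1 (eth0 in the guest): host-only network 20.10.0.0/24.
    VBoxManage modifyvm "$vm" --nic1 hostonly --hostonlyadapter1 "$ifname"
    # Adapter 2 (eth1 in the guest): NAT, for internet access.
    VBoxManage modifyvm "$vm" --nic2 nat
}

# Hypothetical helper (guest side): print an ifupdown stanza giving eth0
# a static address and leaving eth1 (NAT) on DHCP.
# Assumption: the guest uses /etc/network/interfaces.
eth0_stanza() {
    local ip="$1"
    cat <<EOF
auto eth0
iface eth0 inet static
    address $ip
    netmask 255.255.255.0

auto eth1
iface eth1 inet dhcp
EOF
}
```

On the host: attach_nics RegioneOne. Inside the guest: eth0_stanza 20.10.0.1 (or 20.10.0.2 on the second controller), then put its output into /etc/network/interfaces in place of any existing eth0/eth1 entries and reboot.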

Install git so that you can clone devstack from its repository:

$sudo apt-get install git

Retrieve the devstack source (stable/juno branch):

$git clone -b stable/juno https://github.com/openstack-dev/devstack.git

$cd devstack

I will configure devstack through the localrc file.

I found a very good tutorial by a good friend of mine, Ian Y. Choi, who proposed a good localrc file stored at http://goo.gl/OeOGqL and another one here.

The localrc file is read by stack.sh during the devstack installation.

Please watch Ian’s video tutorials to understand some of the localrc syntax and all the Ubuntu installation commands. I have used the stable/juno branch instead, which correctly handles the regions and the keystone service host.

The localrc file I suggest is the following (create it with vim in the devstack directory):

REGION_NAME=RegioneOne

OFFLINE=False

GIT_BASE=https://github.com

HOST_IP=20.10.0.1

# Logging

LOGDAYS=1

LOGFILE=$DEST/logs/stack.sh.log

SCREEN_LOGDIR=$DEST/logs/screen

VERBOSE=TRUE

# Credentials

DATABASE_PASSWORD=password

ADMIN_PASSWORD=password

SERVICE_PASSWORD=password

SERVICE_TOKEN=password

RABBIT_PASSWORD=password

RECLONE=yes

# Services

ENABLED_SERVICES=rabbit,mysql,key

ENABLED_SERVICES+=,n-api,n-crt,n-obj,n-cpu,n-cond,n-sch,n-novnc,n-cauth

ENABLED_SERVICES+=,neutron,q-svc,q-agt,q-dhcp,q-l3,q-meta

ENABLED_SERVICES+=,g-api,g-reg

ENABLED_SERVICES+=,cinder,c-api,c-vol,c-sch,c-bak

ENABLED_SERVICES+=,horizon

enable_service ceilometer-acompute ceilometer-acentral ceilometer-anotification ceilometer-collector

enable_service ceilometer-alarm-evaluator ceilometer-alarm-notifier

enable_service ceilometer-api

enable_service heat h-api h-api-cfn h-api-cw h-eng

In the Icehouse release, keystone does not read REGION_NAME=RegioneOne; the region is hardcoded to RegionOne.

Let’s start stacking in RegioneOne (we use RegioneOne instead of the default RegionOne):

$./stack.sh

After about 5 minutes on my i7 machine, stack.sh displays:

Horizon is now available at http://20.10.0.1/

Keystone is serving at http://20.10.0.1:5000/v2.0/

Examples on using novaclient command line is in exercise.sh

The default users are: admin and demo

The password: password

This is your host ip: 20.10.0.1

Let’s source the environment with the openrc file, as described in the README:

$source openrc admin

$env | grep OS_

The results are:

OS_REGION_NAME=RegioneOne

OS_IDENTITY_API_VERSION=2.0

OS_PASSWORD=password

OS_AUTH_URL=http://20.10.0.1:5000/v2.0

OS_USERNAME=admin

OS_TENANT_NAME=demo

OS_VOLUME_API_VERSION=2

OS_CACERT=/opt/stack/data/CA/int-ca/ca-chain.pem

OS_NO_CACHE=1
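A quick way to catch a wrong openrc before running any client command is to compare the two variables that matter most in this setup. This is a small sketch; check_os_env is a hypothetical helper name, and its default auth URL is the shared keystone on controller one.

```shell
# Hypothetical helper: verify that the sourced openrc points at the
# expected region and at the shared keystone on controller one.
# Returns 0 if both match, 1 otherwise (mismatches go to stderr).
check_os_env() {
    local want_region="$1" want_auth="${2:-http://20.10.0.1:5000/v2.0}"
    local rc=0
    if [ "${OS_REGION_NAME:-}" != "$want_region" ]; then
        echo "OS_REGION_NAME is '${OS_REGION_NAME:-}', expected '$want_region'" >&2
        rc=1
    fi
    if [ "${OS_AUTH_URL:-}" != "$want_auth" ]; then
        echo "OS_AUTH_URL is '${OS_AUTH_URL:-}', expected '$want_auth'" >&2
        rc=1
    fi
    return "$rc"
}
```

Use check_os_env RegioneOne on controller one and check_os_env RegionTwo on controller two, where OS_AUTH_URL must still point at 20.10.0.1.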

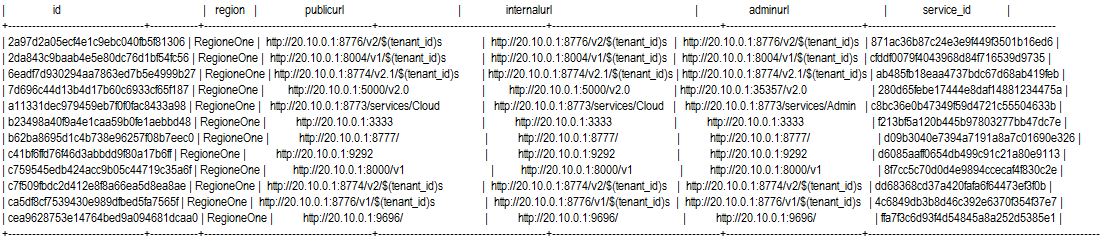

Now get the current endpoints from keystone:

$keystone endpoint-list

You should get all the endpoints in RegioneOne on the IP: 20.10.0.1 controller one.

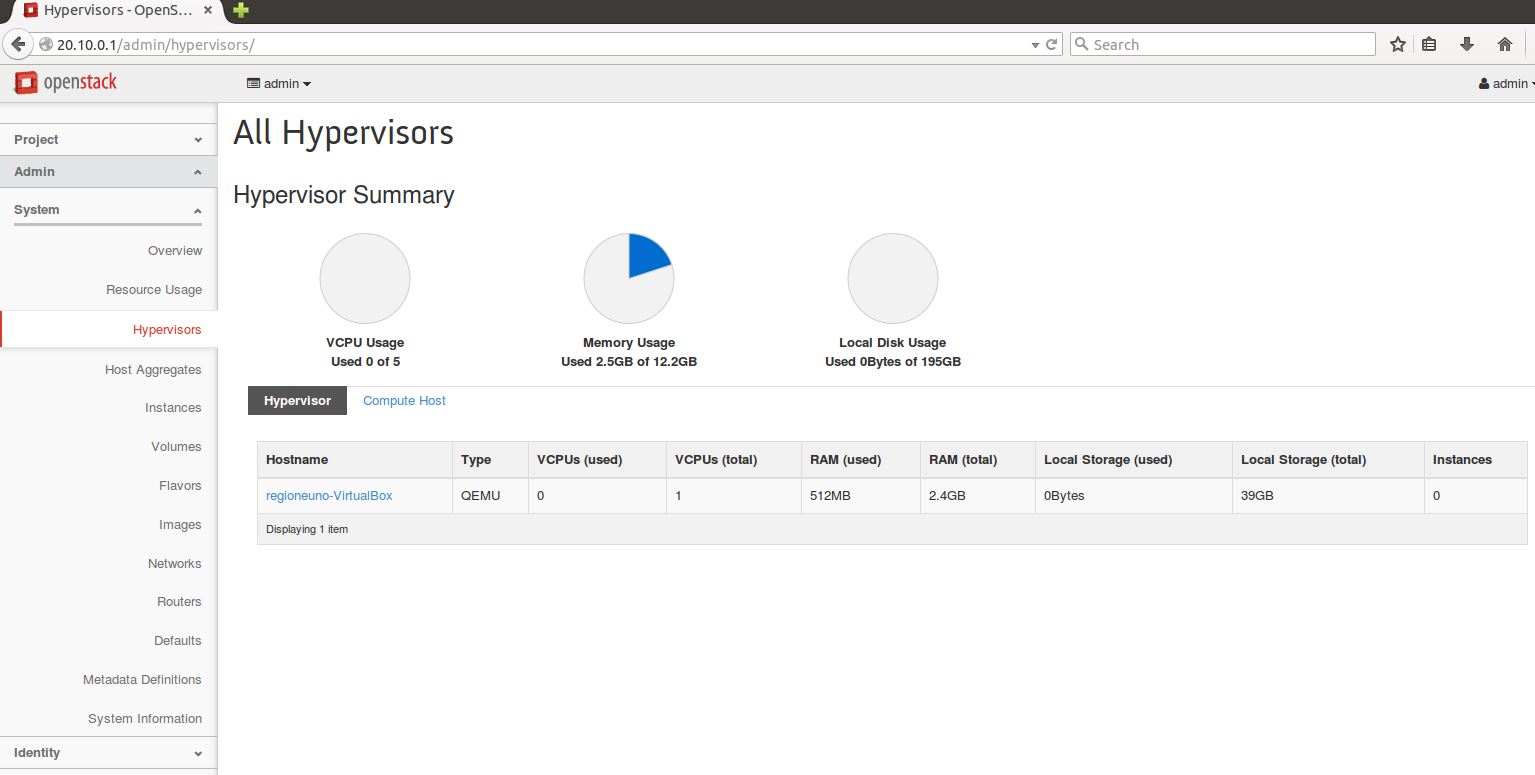

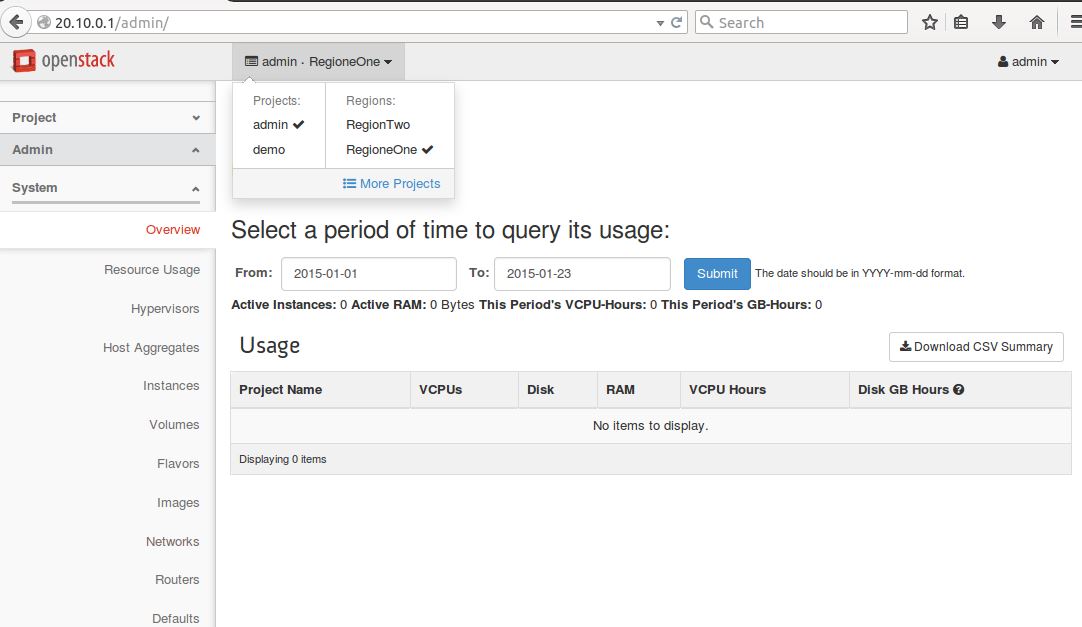
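To see at a glance how many endpoints each region has, the endpoint-list table can be summarised with awk. A sketch, assuming the Juno-era keystone client prints a table whose first two columns are id and region; endpoints_per_region is a hypothetical helper name.

```shell
# Hypothetical helper: read a "keystone endpoint-list" table on stdin and
# print "<region> <number of endpoints>", one line per region.
# Assumption: columns are | id | region | publicurl | ... |
endpoints_per_region() {
    # Data and header rows start with "|"; after splitting on "|",
    # field 3 is the region column. Border rows start with "+".
    awk -F'|' '/^\|/ {
        r = $3
        gsub(/^ +| +$/, "", r)
        if (r != "region") count[r]++
    }
    END { for (r in count) print r, count[r] }' | sort
}
```

Usage: $keystone endpoint-list | endpoints_per_region. At this point only RegioneOne should appear; after stacking the second controller, RegionTwo should show up as well.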

Horizon is also working on http://20.10.0.1/ (Figure-2).

Figure-2

RegionTwo controller

Let’s install and configure the second controller, in RegionTwo, using VirtualBox as well. Install Ubuntu Linux from www.Ubuntu.com/download/desktop on a VM called “RegionTwo” in VirtualBox, with at least 40GB of storage and 3GB of RAM. Install the VirtualBox Guest Additions for Linux.

Start with a VM that initially has a NAT network attached to Adapter 1, which allows it to download all packages from the internet.

Apply all Ubuntu updates and upgrades, then reboot:

$sudo apt-get update

$sudo apt-get upgrade

$sudo apt-get dist-upgrade

$sudo apt-get install virtualbox-guest-dkms

$sudo apt-get install vim

$sudo reboot

Then configure the interfaces of this second machine as follows:

eth0: Host-only Adapter #1

IPv4=20.10.0.2 and IPv4 Network Mask 255.255.255.0

eth1: NAT

Install git so that you can clone devstack from its repository:

$sudo apt-get install git

Retrieve the devstack source (stable/juno branch):

$git clone -b stable/juno https://github.com/openstack-dev/devstack.git

$cd devstack
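Before writing the localrc and stacking, it is worth checking that this VM can reach the shared keystone on controller one over the host-only network, since RegionTwo depends on it. A minimal sketch, assuming curl is installed (sudo apt-get install curl); keystone_reachable is a hypothetical helper name.

```shell
# Hypothetical helper: check from controller two that keystone on
# controller one answers. Returns 0 if reachable, 1 otherwise.
keystone_reachable() {
    local url="${1:-http://20.10.0.1:5000/v2.0/}"
    # -s: quiet, -f: fail on HTTP errors, -m 5: give up after 5 seconds,
    # -o /dev/null: discard the version document keystone returns.
    if curl -sf -m 5 -o /dev/null "$url"; then
        echo "keystone reachable at $url"
    else
        echo "cannot reach keystone at $url; check eth0 and the RegioneOne VM" >&2
        return 1
    fi
}
```

If keystone_reachable fails, fix the host-only networking first; stack.sh on RegionTwo needs this keystone to register its endpoints.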

Again, configure devstack through a localrc file created with vim:

#Keystone service on controller 1

KEYSTONE_SERVICE_HOST=20.10.0.1

KEYSTONE_AUTH_HOST=20.10.0.1

REGION_NAME=RegionTwo

OFFLINE=False

GIT_BASE=https://github.com

HOST_IP=20.10.0.2

# Logging

LOGDAYS=1

LOGFILE=$DEST/logs/stack.sh.log

SCREEN_LOGDIR=$DEST/logs/screen

VERBOSE=TRUE

# Credentials

DATABASE_PASSWORD=password

ADMIN_PASSWORD=password

SERVICE_PASSWORD=password

SERVICE_TOKEN=password

RABBIT_PASSWORD=password

RECLONE=yes

# Services

ENABLED_SERVICES=rabbit,mysql,key

ENABLED_SERVICES+=,n-api,n-crt,n-obj,n-cpu,n-cond,n-sch,n-novnc,n-cauth

ENABLED_SERVICES+=,neutron,q-svc,q-agt,q-dhcp,q-l3,q-meta

ENABLED_SERVICES+=,g-api,g-reg

ENABLED_SERVICES+=,cinder,c-api,c-vol,c-sch,c-bak

enable_service heat h-api h-api-cfn h-api-cw h-eng
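The RegionTwo localrc differs from the RegioneOne one mainly in its first lines (the two KEYSTONE_* host variables, REGION_NAME and HOST_IP) and in leaving out horizon and ceilometer. If you would rather generate those lines than type them, here is a sketch; localrc_header is a hypothetical helper name, and the shared logging, credential and service sections still have to be appended by hand.

```shell
# Hypothetical helper: print the region-specific first lines of a localrc.
#   $1 region name, $2 this controller's HOST_IP,
#   $3 (optional) IP of the controller running the shared keystone.
localrc_header() {
    local region="$1" host_ip="$2" keystone_host="${3:-}"
    if [ -n "$keystone_host" ]; then
        # Secondary region: use the keystone running on controller one.
        echo "#Keystone service on controller 1"
        echo "KEYSTONE_SERVICE_HOST=$keystone_host"
        echo "KEYSTONE_AUTH_HOST=$keystone_host"
    fi
    echo "REGION_NAME=$region"
    echo "OFFLINE=False"
    echo "GIT_BASE=https://github.com"
    echo "HOST_IP=$host_ip"
}
```

For example, localrc_header RegionTwo 20.10.0.2 20.10.0.1 > localrc on controller two, or localrc_header RegioneOne 20.10.0.1 > localrc on controller one, then append the remaining sections with vim.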

Now stack it:

$./stack.sh

The results are almost the same as on controller one:

Keystone is serving at http://20.10.0.1:5000/v2.0/

Examples on using novaclient command line is in exercise.sh

The default users are: admin and demo

The password: password

This is your host ip: 20.10.0.2

Let’s show the environment on RegionTwo:

$source openrc admin

$env | grep OS_

The results are now:

OS_REGION_NAME=RegionTwo

OS_IDENTITY_API_VERSION=2.0

OS_PASSWORD=password

OS_AUTH_URL=http://20.10.0.1:5000/v2.0

OS_USERNAME=admin

OS_TENANT_NAME=demo

OS_VOLUME_API_VERSION=2

OS_CACERT=/opt/stack/data/CA/int-ca/ca-chain.pem

OS_NO_CACHE=1

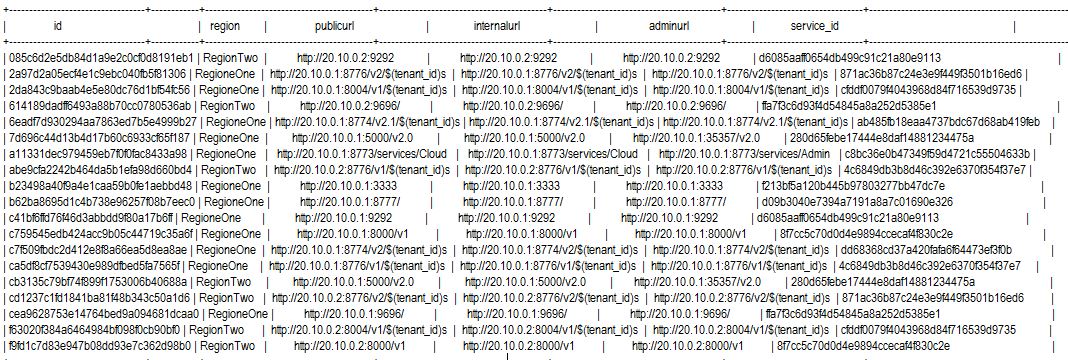

Let’s test keystone now:

Fantastic! Keystone has been populated with all the endpoints of RegionTwo. This was done during ./stack.sh on RegionTwo.
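To check which host the RegionTwo endpoints point at, the public URLs of one region can be filtered out of the endpoint-list table. A sketch, assuming the Juno-era keystone client prints the columns id | region | publicurl | ... in that order; region_public_urls is a hypothetical helper name.

```shell
# Hypothetical helper: read a "keystone endpoint-list" table on stdin and
# print the publicurl of every endpoint in the region given as $1.
# Assumption: columns are | id | region | publicurl | ... |
region_public_urls() {
    awk -F'|' -v want="$1" '/^\|/ {
        r = $3; u = $4
        # Strip the padding spaces around each cell.
        gsub(/^ +| +$/, "", r); gsub(/^ +| +$/, "", u)
        if (r == want) print u
    }'
}
```

Usage: $keystone endpoint-list | region_public_urls RegionTwo. The compute, image and volume URLs should normally carry 20.10.0.2, the HOST_IP of controller two.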

Let’s also use Horizon (Figure-3) from the web browser of controller two: http://20.10.0.1/

Figure-3

The dashboard now lets you select RegioneOne or RegionTwo from the admin tab, in the top-left corner. The second part of this tutorial will also cover some dashboard use cases.

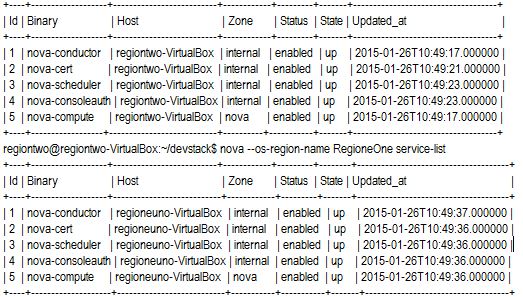

Let’s now check the nova services with these commands from the RegionTwo CLI:

$nova service-list

$nova --os-region-name RegionTwo service-list
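The same check can be looped over both regions from a single shell. A short sketch; services_all_regions is a hypothetical helper name, and it assumes openrc has already been sourced so that nova authenticates against the shared keystone.

```shell
# Hypothetical helper: run "nova service-list" once for each region
# given as an argument, with a header line before each table.
services_all_regions() {
    local region
    for region in "$@"; do
        echo "== $region =="
        nova --os-region-name "$region" service-list
    done
}
```

Usage: services_all_regions RegioneOne RegionTwo.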

You can verify that all nova services are running on the two hosts, in RegionTwo and RegioneOne. The host names displayed are the VirtualBox VM names.

Let’s conclude this post with an example of how to boot OpenStack VMs from RegioneOne on both RegionTwo and RegioneOne.

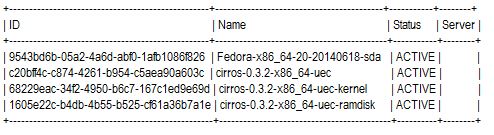

Controller One:

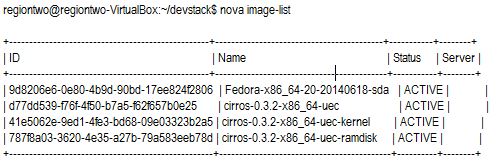

$nova image-list

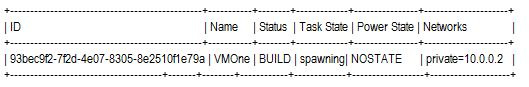

$nova --os-region-name RegioneOne boot VMOne --flavor=m1.tiny --image c20bff4c-c874-4261-b954-c5aea90a603c

$nova list

VMOne is in BUILD status on RegioneOne. Let’s run the same command to boot an image on RegionTwo. In this case the image ID must be taken from RegionTwo:

Controller Two:

$nova image-list
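Because each region has its own glance, the same cirros image has a different ID in RegioneOne and RegionTwo. Instead of copying the ID by hand, it can be looked up by name. A sketch, assuming the Juno-era novaclient image-list table starts with the ID and Name columns; image_id is a hypothetical helper name.

```shell
# Hypothetical helper: print the ID of the image named $2 in region $1.
# Assumption: "nova image-list" prints | ID | Name | Status | Server |.
# The name must match exactly, so similarly named kernel or ramdisk
# images are not picked up by mistake.
image_id() {
    local region="$1" name="$2"
    nova --os-region-name "$region" image-list |
        awk -F'|' -v want="$name" '/^\|/ {
            id = $2; n = $3
            gsub(/^ +| +$/, "", id); gsub(/^ +| +$/, "", n)
            if (n == want) { print id; exit }
        }'
}
```

For example, IMAGE=$(image_id RegionTwo cirros-0.3.2-x86_64-uec) followed by nova --os-region-name RegionTwo boot VMTwo --flavor=m1.tiny --image "$IMAGE".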

If you run $nova list on this second controller, there will be no running VMs. You can verify this.

Let’s boot, from controller one, a VM called VMTwo (cirros-0.3.2-x86_64-uec) on controller two:

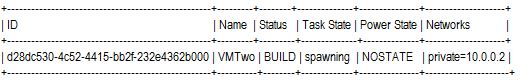

$ nova --os-region-name RegionTwo boot VMTwo --flavor=m1.tiny --image d77dd539-f76f-4f50-b7a5-f62f657b0e25

$nova --os-region-name RegionTwo list

$nova --os-region-name RegioneOne list

As you can verify, we now have two VMs (VMTwo and VMOne) running on RegionTwo and RegioneOne respectively.
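Right after nova boot the servers are in BUILD status. A small polling loop can wait until each one becomes ACTIVE, or fails, in its region. A sketch, assuming the Juno-era nova show output contains a status row in its Property | Value table; wait_active and the POLL_SECONDS variable are hypothetical names.

```shell
# Hypothetical helper: poll server $2 in region $1 until it is ACTIVE.
#   $3 number of polls (default 30); POLL_SECONDS sets the delay (default 5).
# Returns 0 on ACTIVE, 1 on ERROR or if it never becomes ACTIVE.
wait_active() {
    local region="$1" name="$2" tries="${3:-30}" state=""
    while [ "$tries" -gt 0 ]; do
        # Pick the value of the "status" row from the Property | Value table.
        state=$(nova --os-region-name "$region" show "$name" |
            awk -F'|' '/^\|/ {
                k = $2; v = $3
                gsub(/^ +| +$/, "", k); gsub(/^ +| +$/, "", v)
                if (k == "status") print v
            }')
        case "$state" in
            ACTIVE) echo "$name is ACTIVE in $region"; return 0 ;;
            ERROR)  echo "$name is in ERROR in $region" >&2; return 1 ;;
        esac
        tries=$((tries - 1))
        sleep "${POLL_SECONDS:-5}"
    done
    echo "$name still '$state' in $region after polling" >&2
    return 1
}
```

Usage: wait_active RegioneOne VMOne, then wait_active RegionTwo VMTwo.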

Conclusions

We have seen how to install two controllers in two different OpenStack regions sharing the same keystone, which is populated during the stacking phases. We have also given some examples of booting servers in the two separate regions. The Horizon dashboard is also centralised and lets you manage the infrastructures from a web interface by selecting a specific region. Try further tests between the two controllers yourself. The second part of this post will again use the two regions, together with a middleware acting as a survivability manager that implements a simple Disaster Recovery lifecycle as described in the last ICCLab newsletter.

Some improvements to the network configuration will be needed; these will also be part of the next blog post. Stay tuned.

Many thanks to Ian Y. Choi and the devstack community.

Launched instances stay in the spawning state only; they never move to the active state.

It’s a bug in neutron; this issue can be solved by following:

https://bugs.launchpad.net/devstack/+bug/1409589

I made the corrections. It shows the errors below. Can you help me?

Build of instance 872c1652-6b97-4793-be69-6023b9fe82e2 aborted: Failed to allocate the network(s), not rescheduling.

500

File "/opt/stack/nova/nova/compute/manager.py", line 2103, in _do_build_and_run_instance

filter_properties)

File "/opt/stack/nova/nova/compute/manager.py", line 2221, in _build_and_run_instance

reason=msg)

Hi there,

Can you tell me, step by step, how to configure multiple regions in the Liberty release? I have tried a few times but it is not working.

While installing RegionTwo (following exactly the steps mentioned above), running stack.sh for RegionTwo gives this error (the RegionOne IP is 10.10.1.80 and the RegionTwo IP is 10.10.1.106):

2017-07-05 05:42:08.770 | ++userrc_early:source:5 OS_AUTH_URL=http://10.10.1.80:35357

2017-07-05 05:42:08.779 | ++userrc_early:source:6 export OS_USERNAME=admin

2017-07-05 05:42:08.805 | ++userrc_early:source:6 OS_USERNAME=admin

2017-07-05 05:42:08.825 | ++userrc_early:source:7 export OS_USER_DOMAIN_ID=default

2017-07-05 05:42:08.830 | ++userrc_early:source:7 OS_USER_DOMAIN_ID=default

2017-07-05 05:42:08.849 | ++userrc_early:source:8 export OS_PASSWORD=stack

2017-07-05 05:42:08.861 | ++userrc_early:source:8 OS_PASSWORD=stack

2017-07-05 05:42:08.867 | ++userrc_early:source:9 export OS_PROJECT_NAME=admin

2017-07-05 05:42:08.877 | ++userrc_early:source:9 OS_PROJECT_NAME=admin

2017-07-05 05:42:08.884 | ++userrc_early:source:10 export OS_PROJECT_DOMAIN_ID=default

2017-07-05 05:42:08.906 | ++userrc_early:source:10 OS_PROJECT_DOMAIN_ID=default

2017-07-05 05:42:08.911 | ++userrc_early:source:11 export OS_REGION_NAME=RegionTwo

2017-07-05 05:42:08.933 | ++userrc_early:source:11 OS_REGION_NAME=RegionTwo

2017-07-05 05:42:08.942 | +./stack.sh:main:1033 create_keystone_accounts

2017-07-05 05:42:08.965 | +lib/keystone:create_keystone_accounts:372 local admin_tenant

2017-07-05 05:42:08.985 | ++lib/keystone:create_keystone_accounts:373 openstack project show admin -f value -c id

2017-07-05 05:42:13.235 | Could not find resource admin

2017-07-05 05:42:13.305 | +lib/keystone:create_keystone_accounts:373 admin_tenant=

2017-07-05 05:42:13.310 | +lib/keystone:create_keystone_accounts:1 exit_trap

2017-07-05 05:42:13.329 | +./stack.sh:exit_trap:474 local r=1

2017-07-05 05:42:13.347 | ++./stack.sh:exit_trap:475 jobs -p

2017-07-05 05:42:13.366 | +./stack.sh:exit_trap:475 jobs=

2017-07-05 05:42:13.371 | +./stack.sh:exit_trap:478 [[ -n '' ]]

2017-07-05 05:42:13.386 | +./stack.sh:exit_trap:484 kill_spinner

2017-07-05 05:42:13.391 | +./stack.sh:kill_spinner:370 '[' '!' -z '' ']'

2017-07-05 05:42:13.400 | +./stack.sh:exit_trap:486 [[ 1 -ne 0 ]]

2017-07-05 05:42:13.427 | +./stack.sh:exit_trap:487 echo ‘Error on exit’

2017-07-05 05:42:13.427 | Error on exit

2017-07-05 05:42:13.437 | +./stack.sh:exit_trap:488 generate-subunit 1499233057 276 fail

2017-07-05 05:42:13.994 | +./stack.sh:exit_trap:489 [[ -z /opt/stack/logs ]]

2017-07-05 05:42:13.999 | +./stack.sh:exit_trap:492 /home/sanctum/devstack/tools/worlddump.py -d /opt/stack/logs

2017-07-05 05:42:15.265 | +./stack.sh:exit_trap:498 exit 1

Unfortunately, our colleague who wrote this blog post is no longer working here. Also, these instructions are now quite old – I would recommend you contact the devstack community for help.